Apple is researching technology that would free an "Apple Glass" user from fixed physical gestures or touch controls, and instead let them manipulate any object to alter what is visible on the headset.

It's already possible to use Apple's See in AR app to show you a Mac Pro or an iPad Pro on your own desk before ever buying one. You can walk around a Lamborghini Huracan EVO Spyder car in the same way. You just can't do anything with these virtual objects.

You can look, but you can't touch. That's fine, and it's even impressive. But as Apple AR becomes a bigger part of our regular world, and as Apple integrates it with everything else it does, that limitation becomes a problem.

With AR, and especially with what Apple refers to as Mixed Reality (MR), it's great to be able to see an iPad Pro in front of you, but you need to be able to use it. You have to be able to pick up a virtual object and use it, otherwise AR is no better than a 3D movie.

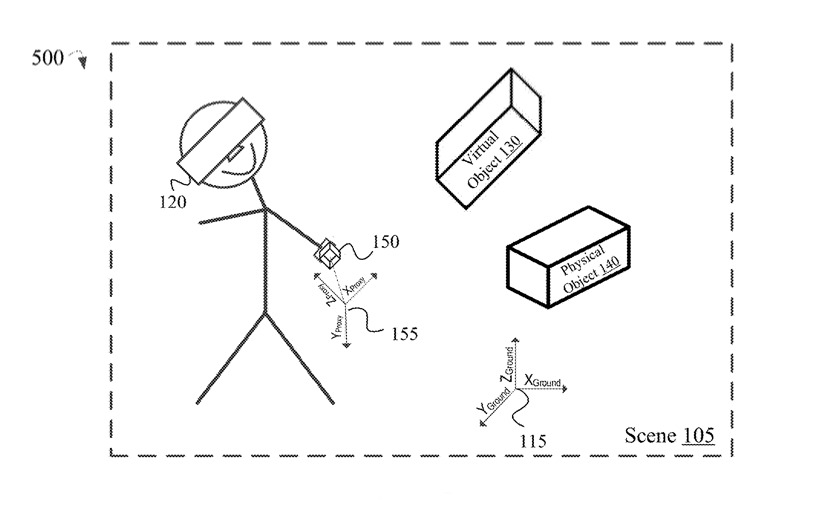

Apple's proposed solution is described in "Manipulation of Virtual Objects using a Tracked Physical Object," a patent application filed in January 2020 but only revealed this week. It suggests truly mixing realities, in that a virtual object could be mapped onto an actual object in the real world.

"Various electronic devices exist, such as head-mounted devices (also known as headsets and HMDs)," begins the application, "with displays that present users with a computer-generated reality (CGR) environment in which they may be fully immersed in a surrounding physical environment, fully immersed in a virtual reality environment comprising virtual objects, or anywhere in between."

"While direct manipulation of physical objects in the surrounding physical environment is available to users naturally, the same is not true for virtual objects in the CGR environment," it continues.

"Lacking a means to directly interact with virtual objects presented to a user as part of a CGR environment limits a degree to which the virtual objects are integrated into the CGR environment. Thus, it may be desirable to provide users with a means of directly manipulating virtual objects presented as part of CGR environments."

Maybe it wouldn't be practical to keep a life-size Lamborghini-shaped block of wood. And maybe it wouldn't be a lot of use to have a Mac Pro-shaped piece of fiberglass. But you could much more usefully have an iPad-shaped and iPad-sized object.

Apple refers to the real-world object as a "proxy device," and says that the same camera systems that are used to position virtual AR objects around the user can also precisely track it.

"Input is received from the proxy device using an input device of the proxy device that represents a request to create a fixed alignment between the virtual object and the virtual representation in a three-dimensional ("3-D") coordinate space defined for the content," says the patent application.

"A position and an orientation of the virtual object in the 3-D coordinate space is dynamically updated using position data that defines movement of the proxy device in the physical environment," it continues.

So if you are holding, say, a rectangular device, the AR/MR system can tell where it is, what orientation it has, what face is up, and so on. It can tell when you move or turn it, which means it knows exactly where to overlay the AR image.
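To make the "fixed alignment" idea concrete, here is a minimal sketch in Python of how a virtual object might be locked to a tracked proxy and then follow it. Everything in it is an assumption for illustration: the `Pose` and `AlignedObject` names are hypothetical, rotation is limited to turning about the vertical axis, and none of it comes from the patent application or from any Apple API.

```python
import math

def rot_z(deg):
    """3x3 rotation matrix for a turn of `deg` degrees about the vertical axis."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def mat_mul(a, b):
    """Multiply two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-element vector."""
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]

class Pose:
    """Hypothetical pose type: an orientation plus a position in metres."""

    def __init__(self, rotation, position):
        self.rotation = rotation
        self.position = position

    def compose(self, other):
        """Return the pose of `other` when it is expressed in this pose's frame."""
        moved = mat_vec(self.rotation, other.position)
        return Pose(mat_mul(self.rotation, other.rotation),
                    [p + m for p, m in zip(self.position, moved)])

    def inverse(self):
        """Return the pose that undoes this one."""
        rt = [list(row) for row in zip(*self.rotation)]  # transpose of a rotation
        return Pose(rt, [-x for x in mat_vec(rt, self.position)])

class AlignedObject:
    """Hypothetical virtual object locked to a tracked proxy device."""

    def __init__(self, proxy_pose, object_pose):
        # Record where the object sits relative to the proxy at the moment
        # the user asks for the fixed alignment.
        self.offset = proxy_pose.inverse().compose(object_pose)

    def update(self, proxy_pose):
        # Each time tracking reports a new proxy pose, re-derive the object's
        # pose so that it moves and turns with the proxy.
        return proxy_pose.compose(self.offset)

# The virtual iPad starts 10 cm to the side of the slate the user is holding.
slate = Pose(rot_z(0), [0.0, 0.0, 0.0])
ipad = AlignedObject(slate, Pose(rot_z(0), [0.1, 0.0, 0.0]))

# The user carries the slate across the room and turns it a quarter turn.
new_ipad_pose = ipad.update(Pose(rot_z(90), [1.0, 2.0, 0.0]))
```

The design choice here is to store the object's pose relative to the proxy once, rather than copying the proxy's pose directly, so that whatever gap existed when the alignment was requested is preserved as the proxy moves.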

"The method involves presenting content including a virtual object and a virtual representation of a proxy device physically unassociated with an electronic device on a display of the electronic device," it continues.

So to someone standing next to you, you're waving about a blank slate. But to you, it is an iPad Pro with all the controls and displays that implies. In this sense, the idea is similar to the recent one about privacy screens.

The invention is credited to Austin C. Germer and Ryan S. Burgoyne, the latter of whom was recently listed on a patent application covering gaze-based user interactions.

William Gallagher

3 Comments

The concept is great, I'm looking forward to seeing what Apple comes up with.

That said when they are for sale to the general public, there is one thing that is inevitable.

I'd predict it will be within weeks, likely less than a month, the first person will crash their car because they were playing a game on the glasses while driving.

Google R&D photocopiers going full blast.

Cool! Hope I’m alive to see it.