Apple AR hardware may move lenses or external cameras to provide an "Apple Glass" wearer with the right focus for precisely what they're looking at.

Apple holds many earlier patents on gaze tracking, covering privacy, detecting whether you're distracted, and telling when you're reading a notification. A couple even deal solely with speeding up Apple AR by rendering only what you're looking at, or by lowering the resolution of everything else to save power.

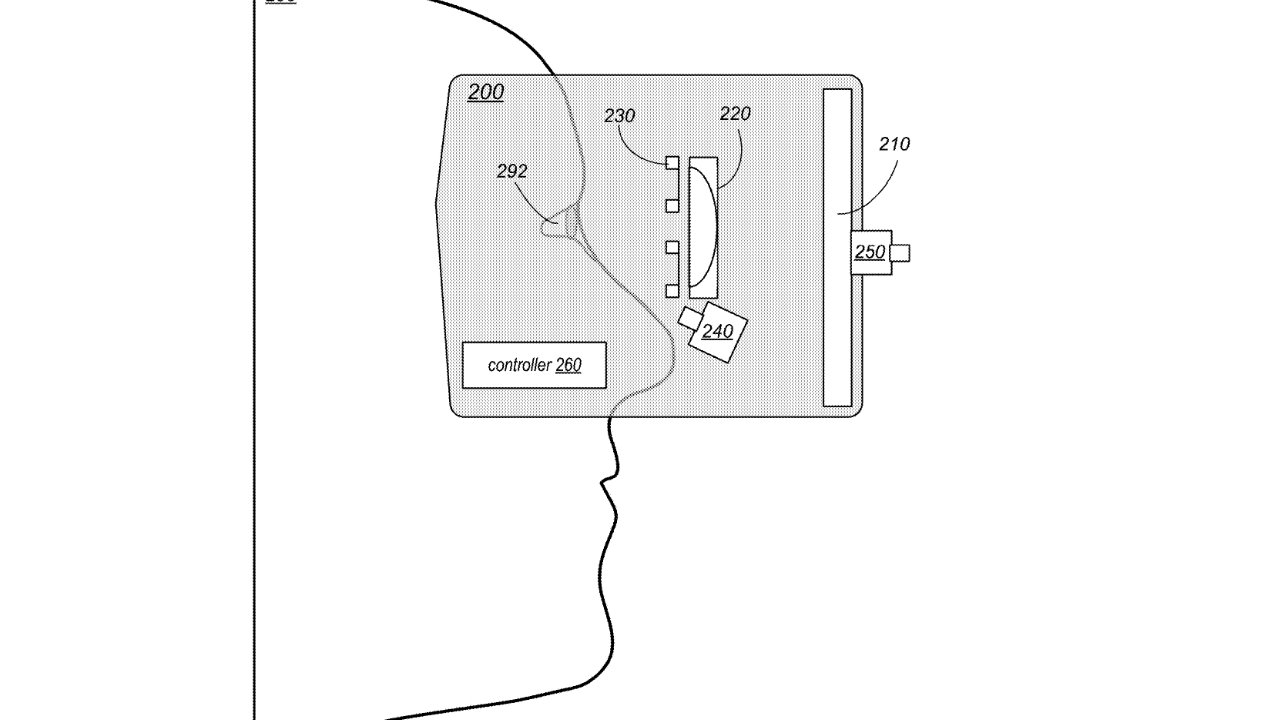

Now, though, "Focusing for virtual and augmented reality systems" is a newly granted patent that extends gaze tracking further. It builds on an earlier patent application regarding self-adjusting lenses, and adds the potential for your gaze to control external cameras.

"For AR applications," says Apple, "gaze tracking information may be used to direct external cameras to focus in the direction of the user's gaze so that the cameras focus on objects at which the user is looking."

"For AR or VR applications, the gaze tracking information may be used to adjust the focus of the eye lenses so that the virtual content that the user is currently looking at on the display has the proper vergence to match the convergence of the user's eyes," it continues.
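To make the geometry behind that quote concrete, here is a minimal sketch of how a vergence angle maps to a focus distance. It is not taken from the patent: it assumes the eyes converge symmetrically on a point straight ahead, and the function names and the 64 mm interpupillary distance are illustrative assumptions only.

```python
import math


def vergence_distance_m(ipd_m: float, vergence_angle_rad: float) -> float:
    """Distance to the fixation point, assuming symmetric convergence.

    ipd_m is the interpupillary distance in meters (hypothetical input);
    vergence_angle_rad is the angle between the two eyes' lines of sight.
    """
    if vergence_angle_rad <= 0:
        # Parallel lines of sight: the user is effectively looking at infinity.
        return math.inf
    return (ipd_m / 2) / math.tan(vergence_angle_rad / 2)


def focus_demand_diopters(distance_m: float) -> float:
    """Optical focus demand for a target at distance_m (1 / meters)."""
    return 0.0 if math.isinf(distance_m) else 1.0 / distance_m


# Illustrative values only: a 64 mm IPD and a target half a meter away.
ipd = 0.064
angle = 2 * math.atan((ipd / 2) / 0.5)
distance = vergence_distance_m(ipd, angle)
print(round(distance, 3), round(focus_demand_diopters(distance), 3))
```

Under these assumptions, a system that knows where the eyes converge could set the eye lenses so that the displayed content appears at that same distance.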

So this patent is not about how to track gaze; it's about what to do with the information once it's been tracked. A user could be in a virtual conference, for instance, where the actual space is being relayed to them via a series of cameras.

As they look to their left, the cameras in that physical room could be automatically told to refocus or even reposition to provide the best view. "The external cameras may include an autofocus mechanism that allows the cameras to automatically focus on objects or surfaces in the environment," says Apple.

It's not just about providing the optimum view, though. It's also seemingly about fixing problems Apple must have found in its testing of head-mounted displays (HMDs), such as "Apple Glass."

"In conventional AR HMDs, the autofocus mechanism may focus on something that the user is not looking at," says Apple. That's presumably in HMDs that do not feature gaze tracking, although Apple does not specify.

It only says that gaze tracking can "direct the autofocus mechanism of the external cameras to focus in the direction of the user's gaze so that the external cameras focus on objects in the environment at which the user is currently looking."
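As a rough illustration of the difference, the sketch below picks a focus distance from the part of a scene the user is looking at rather than from a fixed region. The patent does not describe an algorithm at this level; the depth map, the `focus_depth_at_gaze` name, and the convention that a depth of 0 means "no reading" are all assumptions made for the example.

```python
from statistics import median


def focus_depth_at_gaze(depth_map, gaze_row, gaze_col, radius=1):
    """Return the median depth in a small window around the gaze point.

    depth_map is a list of rows of depths in meters (hypothetical format);
    entries of 0 are treated as missing readings and skipped.
    Returns None if the window holds no valid readings.
    """
    rows, cols = len(depth_map), len(depth_map[0])
    samples = []
    for r in range(max(0, gaze_row - radius), min(rows, gaze_row + radius + 1)):
        for c in range(max(0, gaze_col - radius), min(cols, gaze_col + radius + 1)):
            depth = depth_map[r][c]
            if depth > 0:
                samples.append(depth)
    return median(samples) if samples else None


# Illustrative scene: a near object on the left, a far wall on the right.
scene = [[0.5, 0.5, 0.5, 3.0, 3.0, 3.0] for _ in range(4)]
near_focus = focus_depth_at_gaze(scene, 1, 1)  # user looks left
far_focus = focus_depth_at_gaze(scene, 1, 4)   # user looks right
```

The median is used here so that a few stray readings near an object's edge do not pull the focus away from what the user is actually looking at.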

Ultimately, the aim is to always show the user what they are choosing to look at. This patent also covers how that's still the case when what they're watching is a recording.

"In some embodiments, adjusting focus of the eye lenses may be applied during playback of recorded video," says Apple. "Depth information may be recorded with the video, or may be derived from the computer graphics."

This patent is credited solely to D. Amnon Silverstein, whose previous related work includes a patent on autofocusing images using motion detection.


William Gallagher
