The long-rumored "Apple Glass" could provide digital images in AR that appear to be completely solid when overlaying the view of the environment, by selectively blocking elements of the screen to stop light from passing through.

The core idea of augmented reality is to overlay a digital image on a view of the user's local environment. This can be achieved in a few ways, such as the traditional display-based approach, in which graphics are overlaid on a camera view, as ARKit applications do on the iPhone.

The other approach is to place a transparent display in front of the user's eyes and show the AR graphics on it. This gives the user an unencumbered view of their surroundings, along with the added graphics.

However, this second technique has a downside: because the display itself is completely transparent, displayed images always have some level of transparency. This breaks the illusion that the digitally created, superimposed object is part of the user's local environment.

The camera-based version doesn't have this issue, as the user only sees the rendered composite of the digital content and the camera feed, not an unblocked real-world view.
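The difference between the two approaches can be illustrated with a toy per-pixel model. This is only a sketch under simplifying assumptions, not anything taken from the patent: brightness is a single value from 0.0 to 1.0, a see-through display is assumed to add its light to the scene behind it, and the camera-based composite is assumed to use a standard "over" blend. The function names are hypothetical.

```python
def see_through_pixel(background: float, graphic: float) -> float:
    """Transparent display (assumed model): display light adds to scene light."""
    return min(1.0, background + graphic)


def camera_composite_pixel(background: float, graphic: float, alpha: float) -> float:
    """Camera-based AR (assumed model): 'over' blend of graphic onto the camera feed."""
    return alpha * graphic + (1.0 - alpha) * background


if __name__ == "__main__":
    bright_scene = 0.75   # e.g. a sunlit wall behind the virtual object
    dark_graphic = 0.125  # a dark virtual object

    # On the see-through display the wall still dominates the result.
    print(see_through_pixel(bright_scene, dark_graphic))
    # In the camera composite a fully opaque graphic replaces the wall.
    print(camera_composite_pixel(bright_scene, dark_graphic, alpha=1.0))
```

Under these assumptions, a dark graphic over a bright scene is washed out on the see-through display, while the camera composite can show it as fully solid.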

In a patent granted on Tuesday by the U.S. Patent and Trademark Office titled "Head-mounted device with an adjustable opacity system," Apple aims to selectively block light from reaching the user to solve the problem.

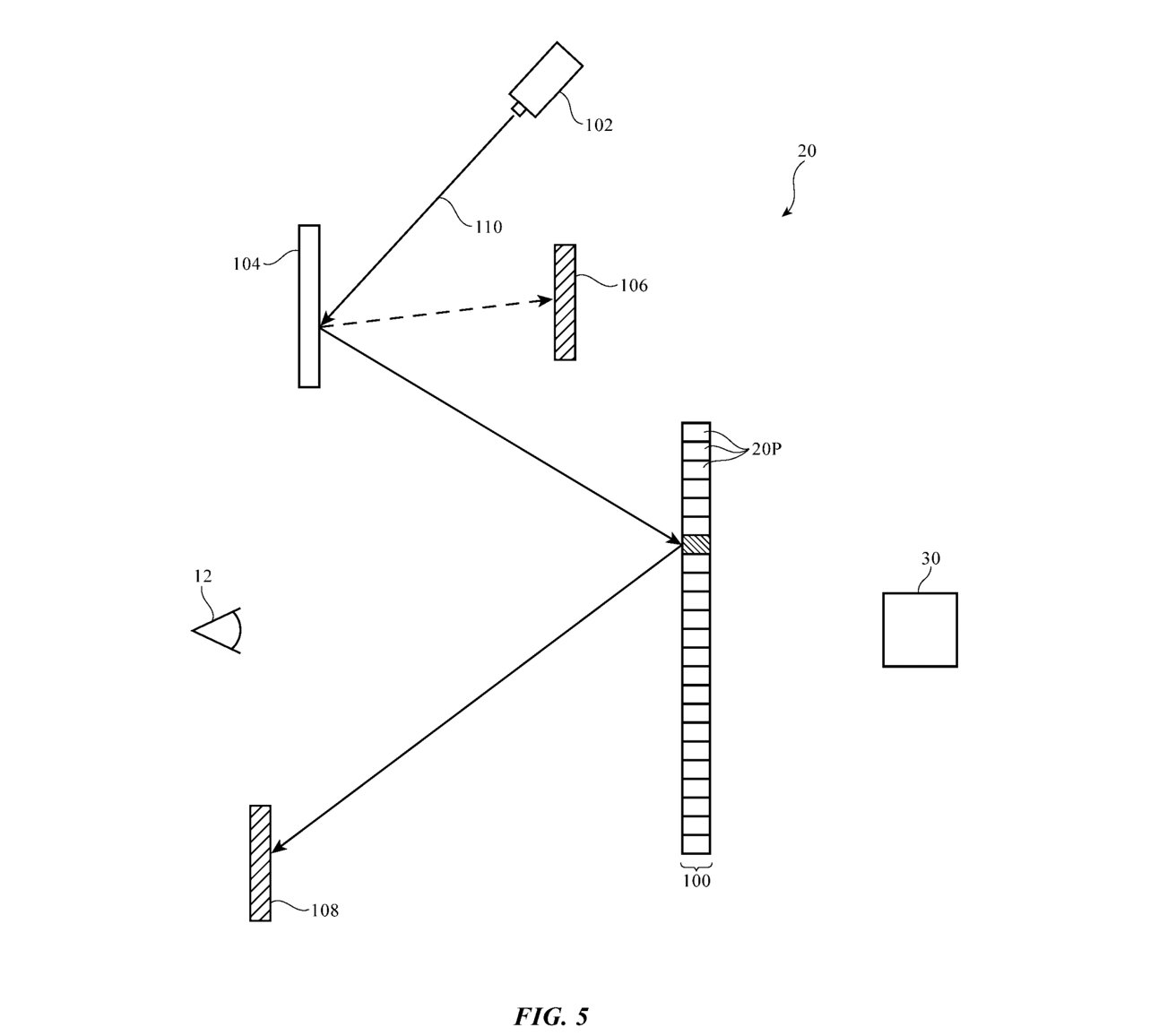

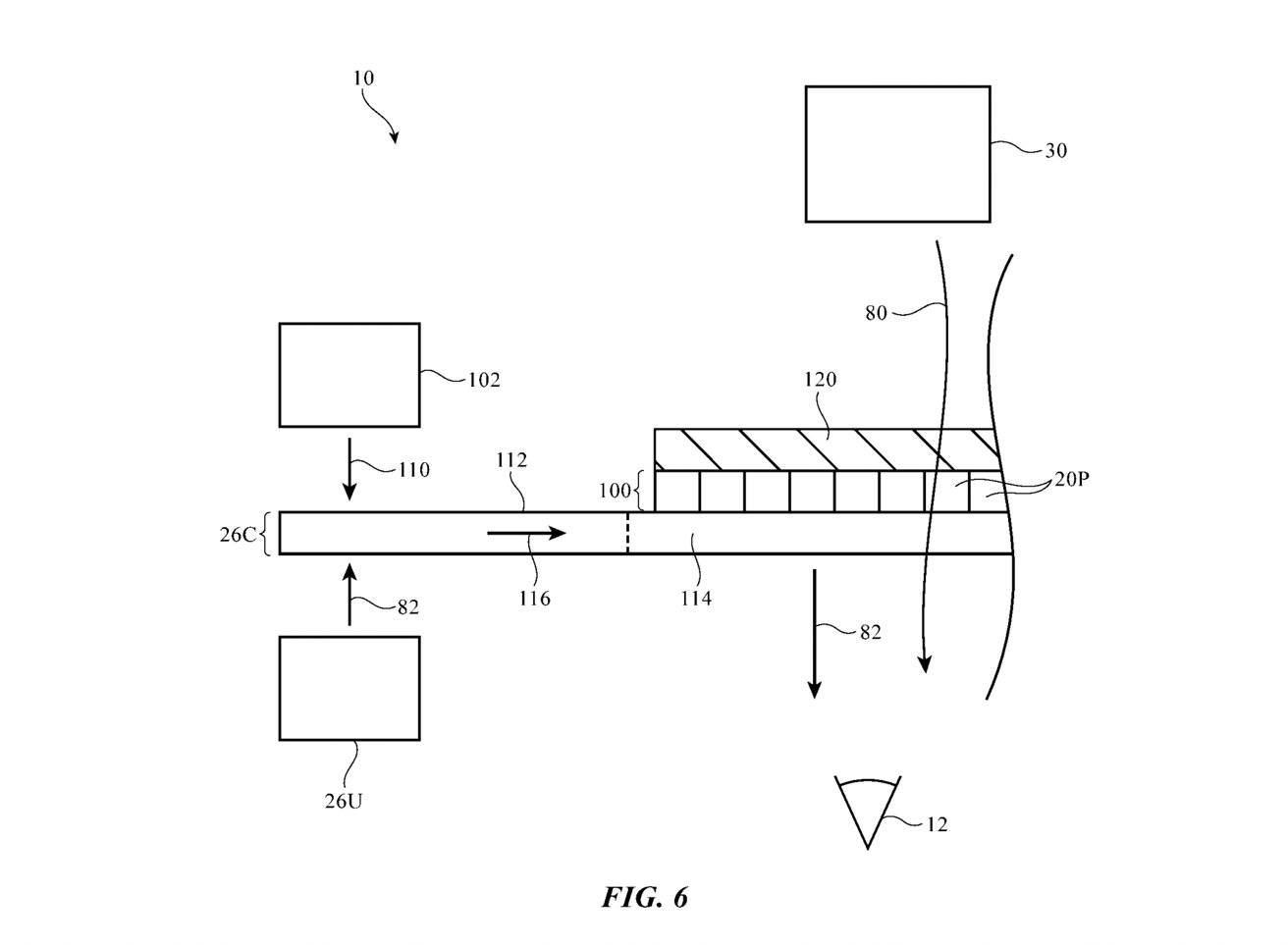

Apple's solution continues to use a transparent display, which combines a display panel with an optical coupler. The coupler, rather than the display panel alone, is primarily what presents the rendered digital image to the user.

Overlapping the optical coupler is the adjustable opacity system, which can use a photochromic layer and a light source. Light from this secondary source, which could be ultraviolet, is directed at selected areas of the photochromic layer, which in turn varies the opacity of those areas.

The more UV light the system shines onto the photochromic layer, the less visible light it lets through to the user's eyes.
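As a rough illustration of that relationship, the sketch below models a single pixel behind the photochromic layer. The linear response (transmission falls from 1.0 to 0.0 as the UV level rises from 0.0 to 1.0) is an assumption made for the example and is not a figure from the patent. The names `transmission` and `perceived_pixel` are hypothetical.

```python
def transmission(uv_level: float) -> float:
    """Fraction of visible light passed by the layer (assumed linear response)."""
    uv = min(1.0, max(0.0, uv_level))  # clamp the UV level to the range 0..1
    return 1.0 - uv


def perceived_pixel(background: float, graphic: float, uv_level: float) -> float:
    """Scene light dimmed by the layer, plus the light of the displayed graphic."""
    return min(1.0, transmission(uv_level) * background + graphic)


if __name__ == "__main__":
    scene, graphic = 0.75, 0.125
    for uv in (0.0, 0.5, 1.0):
        # More UV on this spot means less of the scene shows through the graphic.
        print(uv, perceived_pixel(scene, graphic, uv))
```

With no UV light the scene shows through the graphic unchanged, and with full UV light only the graphic remains, which is the "solid" appearance the patent is aiming for.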

Apple further suggests that the system could use two optical couplers, with one managing the rendered content while the other handles the redirection of UV light to sections of the layer. The adjustable opacity system may also use filters to block direct light from the source, as well as a heating element for the layer itself.

UV light-absorbing material may also be used in the headset to stop stray light from reaching the user's eyes.

The patent was originally filed on March 13, 2019, and lists its inventors as David A. Kalinowski, Hyungryul Choi, and Jae Hwang Lee.

Apple files numerous patent applications on a weekly basis. While the existence of a patent indicates areas of interest for Apple's research and development teams, it doesn't guarantee that the technology will appear in a future product or service.

Apple is believed to be working on its own AR or VR headset or smart glasses, under the name "Apple Glass." On Tuesday, analyst Ming-Chi Kuo forecast Apple would launch its head-mounted product in the second quarter of 2022.

Naturally, Apple has numerous patents under its belt relating to head-mounted display technology.

In 2014 and 2016, it received patents for variations of a "transparent electronic device" that could overlay text and images over the real-world view, though not necessarily as part of a headset.

There has also been a patent for a stereoscopic headset from March 2021, using lenticular lenses on an OLED screen, while "Split-screen driving of electronic device displays" from April 2021 points to using an iPhone or iPad to display an AR or VR view without the aid of a headset.


Malcolm Owen

2 Comments

The eventual design of AR/VR headsets will be entirely opaque all the time. With cameras and displays that can exceed the quality of human vision, there is no need to directly pass light through them. A much simpler design is for the cameras and AI to recognize nearby objects in the real world and present them to the viewer in the virtual world. This will prevent people from walking into things or smashing their hands into tables. The Oculus Quest 2 can now recognize your couch and desk and display them in the virtual world so you can stand up and sit down safely. It does the same for the hand controllers so if you leave one on a table, you can pick it up again even with the headset on.

By making the headset entirely opaque and using the cameras to show elements of the real world on the screen, you get true augmented vision. The viewer could walk around in total darkness and see the world as if it was in broad daylight. They could zoom in to see detail of distant objects. They could instantly rewind reality and see something they missed even if it was behind them at the time. Don't like the weather, season or time of day? Just change it. Be somewhere else on the planet entirely while walking down the street. The possibilities are endless.

This, like the Apple car, isn't happening.