iPhone vs Android: Two different photography and machine learning approaches

A controversy over Samsung's phone cameras has renewed the conversation surrounding computational photography, and highlights how Samsung's approach differs from Apple's in iOS.

It isn't a big secret that Apple relies upon advanced algorithms and computational photography for nearly all of its iPhone camera features. However, users are beginning to ask where to draw the line between these algorithms and something more intrusive, like post-capture pixel alteration.

In this piece, we will examine the controversy surrounding Samsung's moon photos, how the company addresses computational photography, and what this means for Apple and its competitors going forward.

Computational photography

Computational photography isn't a new concept. It became necessary as people wanted more performance from their tiny smartphone cameras.

The basic idea is that computers can perform billions of operations in a moment, like after a camera shutter press, to replace the need for basic edits or apply more advanced corrections. The more we can program the computer to do after the shutter press, the better the photo can be.

This started with Apple's dual camera system on iPhone 7 Plus. Other photographic innovations before then, like Live Photos, could be considered computational photography, but Portrait Mode was the turning point for Apple.

Apple introduced Portrait Mode in 2016. The feature used depth data from the two cameras on the iPhone 7 Plus to create an artificial bokeh. The company claimed it was possible thanks to the dual camera system and advanced image signal processor, which conducted 100 billion operations per photo.
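To make the idea of depth-driven blur concrete, here is a minimal sketch in Python. It is not Apple's implementation, which has not been published in this form. The function name `synthetic_bokeh`, the one-dimensional "image," the `tolerance` and `radius` parameters, and the simple box blur are all assumptions chosen for illustration. The only point is that a depth value per pixel decides what stays sharp and what gets softened.

```python
def synthetic_bokeh(pixels, depth, focus_depth, tolerance=0.5, radius=1):
    """Blur pixels whose depth is far from focus_depth; keep the rest sharp.

    Hypothetical illustration: `pixels` is one row of brightness values and
    `depth` holds an estimated distance for each pixel.
    """
    out = []
    for i, value in enumerate(pixels):
        if abs(depth[i] - focus_depth) <= tolerance:
            # Subject plane: leave the captured value untouched.
            out.append(value)
        else:
            # Background: replace with the average of neighboring pixels.
            lo = max(0, i - radius)
            hi = min(len(pixels), i + radius + 1)
            window = pixels[lo:hi]
            out.append(sum(window) / len(window))
    return out


row = [10, 200, 10, 200, 10, 200]        # high-contrast detail
depth = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]   # first half near, second half far
result = synthetic_bokeh(row, depth, focus_depth=1.0)
```

In this toy example, the first three values pass through unchanged while the last three are smoothed. Every output value is still computed from pixels the sensor recorded.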

Needless to say, this wasn't perfect, but it was a step into the future of photography. Camera technology would continue to adapt to the smartphone form factor, chips would get faster, and image sensors would get more powerful per square inch.

In 2023, it isn't unheard of to shoot cinematically blurred video using advanced computation engines with mixed results. Computational photography is everywhere, from the Photonic Engine to Photographic Styles — an algorithm processes every photo taken on iPhone. Yes, even ProRAW.

This was all necessitated by people's desire to capture their life with the device they had on hand — their iPhone. Dedicated cameras have physics on their side with large sensors and giant lenses, but the average person doesn't want to spend hundreds or thousands of dollars on a dedicated rig.

So, computational photography has stepped in to enhance what smartphones' tiny sensors can do. Advanced algorithms built on large databases tell the image signal processor how to capture the ideal image, process noise, and expose a subject.

However, there is a big difference between using computational photography to enhance the camera's capabilities and altering an image based on data that the sensor never captured.

Samsung's moonshot

To be clear: Apple is using machine learning models — or "AI, Artificial Intelligence" for those using the poorly coined popular new buzzword — for computational photography. The algorithms provide information about controlling multi-image captures to produce the best results or create depth-of-field profiles.

The image processor analyzes skin tone, skies, plants, pets, and more to provide proper coloration and exposure, not pixel replacement. It isn't looking for objects, like the moon, to provide specific enhancements based on information outside of the camera sensor.

We're pointing this out because those debating Samsung's moon photos have used Apple's computational photography as an example of how other companies perform these photographic alterations. That simply isn't the case.

Samsung has documented how its phones, since the Galaxy S10, have processed images using object recognition and alteration. The Scene Optimizer began recognizing the moon with the Galaxy S21.

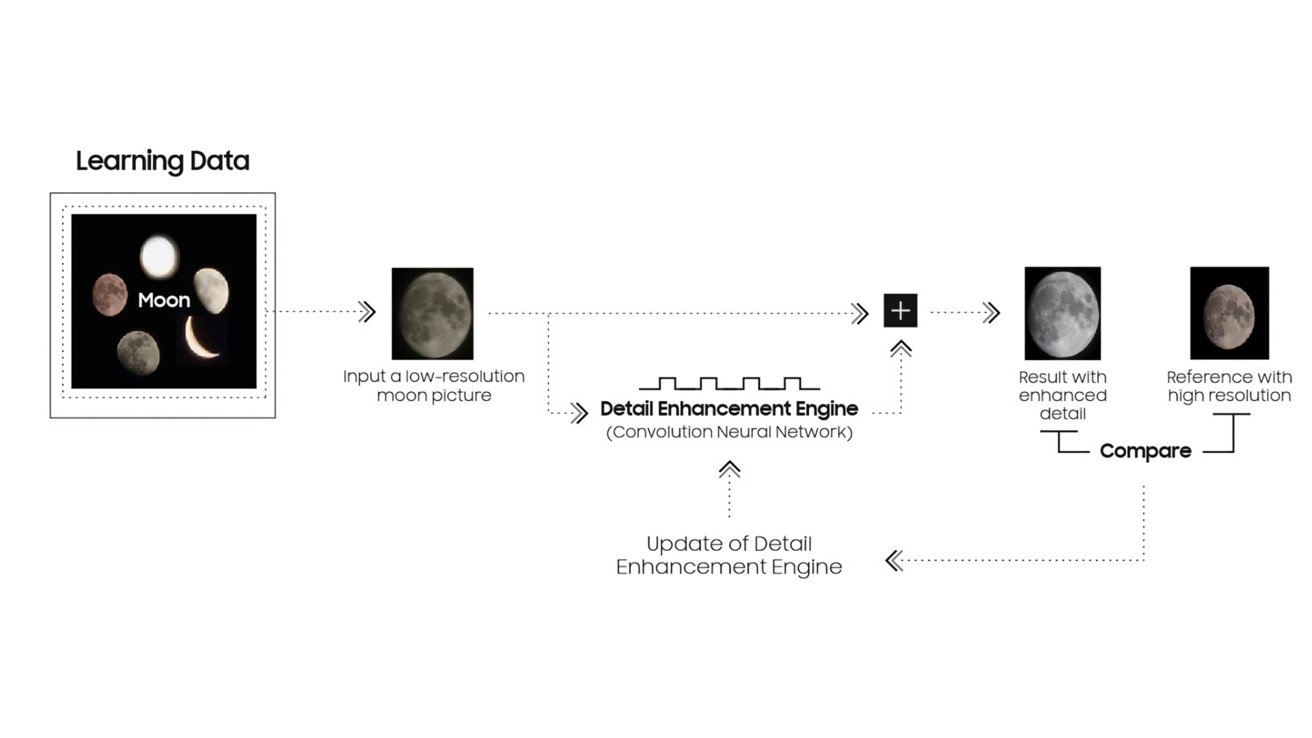

As the recently published document describes, "AI" recognizes the moon through learned data, and the detail improvement engine function is applied to make the photo clearer with multi-frame synthesis and machine learning.

Basically, Samsung devices will recognize an unobscured moon and then use other high-resolution images and data about the moon to synthesize a better output. The result isn't an image captured by the device's camera but something new and fabricated.

Overall, this system is clever because the moon looks the same no matter where it is viewed on Earth. The only thing that changes is the color of the light reflected from its surface and the phase of the moon itself. Enhancing the moon in a photo will always be a straightforward calculation.

Both Samsung and Apple devices take a multi-photo exposure for advanced computations. Both analyze multiple captured images for the best portion of each and fuse them into one superior image. However, Samsung adds an additional step for recognized objects like the moon, which introduces new data from other high-resolution moon images to correct the moon in the final captured image.
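The distinction can be sketched in a few lines of Python. This is not either company's pipeline. Simple averaging stands in for multi-frame fusion, and the `blend_reference` step, its `weight` parameter, and the sample values are hypothetical stand-ins for the extra object-specific step described above.

```python
def fuse_frames(frames):
    """Average aligned frames pixel by pixel.

    Every output value is derived only from data the sensor captured.
    """
    count = len(frames)
    return [sum(values) / count for values in zip(*frames)]


def blend_reference(fused, reference, weight):
    """Hypothetical extra step: mix in detail from an outside reference image."""
    return [(1 - weight) * f + weight * r for f, r in zip(fused, reference)]


# Three noisy captures of the same three pixels.
frames = [[100, 102, 98], [104, 100, 96], [96, 98, 100]]
fused = fuse_frames(frames)

# Values that never came from this camera (an assumed reference texture).
reference = [120, 80, 98]
altered = blend_reference(fused, reference, weight=0.5)
```

The fused result smooths out noise using only the captured frames. The altered result contains contrast between the first two pixels that none of the three captures recorded, which is the line the debate is about.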

This isn't necessarily a bad thing. It just is something Samsung hasn't made clear in its advertising or product marketing, which may lead to customer confusion.

The problem with this process, and the reason a debate exists, is how this affects the future of photography.

Long story short, the final image doesn't represent what the sensor detected and the algorithm processed. It represents an idealized version of what might be possible but isn't because the camera sensor and lens are too small.

The impending battle for realism

From our point of view, the key tenet of iPhone photography has always been realism and accuracy. If there is a perfect middle in saturation, sharpness, and exposure, Apple has trended close to center over the past decade, even if it hasn't always remained perfectly consistent.

We acknowledge that photography is incredibly subjective, but it seems that Android photography, namely Samsung's, has leaned away from realism. Again, this is not necessarily a negative, but it is an opinionated choice made by Samsung that customers have to reckon with.

For the purposes of this discussion, Samsung and Pixel devices have slowly tilted away from that ideal realistic representational center. They are vying for more saturation, sharpness, or day-like exposure at night.

The example above shows how the Galaxy S22 Ultra favored more exposure and saturation, which led to a loss of detail. Innocent and opinionated choices, but the iPhone 13 Pro, in this case, goes home with a more detailed photo that can be edited later.

This difference in how photos are captured is set in the opinionated algorithms used by each device. As these algorithms advance, future photography decisions could lead to more opinionated choices that cannot be reversed later.

For example, when a phone changes how the moon appears using advanced algorithms without alerting the user, that image is forever altered to fit what Samsung thinks is ideal. Sure, users who know about the feature could turn it off, but most likely won't.

We're excited about the future of photography, but as photography enthusiasts, we hope it isn't so invisible. As with Apple's Portrait Mode, Live Photos, and other processing techniques, make it opt-in with obvious toggles. Also, make it reversible.

Tapping the shutter in a device's main camera app should take a representative photo of what the sensor sees. If the user wants more, let them choose to add it via toggles before the shot or by editing afterward.

For now, try taking photos of the night sky with nothing but your iPhone and a tripod. It works.

Why this matters

It is important to stress that there isn't any problem with replacing the ugly glowing ball in the sky with a proper moon, nor is there a problem with removing people or garbage (or garbage people) from a photo. However, the process needs to be controllable, toggleable, and visible to the user.

As algorithms advance, we will see more idealized and processed images from Android smartphones. The worst offenders will outright remove or replace objects without notice.

Apple will inevitably improve its on-device image processing and algorithms. But, based on how the company has approached photography so far, we expect it will do so with respect to the user's desire for realism.

Tribalism in the tech community has always caused debates to break out among users. Those have included Mac or PC, iPhone or Android, and soon, real or ideal photos.

We hope Apple continues to choose realism and user control over photos going forward. Giving a company complete opinionated control over what the user captures in a camera, down to altering images to match an ideal, doesn't seem like a future we want to be a part of.

Wesley Hilliard

20 Comments

I wonder if they also use past pictures of your friends and family to optimize new pictures of the same people.

I have to admit, in the comparison photo, the iPhone shot might be closer to reality but the Samsung shot looks more natural to me, and I think most people would prefer it, even if the average participant in the AI forum might not. It’s even less burned out on the tops of the barrels. I think a person’s eyes would adjust to the weird white balance in that room, and you’d end up seeing it more like the Samsung rendition.

You’re not quite understanding the line that’s being crossed here: Samsung is introducing foreign imagery to the shots you are taking. Apple is not. With Samsung, you cannot be sure that what you’re seeing is what YOU captured. This is a completely different class of modification vs what’s going on with Apple’s computational photography. Apple is enhancing or otherwise applying modifications to image features that already exist. Samsung is introducing image features your image MAY NEVER HAVE HAD.

I think people would be less concerned if the scene optimization were to be applied after the image was taken and presented as what the camera itself was capable of. Also, as others mentioned, there is a difference between enhancing an image with filters and replacing the content of an image. Again, if the system retained both versions and the enhanced version was done in post processing, I don't think this would be an issue. As it stands, it really misrepresents what the camera system in the Samsung phones is actually capable of.