Internal documents and anonymous sources leak details of Apple's internal ban on ChatGPT-like technology and the plans for its own Large Language Model.

Large Language Models have exploded in popularity in recent months, so it isn't a surprise to learn Apple could be working on a version for itself. However, these tools are unpredictable and tend to leak user data, so employees have been blocked from using competing tools.

A report from The Wall Street Journal details an internal Apple document restricting Apple employees from using ChatGPT, Bard, or similar Large Language Models (LLMs). Anonymous sources also shared that Apple is working on its own version of the technology, though no other details were revealed.

Other companies, like Amazon and Verizon, have also restricted the use of LLM tools internally. These tools rely on access to massive data stores and constantly train based on user behavior.

While users can turn off chat logging for products like ChatGPT, the software can break and leak data, leading companies like Apple to distrust the technology for fear of exposing proprietary data.

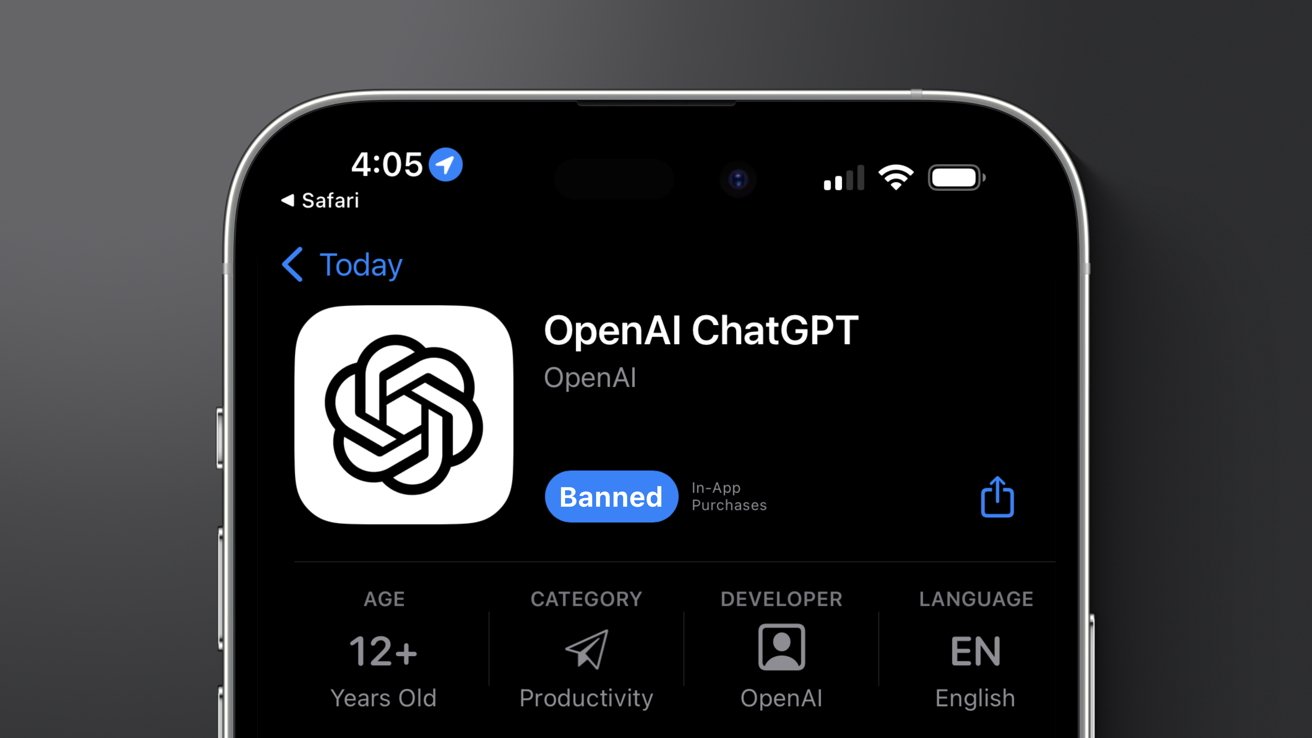

If you're not an Apple employee using a work iPhone, you can try the ChatGPT app, which just launched on the App Store. Look out, though: there are many imitation apps trying to scam users.

Apple and the "AI race"

Apple works on a lot of secret projects that never see the light of day. The existence of internal development of LLM technology at Apple isn't surprising, as it is a natural next step from its existing machine learning systems.

The buzzword "Artificial Intelligence" tends to be thrown around rather loosely when discussing LLMs, GPT, and generative AI. It's been said so many times, like during Google I/O, that it has lost most of its meaning.

Neural networks and machine learning are nothing new to Apple, despite pundit coverage suggesting Apple is somehow behind in the "AI" race. Siri's launch in 2011, followed by advances in computational photography on iPhone, shows Apple has always been involved in intelligent computing via machine learning. LLMs are an evolution of that same technology set, just on a much larger scale.

Some expect Apple to reveal some form of LLM during WWDC in June, but that may be too soon. The technology has only just begun to accelerate, and Apple tends to wait until its internal version matures before releasing such things.

Apple's fear of data leaks by LLMs isn't misplaced. However, as evidenced by Thursday's reporting, the data leaking problem still seems to suffer from the human element.

Wesley Hilliard

25 Comments

I worked on LOLITA (Large-scale, Object-based, Linguistic Interactor, Translator, and Analyser) at the University of Durham (UK) in the 1990s. Half my undergraduate education was in AI. Then.

LOLITA read the Wall Street Journal every day and answered questions on it. It could answer questions in Chinese as easily as English, without any translation. My role was to optimise the 'dialogue' module.

The difference between those efforts and the latest LLMs, and other buzzwords, is that LOLITA attempted to model a deep, semantic understanding of the material while current AI only uses big and now-abundant data to imitate a human response.

As ever in the UK, research funding ran out and no attempt was made to exploit the project commercially. In the US, I feel it would have been a little different and the world may have been different.

Neural net based AI only replicates all the mistakes and failings of the average human. AI can achieve so much more.

“Algorithmic Insights” would be a more accurate description of “AI”.

“Regurgitative AI” more accurate than “generative AI”.

I'm using the Bing chat feature almost every day now. It’s useful, but it’s more like the next step in search engines than the precursor to Mr. Data.

"despite pundit coverage suggesting Apple is somehow behind in the 'AI' race" is clearly written to suggest that you don't agree that Apple is behind in the AI race. Well, I'd love some of what you're drinking, because from my perspective, Siri is a woeful piece of garbage whilst I have had several helpful interactions with ChatGPT 4, Bard, and Bing. I also have several pieces of art on my wall that were generated by DALL·E 2.