New technologies are coming to virtual reality headsets, and terms are getting bandied about as if everybody already knows them. Here are most of the terms you're hearing, and what they mean.

Rumors suggest that Apple VR will have a 4K display for each eye, a powerful M-series processor, and extensive user tracking features. It could cost up to $2,000 in its first iteration.

The following terms apply to the headset market in general and have been used, at least in part, to describe upcoming features for Apple's headset. These terms are lightly technical, but aren't self-explanatory at first glance.

All the terms are listed alphabetically. We'll be adding new terms as they are introduced.

What is augmented reality?

Augmented reality, or AR, refers to a software overlay that sits on top of the user's view of the real world but operates largely independently of it. Consumers got their first taste of AR products through things like the Nintendo 3DS, PS Vita, and Google Glass.

Apple has pushed hard for augmented reality to become an integral part of its operating systems. However, it hasn't moved beyond being an interesting party trick, for the most part.

Users can use augmented reality on their iPhone or iPad today for various gaming experiences or art installations. Apple has even added an AR direction mode to Apple Maps, though it is limited and requires the user to hold their iPhone up in the air to view the information.

Augmented reality differs from mixed reality, which uses information from the real world to produce 3D overlays. AR is more passive, meaning it will look more like a heads-up display, not a fully-rendered video game.

For example, "Pokemon Go" will use a device's LiDAR to find a flat surface and place a Pokemon there to interact with. It doesn't change what kind of environment is viewed through the display — the software just adds the creature irrespective of what is surrounding it.

It is expected that Apple will use augmented reality in a limited fashion for its first virtual reality headset. It may allow for some real-world image passthrough to help users set up rather than offer full AR experiences.

What is eye tracking?

Eye tracking uses the exact movement of the user's eyes to animate an avatar or control movements. This is different from foveated rendering or field of view.

For example, a VR headset with eye tracking can be used to animate an avatar's eyes within a virtual meeting room. It enables a more realistic depiction of the user's emotion or viewing direction.

This is also useful for judging when a user is trying to see outside their field of view without turning their head. The VR experience can adjust to show that region or navigate a menu with minimal user interaction.
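As a rough illustration of the edge-of-view case, the sketch below checks whether a gaze direction is pushing against the limits of the display. The `Gaze` format, the 50-degree half field of view, and the 5-degree margin are all hypothetical values chosen for the example, not figures from any real headset.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Gaze:
    """Gaze direction in degrees relative to straight ahead (assumed format)."""
    yaw_deg: float    # negative = left, positive = right
    pitch_deg: float  # negative = down, positive = up


def edge_direction(gaze: Gaze, half_fov_deg: float = 50.0,
                   margin_deg: float = 5.0) -> Optional[str]:
    """Return the screen edge the user is straining toward, or None."""
    limit = half_fov_deg - margin_deg
    if gaze.yaw_deg > limit:
        return "right"
    if gaze.yaw_deg < -limit:
        return "left"
    if gaze.pitch_deg > limit:
        return "up"
    if gaze.pitch_deg < -limit:
        return "down"
    return None


print(edge_direction(Gaze(yaw_deg=48.0, pitch_deg=0.0)))   # right
print(edge_direction(Gaze(yaw_deg=10.0, pitch_deg=-5.0)))  # None
```

An app could use a result like "right" to pan the scene or scroll a menu in that direction without the user moving their head.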

What is extended reality or XR?

Extended reality is a blanket term that refers to multiple technologies that involve augmented reality, virtual reality, or mixed reality. Companies generally refer to XR when discussing wearable displays, but it can also be used to discuss on-device systems like iPhone's AR functionality.

Generally, all of these terms are used interchangeably, though sometimes incorrectly. Extended reality is a technology set, not the technology itself. Someone wouldn't be "in XR" like they would be "in AR" or "in VR," though it technically could be used that way.

What is field of view?

Field of view is the area visible in front of the user's eyes due to the available screen real estate. VR headsets generally have a set field of view that is very wide, so users feel like they are "inside" an environment rather than viewing a screen.

Since VR screens are so close to a user's eye, they are able to take up the entire range of vision. However, they are still physical objects that don't move, so a user can still perceive the edge of a VR screen if they look for it.

Generally, users will only use a small area of the screen directly in front of their eyes, with less detailed rendering occurring at the edges.

What is foveated rendering?

Foveated rendering enables the VR headset to control what is being rendered based on where the user is looking. This is different from eye tracking because the information is being used to control environment renders, not avatar renders.

Rather than render a scene with exact pixel-perfect precision across the entire VR display, foveated rendering ensures only the region in front of the eyes is fully rendered to save computing power.

Advanced algorithms determine where a user might look next to prepare other regions for more advanced rendering. This should lead to a seamless experience, though it is highly reliant on the processing power of the headset.
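The basic idea can be sketched in a few lines: split the display into tiles and lower the rendering resolution for tiles farther from the point the user is looking at. The function name, the pixels-per-degree figure, and the 5-degree and 15-degree thresholds below are illustrative assumptions, not how any particular headset does it.

```python
import math


def render_scale(tile_center, gaze_point, pixels_per_degree=20.0):
    """Pick a resolution scale for one screen tile from its distance to the gaze point.

    tile_center and gaze_point are (x, y) positions in pixels.
    """
    dx = tile_center[0] - gaze_point[0]
    dy = tile_center[1] - gaze_point[1]
    # Convert the pixel distance into an approximate viewing angle.
    eccentricity_deg = math.hypot(dx, dy) / pixels_per_degree
    if eccentricity_deg <= 5.0:
        return 1.0    # full detail where the eye is focused
    if eccentricity_deg <= 15.0:
        return 0.5    # half resolution in the near periphery
    return 0.25       # quarter resolution at the edges


gaze = (1000, 1000)
print(render_scale((1040, 1030), gaze))  # 1.0
print(render_scale((1200, 1000), gaze))  # 0.5
print(render_scale((0, 0), gaze))        # 0.25
```

A predictive system would run the same check against where the eye is expected to land next, not just where it is now.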

What is hand and body tracking?

Hand and body tracking is self-explanatory, though how it is accomplished differs from headset to headset. Early VR headsets relied upon colorful LEDs or multiple cameras in a room to track a user's movement. However, this has advanced to placing sensors directly into the headset.

Apple's VR headset is expected to operate independently from other products, so it will likely track the user's movements without an advanced external camera rig. Instead, the headset will be able to see the user via cameras or other sensors, like LiDAR.

Gyroscopes can also be used to track the user's head position, and outward-facing LiDAR can map the immediate area in a room to help avoid object collision.
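To give a sense of how a gyroscope reading becomes a head position, here is a minimal sketch that adds up rotation-speed samples over time to estimate which way the head is facing. The function name and sample values are made up for the example, and it only handles left-right rotation. Real headsets typically combine gyroscope data with other sensors because this kind of running total drifts over time.

```python
def integrate_yaw(rates_deg_per_s, dt_s):
    """Estimate head yaw in degrees from gyroscope rotation-rate samples.

    rates_deg_per_s: angular velocity readings, in degrees per second.
    dt_s: time between readings, in seconds.
    """
    yaw = 0.0
    for rate in rates_deg_per_s:
        # Each sample contributes (speed x time) degrees of rotation.
        yaw = (yaw + rate * dt_s) % 360.0
    return yaw


# Turning at 90 degrees per second for half a second (50 samples, 10 ms apart).
print(round(integrate_yaw([90.0] * 50, 0.01), 3))  # 45.0
```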

Controllers are also useful for tracking a user's hands. Rumors aren't clear on whether Apple will develop a VR controller or not. The company may be confident in its headset's ability to track hands without them.

What is haptic feedback?

Haptic feedback refers to a device's ability to react to software-based interactions with a physical response. Basically, think of the vibrating motors in a controller that tell you when a character has been damaged in a game.

Apple uses haptic feedback in the iPhone to simulate key presses or other functions. When implemented correctly, the user will notice haptic feedback as part of the interaction but disassociate it from a vibrating motor.

A VR headset could take advantage of haptic feedback to alert the user of impending object collision, or software could take advantage for simulating parts of a game. For example, haptic feedback might tell a user something is behind them.

Haptics are also useful in VR controllers. The controller might vibrate to indicate that the user's in-game sword has collided with an object or, perhaps, that they've highlighted a menu item with a virtual pointer.

What is LiDAR?

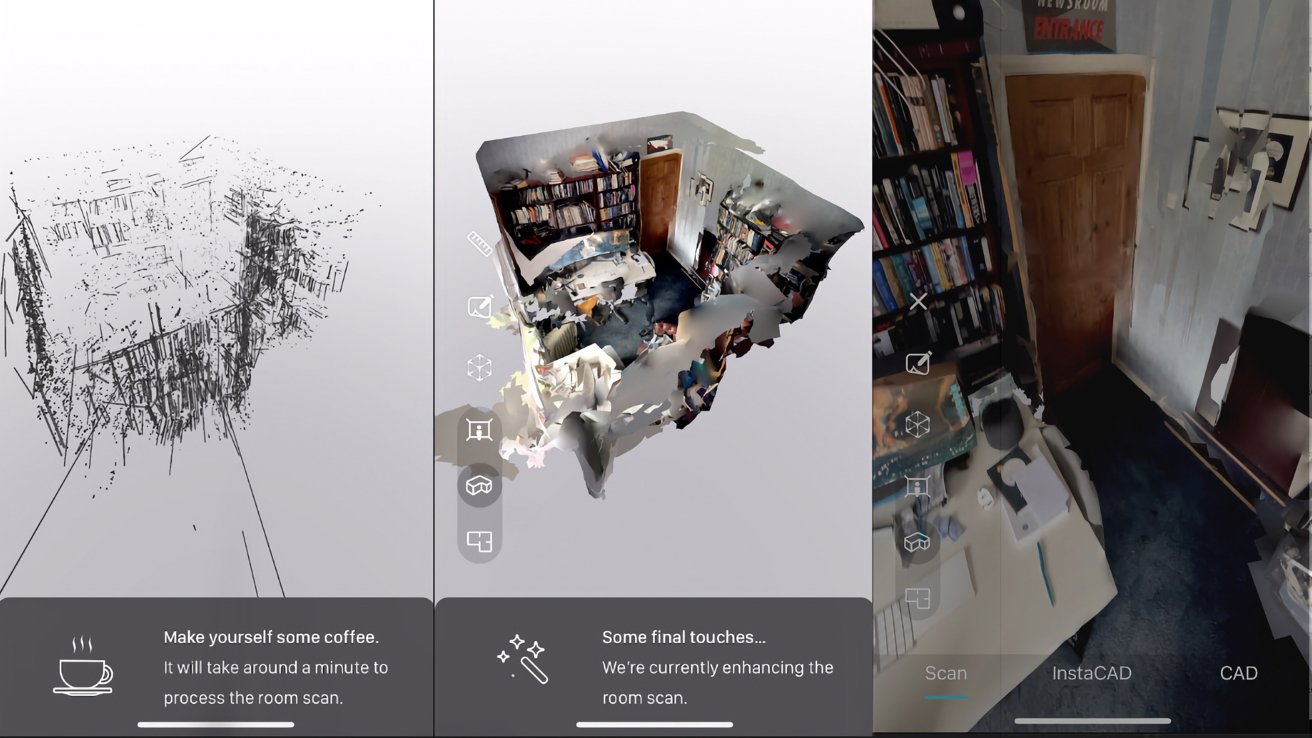

LiDAR, or light detection and ranging, is a sensor used to create 3D representations of a physical environment for software. On the iPhone or iPad Pro, LiDAR sensors have generally been used to quickly find flat surfaces for augmented reality experiences.

In a VR headset, LiDAR can be used to map a room, so the software knows where objects are. This information can be used to help the user avoid collisions while using the headset.
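LiDAR sensors generally measure distance by timing how long a pulse of light takes to bounce off an object and return. The sketch below turns round-trip times into distances and flags anything that is too close. The function names and the half-meter warning threshold are assumptions made for illustration, not part of any Apple API.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(seconds):
    """Distance to an object given the light pulse's round-trip time."""
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * seconds / 2.0


def collision_warnings(round_trip_times_s, warn_within_m=0.5):
    """Return (reading index, distance in meters) for each object that is too close."""
    warnings = []
    for index, seconds in enumerate(round_trip_times_s):
        distance = distance_from_round_trip(seconds)
        if distance < warn_within_m:
            warnings.append((index, distance))
    return warnings


# A 10-nanosecond round trip is about 1.5 meters; 2 nanoseconds is about 0.3 meters.
readings = [10e-9, 2e-9]
for index, distance in collision_warnings(readings):
    print(f"Reading {index}: object {distance:.2f} m away")
```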

More advanced applications of LiDAR could provide real-time information so a mixed reality experience can be rendered. Or, the sensors could be used to track a user's hands or body as they move.

What is mixed reality?

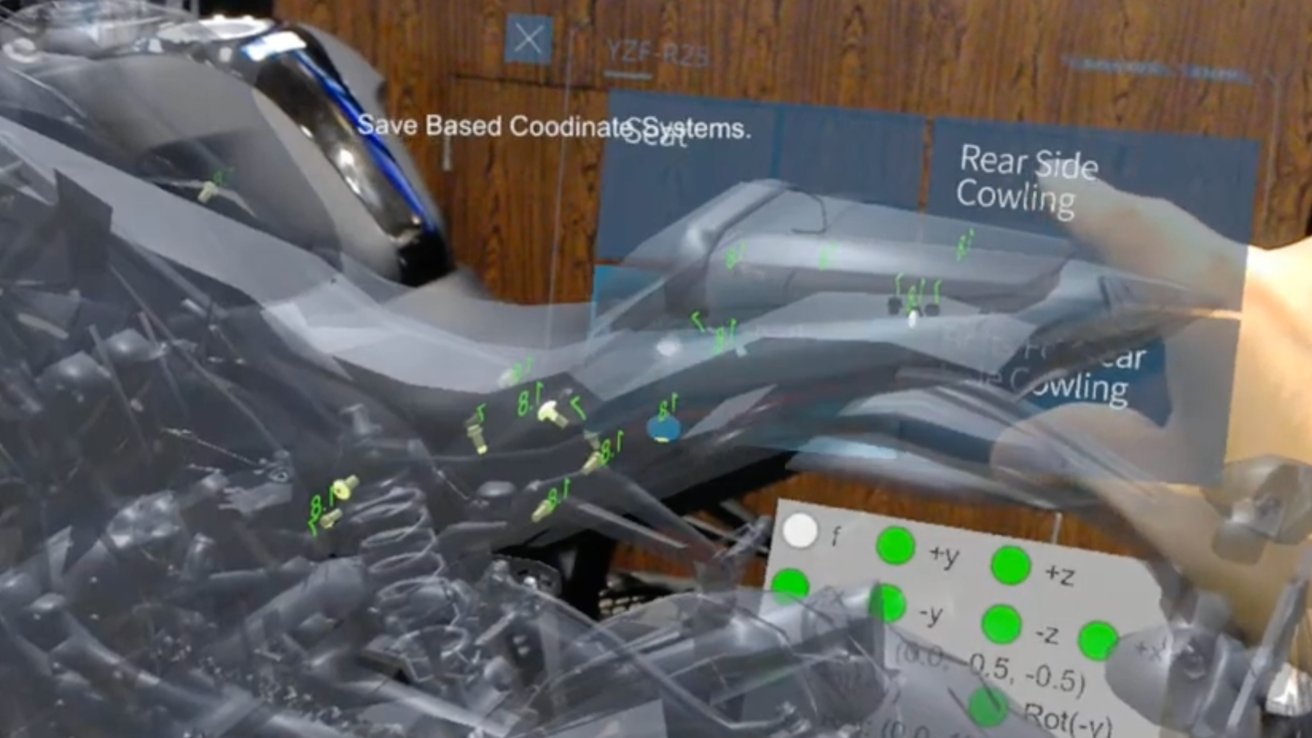

Mixed reality is a more advanced technology that combines the real world with software within the VR headset. Users would still be within a completely enclosed virtual reality experience, but real-world objects would be represented by virtual ones in real-time.

If augmented reality is just software overlaid on the real world, mixed reality is software that is aware of the real world. For example, mixed reality would see a motorcycle you're working on and point out exactly what parts you're adjusting in real-time, versus augmented reality just overlaying a list of steps.

It is different from virtual reality, too, since VR experiences are completely unaware of the outside world. You're placed inside a pre-rendered world for VR and don't see the world around you within the experience.

Mixed reality requires a lot more computational power than augmented reality or virtual reality because it is using both technologies in real time. Imagine converting your home into a jungle in virtual reality, but every piece of furniture is represented by a tree or bush in the simulation. It is a culmination of AR and VR tech.

What is Spatial Audio?

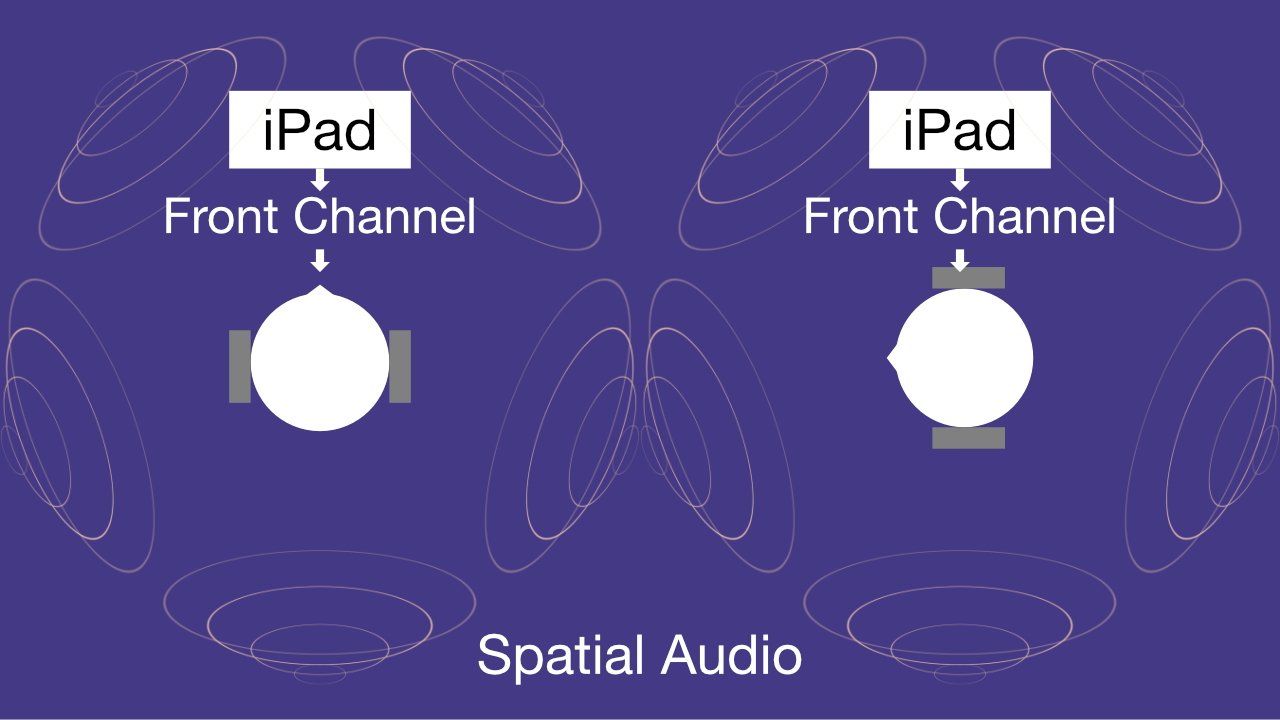

Spatial Audio is Apple's branded implementation of directional audio that accounts for sound origin, distance, and direction within a 3D space. So, music or other media that takes advantage of Spatial Audio will sound like it is coming from all around you, not just in front of you.

Apple uses Dolby's existing audio formats to recreate audio in a 3D space. Spatial Audio is different from standard Dolby Atmos tracks, for example, due to how Apple processes the files. It can use device gyroscopes to let the user "move" through the 3D audio space with head tracking.
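Head tracking can be pictured with a simplified example: as the head turns, a sound's direction relative to the listener changes, and the left and right volumes shift to match. The sketch below is a basic stereo pan under that assumption. It is not Apple's processing, which is far more involved, and the function names are hypothetical.

```python
import math


def relative_azimuth(source_deg, head_yaw_deg):
    """Angle of a sound source relative to where the head points, in [-180, 180)."""
    return (source_deg - head_yaw_deg + 180.0) % 360.0 - 180.0


def stereo_gains(azimuth_deg):
    """Constant-power (left, right) gains for a source angle.

    Negative angles are to the listener's left. Angles are clamped to
    [-90, 90], so sources behind the listener are not handled properly.
    """
    clamped = max(-90.0, min(90.0, azimuth_deg))
    pan = (clamped + 90.0) / 180.0 * (math.pi / 2.0)  # 0 = hard left
    return math.cos(pan), math.sin(pan)


# A sound straight ahead plays equally in both ears.
print(stereo_gains(relative_azimuth(0.0, 0.0)))

# Turn the head 90 degrees to the right and the same sound moves to the left ear.
print(stereo_gains(relative_azimuth(0.0, 90.0)))
```

The sound stays anchored in place while the listener moves, which is what gives the effect of "moving" through the audio space.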

Spatial Audio will likely play a pivotal role in virtual reality experiences. Modern Apple headphones like AirPods Pro 2 and AirPods Max can take advantage of the format, though it isn't clear how Apple will address audio in VR if a user doesn't have AirPods.

What is virtual reality?

Virtual reality, or VR, is a completely encapsulating software experience that doesn't account for the real world, nor is the real world visible when in use. A headset obscures the user's view entirely as software is shown on displays that are inches away from the user's eyes.

The software is shown in a pre-rendered state that is only vaguely aware of the user's location. Sensors on the headset can help alert the user to a potential collision with real-world objects, but the VR experience is not affected by this.

Virtual reality differs from augmented reality because it completely takes over the user's vision rather than overlaying information on the real world. Virtual reality becomes mixed reality if the headset is able to create software renders within the real world while accounting for surrounding objects.

What is xrOS or RealityOS?

Apple is rumored to be working on an operating system that is built for augmented reality and virtual reality. It would be used in its first VR headset and has been referred to as RealityOS or xrOS.

The operating system will likely take on aspects of Apple's other software, so users are instantly familiar with how to interact with software and menus. Apple has been pushing developers to build AR experiences already, so the step up to VR will likely be a small one.

While there are still hints to RealityOS in Apple's iOS, the final name is expected to be xrOS, which stands for "extended reality operating system." A single OS for AR and VR could bridge the gap from the Apple VR headset to a future set of AR glasses that have been dubbed "Apple Glass."

Wesley Hilliard

Malcolm Owen

William Gallagher

Christine McKee
