Adding to rumors of Apple's augmented reality ambitions, a report on Wednesday claims the company is working to bring unspecified AR features to its first-party camera app on iPhone.

Citing a single source, Business Insider reports Apple is looking to integrate limited AR functionality into the iOS Camera app. The project is said to involve new hires and technology from recent acquisitions in the AR/VR space.

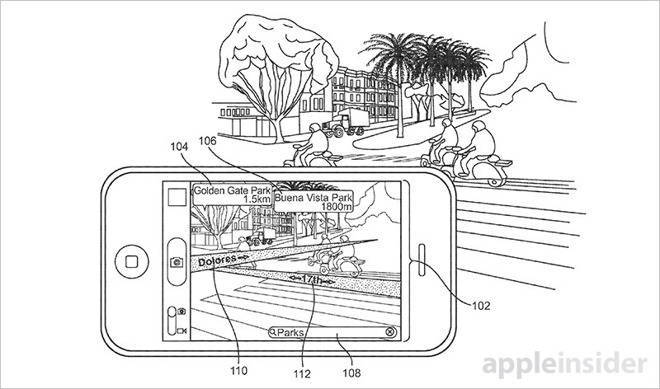

Though details are sparse, the report claims Apple's goal is to implement technology that will allow iPhone to recognize real-world objects. Object recognition is an important step toward realizing a working AR system. Modern AR solutions use imaging technology to overlay interactive information onto the physical world, a process that in some cases requires creating a digital map of a user's surroundings.

The report goes on to say Apple's AR implementation might be capable of recognizing and manipulating people's faces, a feature made popular by Snapchat. Facial recognition is already active in the iOS 10 Photos app, which employs the technology for picture management.

Once AR features roll out in the Camera app, Apple plans to make the technology available to developers through iOS APIs, the source said. The process would mirror the way Apple opened up once-closed APIs like Touch ID and, more recently, Siri.

Today's news comes on the heels of a report claiming Apple is working on a pair of smart glasses that could support AR functionality. Early indications suggest the project in its current form is merely a heads-up display along the lines of an advanced Google Glass device.

Whether the secret wearable initiative will progress to integrate augmented reality features remains unknown, though a recent hire hints at a product much more ambitious than a simple HUD.

According to his LinkedIn profile page, self-professed head-worn display specialist John Border joined Apple in September as a "senior optics manufacturing exploration engineer." Border is also an expert in plastic optics manufacturing, camera systems and image sensors, all necessities in creating a viable AR platform. Prior to taking a position at Apple, Border served as chief engineer at San Francisco-based Osterhout Design Group, a small firm that develops and markets AR-infused smart glasses.

Apple is known to be working on augmented reality solutions, though it is unclear what form factor an eventual product will take. CEO Tim Cook first confirmed Apple's interest in AR during a quarterly investors conference call in July, and has since reiterated the company's bullish stance on the budding technology on multiple occasions.

Beyond a growing pile of AR/VR patents, including IP covering an AR mapping system for iPhone granted last week, Apple has built out a team of industry specialists through strategic hires and segment acquisitions. Last year, the company purchased motion capture specialist Faceshift, machine learning and computer vision startup Perceptio, German AR firm Metaio and former Google collaborator Flyby Media.

Mikey Campbell


12 Comments

"Apple's AR implementation might be capable of recognizing and manipulating people's faces, a feature made popular by Snapchat"... Apple was doing this in Photo Booth ten years ago.

First I've heard of this John Border hire. Those glasses he was working on give me hope that Apple is going ahead with their own glasses project, with their own patents going back years.

3D video and/or stereo AR in glasses is going to be the biggest thing ever, bigger than the iPod anyway.

Portrait mode functions like a 3D scanner, using the two cameras to sense the location of objects in a field of depth. The blur feedback provides a means for the user to fiddle with the viewfinder until the effect is accurate. This is a form of training the algorithm, and it's quite likely that this feature has been training the software necessary to create good AR for Apple. Apple also have PrimeSense's technology, which utilises IR to help identify objects in space, but the applications of that seem more limited.

Added as a feature to the native Camera app? Meh. Should be a standalone app, IMO.

I don't yet understand how AR relates to taking pictures or capturing video. That is, unless the AR in the Camera app is cosmetic, for novelty, whereas a navigation AR feature, like the patent illustration shown in the article, would certainly not reside in the Camera app, I'd imagine.