"Great artists steal," in the sense that they recognize good existing ideas and build upon them. Now that Google has laid out its hand at IO17, detailing its plans for Android O and the search giant's various apps and services, what great ideas can Apple borrow for its own products? Here's a look.

Google Lens

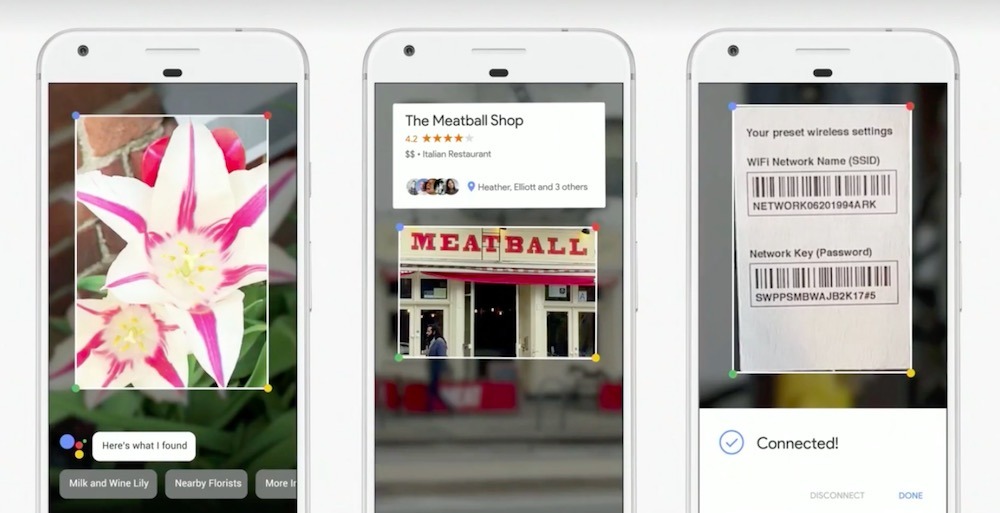

One of the flashiest things Google showed was Lens, an app that uses augmented reality, OCR and (2017's buzzword) machine learning to identify objects seen through the camera and provide contextual data about them. It makes for a cool demo, but is this app, built upon the very nifty Word Lens AR translation app Google acquired three years ago, really a core foundation of Google's Android development platform?

It's notable that Google's approach to AR (so far) seems to be self-contained: a feature, not a third-party platform. The company described Lens as a visual search engine (similar to its audio search approach in Assistant) rather than as a framework for building out third-party AR apps. Google's Android and ChromeOS are presented as open platforms, but the company is really built around proprietary services: search and paid placement advertising.

And while it was described as one of the coolest things shown at IO17, Google Lens also bears a lot of similarity to existing AR search apps, including Amazon's Firefly feature on the failed Fire Phone of 2014, which was effectively a search engine attached to a store.

There are a variety of other mobile apps that similarly identify products, language, locations or other data using the camera. Will Apple's approach to AR be similar?

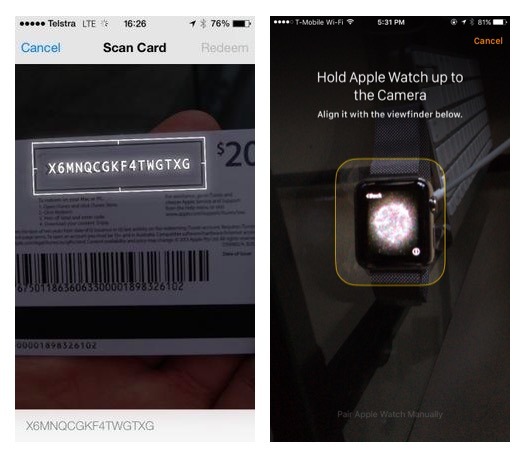

Apple already uses optical processing to do practical things such as redeem iTunes gift cards (since 2013), or pair an Apple Watch using the iPhone camera (since 2015). At WWDC, Apple will likely show off at least some of its new work in the area of AR and camera machine vision, related to a series of recent acquisitions.

But rather than only launching another AR app or Lens-like service, Apple could be expected to deliver a platform for third party AR apps, in the pattern of its previous efforts to turn Maps, iMessages, Siri, Apple Watch and Apple TV into development platforms, not just products. Apple's most lucrative Services business— the App Store— comes from building a development platform for iOS.

The next iPhone is also expected to include 3D imaging to support sophisticated AR. In addition to building its own first-party software for AR, Apple could use iOS 11 to present an alternative "camera app" aimed at working as an imaging sensor rather than at capturing photos or videos.

Such a "non-photos camera app" could expose an entire platform of AR/ML/OCR-oriented imaging apps in one place, letting you point your phone at anything and get a variety of interpretations from whatever AR apps you've installed, each leveraging all the sophisticated image signal processing logic Apple builds into its Application Processors.

Google Photos

Google Photos introduced a couple of interesting features (including the potentially rather dangerous idea of automatically sharing photos it thinks are of a given contact with that person), but it, too, is an app driving data to Google, not a development platform. Photos took up a sizable chunk of the company's keynote just to show off, essentially, face recognition. That's not really new.

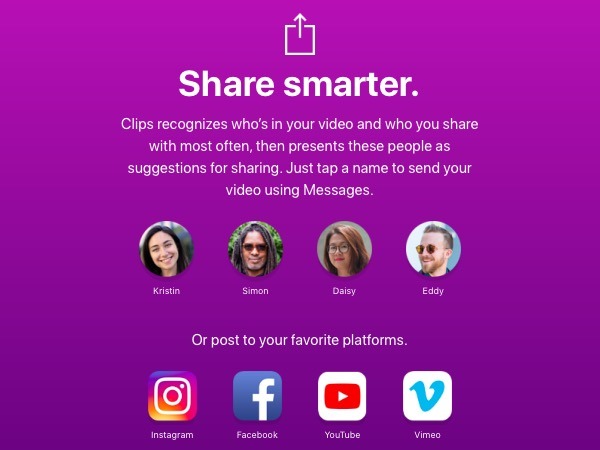

Automating the process of sharing photos based on what's in them is something that could be done using Workflow OS-level automation. It's also already something Apple does in its new Clips app, which recommends contacts to send your captures to based on face recognition. Apple didn't throw an extended event for Clips; it just made it available.

The design of Apple's Clips also suggests that it was created to be a platform: the filters, titles and jingles download when first requested. It's not hard to imagine Apple opening up ways for developers to build their own Core Image filters or to incorporate iMessage App Store Stickers. iOS could also extend FaceTime to apply the same OS-level, real time filters and Stickers, similar to what Snapchat has popularized on mobile over the last five years, and the way Apple's own iChat Theater did on the Mac ten years ago.

iChat Theater (and Photo Booth) both introduced some simple AR effects for applying real time video filters and green screen-like backgrounds, more than ten years ago. As iOS devices get more powerful, it's surprising that FaceTime hasn't already picked up where those apps left off.

Apple's lapse in promoting and incrementally building upon key Mac innovations, including Quartz Compositions and Core Image effects, has effectively led a generation of Millennials (and older pundits with short memories) to associate much of what Apple introduced ten years ago with Snapchat. Hopefully Clips signals an attempt to turn this around. Clips isn't "Apple's Snapchat"; it's the drop-dead simple, mobile version of Motion.

Google fluffs the cloud

Google Photos' new ability to effortlessly share albums with family members is something Apple hasn't shown off before (iCloud Photo Sharing in Apple's Photos is not automatic and has other limitations), but it's a technically minor feature that would be dribbled out as an x.1 update were it from any other app developer.

Apple could presumably use iCloud's new iOS 10 shared data mechanism, which powers shared Notes and the collaboration features of Pages, Keynote and Numbers, to selectively share specific photos or digital films with other iCloud users, including dynamically created albums built using face recognition or location data.

Ideally, this could be done using higher-quality photos, too (iCloud sharing scales pictures down significantly, although not quite to the same dire quality as Facebook). There's also reason to believe that iCloud could be extended to support better social sharing features.

Professionally printed photo books like Google's were shown off by Steve Jobs back in the days of MobileMe, and Jobs hasn't been able to present on stage since 2011, more than half a decade ago. You want applause for that in 2017, Google? Seriously.

Along the same lines, Google's Jobs, an app that searches for employment listings, is also something you might not need to go over in detail in front of thousands of developers and live stream to a global audience. Google said its keynote would be 90 minutes, then spent two hours going on about routine apps. That just looks sloppy. It also looks like the company didn't really have much to show, so it padded the keynote with basic fluff.

VPS: the hot new tech for shopping in retail stores

Another idea Google presented was "VPS," an indoor alternative to GPS, based on Google's Project Tango work in AR to identify location from visual cues using the camera. Despite dancing around with Tango since 2012, Google is only just now outlining an application of it as a way for smartphone users to be guided to a particular product shelf inside a store. What can Apple learn from this?

Very little. Apple already has a microlocation strategy: iBeacons, introduced back at WWDC13 using standard Bluetooth Low Energy. In 2015, Google attempted to copy iBeacons with its own Eddystone BLE beacons, but they apparently never gained traction. Rather than doubling down on wireless transmitters to accurately locate users indoors, Google demonstrated something this year that doesn't require the installation of beacons.

Our new #Tango-enabled Visual Positioning Service helps mobile devices quickly and accurately understand their location indoors. #io17 pic.twitter.com/1pYlCGM8eg

— Google (@Google) May 17, 2017

Instead, VPS requires that users walk around a retail store with their phone out, camera pointed ahead, sort of like Google Glass without the glasses. This seems smart until you think about it briefly. It also bets that shopping in big retail stores is the future. As presented, VPS seems stupendously dumb— and displays an ignorance of technological and societal trends on the level of last year's bozo Project ARA.

Apple should take care to note that showing off poorly conceived ideas in a way that makes them look techy and cool is still dumb. Hopefully it won't waste our time with this kind of stuff at this year's WWDC. If there's a practical application of AR, show it instead of expecting us to believe that the future involves blindly navigating big box retail using one's phone as a water witch visual divining rod.

Google Assistant on iOS

Another idea Google presented involved taking a key competitive advantage tied to its own Android platform and giving it away to others. Google Assistant debuted last year as the search giant's intelligent, conversational alternative to Siri, initially exclusive to Pixel phones and integrated into its Allo messaging app and Google Home appliance.

Google has since made the feature available on other premium Androids (running Android Marshmallow or Nougat), and now it's available on iOS. Can Apple learn something here?

Probably not. Google appeared to overestimate the potential of "premium Android" as a platform. Globally, the company announced at IO17 that only 100 million Android devices can currently run its Assistant, giving it an installed base smaller than the Macintosh. Compared to the "2 billion active users" Android claims, that's a very small population.

At the beginning of 2016, Apple noted an installed base of "over 1 billion active devices." Most of those are iOS (there are an estimated 100-150 million Macs and 400 million iPads, suggesting about half a billion iPhones in active use), and the overwhelming majority of those are running Apple's latest iOS 10.

In other words, Apple's installed base of premium iOS hardware is around nine times as large as the fraction of Android users who have higher-end hardware running a fairly up-to-date version of Android.
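The "nine times" comparison is a back-of-the-envelope calculation using only the figures above. It treats Apple's "over 1 billion" as exactly 1 billion and, as a simplifying assumption, counts every non-Mac device in that total as iOS hardware (the total may also include products such as Apple Watch and Apple TV). Here $N_{\text{Assistant}}$ stands for the 100 million Android devices Google said can run Assistant:

$$
\begin{aligned}
N_{\text{iOS}} &\approx 1000\,\text{M} - (100 \text{ to } 150)\,\text{M Macs} = 850 \text{ to } 900\,\text{M} \\
N_{\text{iPhone}} &\approx N_{\text{iOS}} - 400\,\text{M iPads} = 450 \text{ to } 500\,\text{M} \\
\frac{N_{\text{iOS}}}{N_{\text{Assistant}}} &\approx \frac{850 \text{ to } 900\,\text{M}}{100\,\text{M}} = 8.5 \text{ to } 9
\end{aligned}
$$

At the low end of the Mac estimate the ratio reaches 9; at the high end it is closer to 8.5.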

In more affluent nations (including Australia, the U.S., Great Britain and Japan), iPhone accounts for 40 to 50 percent of all smartphones sold, regardless of price. Apple sells the lion's share (around 80 percent or more) of the world's premium class phones and tablets, including over 80 percent of US tablets above $200, according to NPD.

Plenty of pundits have wondered aloud about why Apple hasn't ported iMessages, Photos, iMovie, GarageBand or other iOS-exclusive apps to Android. One key reason is strategic (to give iOS differentiated, superior advantages), but the other obvious problem is that the valuable demographic that does exist on Android (and can run modern software) is actually quite small.

That explains why Google has worked so hard to get its Allo, Duo, Google Maps, Android Wear and now Assistant installed on iOS, why Microsoft ported Office to iPad years before it targeted Android, and why Apple's only apps for Android were either acquired with Beats or help migrate users to iOS. For all the talk of Android's huge market share and installed base, there's rarely much consideration of the real quality and value of those metrics.

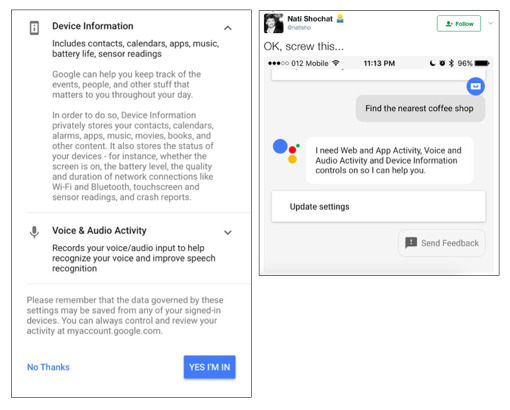

Google Assistant as a search engine

At IO17, Google's voice Assistant was demoed as a text-oriented bot— effectively exposing the service as a conventional search engine. Apple's Siri already lets you edit its interpreted voice requests, but adding a fully text-based way to interact with Siri (similar to Spotlight Intelligence, with a wider scope) would be a welcome addition in iOS 11. Along those lines, Apple has already patented the idea of integrating Siri into iMessage conversations.

Another false narrative framed as a problem for Apple with regard to Siri is that its efforts to protect users' privacy are harming its ability to collect and analyze data from users, causing it to fall behind Google in "machine learning." Apple shattered that notion last year by outlining differential privacy, a technique for learning aggregate patterns from user data without learning about any individual user.
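How can a company learn from its users without collecting their individual answers? A textbook building block of local differential privacy is randomized response. The Python sketch below illustrates only that general idea; it is not Apple's implementation, which is considerably more elaborate, and the function names, seed and 30 percent example rate are hypothetical. Each device randomizes its answer before sending it, so any single report is deniable, yet the collector can still invert the known noise to estimate the population-wide rate:

```python
import random
from typing import Sequence


def randomize(truth: bool, rng: random.Random) -> bool:
    """Randomized response (hypothetical helper, runs on the device).

    With probability 1/2 report the true answer; otherwise report the
    result of a second fair coin flip. A true "yes" is reported as "yes"
    with probability 0.75 and a true "no" with probability 0.25, so each
    report satisfies local differential privacy with epsilon = ln(3).
    """
    if rng.random() < 0.5:
        return truth
    return rng.random() < 0.5


def estimate_true_rate(reports: Sequence[bool]) -> float:
    """Collector side: invert P(yes) = 0.5 * t + 0.25 to estimate t."""
    observed = sum(reports) / len(reports)
    return (observed - 0.25) / 0.5


if __name__ == "__main__":
    rng = random.Random(42)
    true_rate = 0.30  # hypothetical share of users with some attribute
    population = [rng.random() < true_rate for _ in range(100_000)]
    reports = [randomize(answer, rng) for answer in population]
    print(f"estimated rate: {estimate_true_rate(reports):.3f}")
```

Because the coin-flip probabilities are public, the collector can subtract the expected noise in aggregate. The price is extra variance, so estimates are only useful across large numbers of reports.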

The Google Assistant app hits the AppStore: https://t.co/ovdVBOSszU

— Nati Shochat (@natisho) May 17, 2017

However, Google appears driven in the opposite direction. Rather than seeking to respect local data, Assistant demands broad access to a wide swath of user data including web history, app activity and device information. And as noted by Benedict Evans, it also ignores local contacts and calendars with the expectation that all your data is stored in Google's cloud services.

Like Now before it, Google Assistant ignores the calendar & contacts on your phone. Only works once you've bought into all other G services

— Benedict Evans (@BenedictEvans) May 17, 2017

Microsoft brought its Office apps to iOS to earn subscription revenue. Google Assistant (like Allo, Duo and Google Maps) is free because the company desperately wants the valuable data iOS users have.

Further, the fact that Google is pushing to get Assistant on iOS now, in advance of spreading it to the "other 95 percent" of Android's "two billion" global installed base, says something both about how valuable those other 1.9 billion lower-end Androids are and about how pointlessly difficult it is for Google to bring modern new software features to that outdated, underpowered installed base.

The lesson Apple might take away here: don't chase Google's low-end, high-volume mobile device model, but do copy Google's intent of focusing on iOS.

Android O-riginal?

Apple isn't the only company that comes up with new ideas, and it frequently implements good ideas first delivered elsewhere. Last year, iOS 10 Messages borrowed ideas like Stickers and app integration seen in other popular IM platforms, for example.

In contrast, Google's previously outlined Allo and Duo were shameless rip-offs of iMessage and FaceTime that didn't really add anything, making it effortless for Apple to leapfrog Google's low bar for innovation. Google previously did the same kind of carbon-copy cloning of Apple Pay and CarPlay, while its Pixel phone looked like a straight-up Frankenstein iPhone 6. The company's other hardware, Google Home, is just an echo of Amazon's "smartphone? here's a landline with Siri!" strategy.

That makes it rather eye-rolling that this year Google's headlining "look at me" features in Android O included Apple Data Detectors renamed as "Smart Text Selection," iOS 8's web browser-like forms AutoFill in apps, iOS 9's iPad floating Picture-In-Picture feature and Find My Device, an iOS 4.2 feature Apple released in 2010 which Google first copied three years later.

Google has belatedly pretended to also care about things like Activation Lock and fingerprint biometrics (and security in general) but has sought to portray its own identical versions patterned after Apple as completely independent and original work. Come on, Google: slide that stuff out on page two, and lead with something amazing that you did on your own.

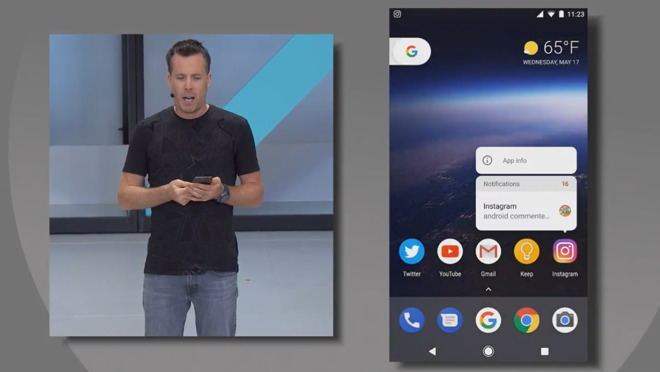

The more original Android O ideas were less exciting. Notification Dots, which present a given app's notifications using a sort of fake 3D Touch (pictured), are arbitrarily different from iOS but look like they produce a cluttered and clumsy interface.

Due to very slow (and slowing) penetration rates, it will be years before most Android devices adopt this, and by then it may have changed. In contrast, Apple will likely make improvements to 3D Touch, which an increasingly large proportion of the iPhone installed base now supports.

Google Play Protect offers virus scanning of Android apps from the Google Play store, needed only because Google doesn't really vet its apps up front. Smart Reply in Gmail sounds like an effort to repeat "machine learning," the buzzword of the year, rather than some sort of really interesting innovation.

Android Go seeks to make Google's platform work better on low-end hardware, but Apple doesn't have this problem. iOS is already far superior to Android in the way it manages memory and other resources, and Apple's installed base is effectively all modern, premium hardware compared to Android.

Google invents the driverless Keynote

In previous years, Google ambitiously sought to reinvent television, improve upon Apple's iPad in tablets, deliver a new wave of premium "Silver" smartphones, pioneer wearables with Glass and Android Wear, make a bold impact in the enterprise, rethink netbooks without Windows, make Ara modular component phones and deliver a new video game console.

However— despite droning on for two solid hours— Google's IO 2017 Keynote offered no ambitious strategy for turning Android into anything more than what it already is: a lowest common denominator platform for basic phones aimed at emerging markets where most users lack the luxury of an expectation of privacy and will endure surveillance advertising if necessary.

This year's Android O release got, at best, some utilitarian updates, while much of the focus was directed at interesting apps, albeit none that are really platforms. Why drag developers out to a keynote to show them Lens, Photos, YouTube and a Jobs app? Additionally, many of the features Google showed off during the IO keynote were simply an effort to claim credit for things that have already been done.

Is this really the keynote address representing the world's most widely spread development platform, or just a company picnic thrown by a bunch of engineers who are cool with just coasting along selling ads online?

We have another two weeks to see what Apple has planned for its own Worldwide Developers Conference, to be held during the first week of June, but Google seemed to offer more warning signs about complacency at IO17 than any real framework of strategic innovation for Apple to build upon.

GPS without a destination

Google's IO17 seemed to generate the level of excitement of a keynote by Samsung or HP: a series of presentations that appear to say "look at everything we worked on!" without any strategic emphasis or a particular destination in mind. It also reflects that the company lacks Apple's ability to say no.

The event also provided mounting evidence that Google has little real ability to deliver on its initiatives. Google's chief executive Sundar Pichai was supposed to bring some adult supervision to Android and turn its freewheeling tinkering— subsidized by a reliable advertising profits engine— into actual deliverables.

Three years ago at IO14, Pichai belatedly began efforts to appeal to the enterprise with Android 5 Lollipop, and over the next year he focused on broadly deploying the company's Android 6 Marshmallow software via Android One, an affordable handset reference design that could be produced for as little as $100.

Last year Pichai's Google radically changed direction to aim at premium-priced consumer hardware with its own expensive Google-branded Pixel phones and a new vision for smartphone-based Virtual Reality that upgraded Cardboard into a fabric headband with Daydream.

Instead, the Android L, M and N releases have each set new lows in penetrating the installed base. IO14 didn't result in the enterprise taking Android seriously. IO15's Android One was soundly ignored by the very subcontinent it was aimed at, and IO16's Pixel was a commercial bust.

Other turns suggested at previous Google IO events have similarly ended up at dead ends, including Project ARA and its plans to host Android apps on ChromeOS. Somehow nobody at Google runs any of its ideas past a basic sanity check. A phone that comes apart? Android's huge library of smartphone Java applets on netbook hardware nobody is currently using outside of American K-12 schools?

The company's annual refocusing appears to reflect a shallow interest in hardware, which is understandable given that Google earns virtually nothing from the end users it supposedly sells Android products to; Google's real customer is its advertisers, from whom it makes virtually all of its income.

Of course, the primary reason for Google regularly shifting away from its previous year's strategy for a new one is that each of those strategies failed miserably. This year, it appears Pichai's teams decided to give up any ambitious pretense and just come out as an army of ad engineers who build novel ways to shamelessly surveil users for advertising purposes inside apps that require broad access to all your data. At the same time, they pretend that Android is something other than an open implementation of iOS without rigorous engineering, quality assurance, or even any real plan.

Let's hope Apple doesn't copy Google's arrogant complacency.

Daniel Eran Dilger

