Apple's digital assistant Siri continues to lag behind the Google Assistant, according to a group test that also found all digital assistants have generally improved at understanding queries and providing correct responses.

The Digital Assistant IQ Test by Loup Ventures repeats a similar trial from April 2017, tasking Apple's Siri, Google Assistant, Amazon's Alexa, and Microsoft's Cortana with responding to a series of questions. Last year's test covered only smartphone-based assistants, but Alexa's iOS app allowed it to be included in this year's roster.

Each assistant was asked a total of 800 questions and graded on whether the question was correctly interpreted and whether a correct response was provided.

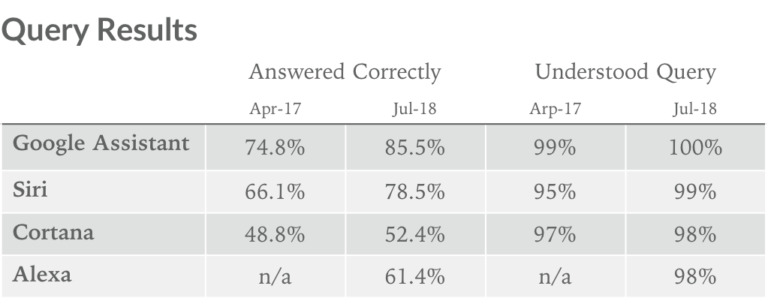

The Google Assistant topped the list, providing correct results 85.5 percent of the time, with Siri in second place with 78.5 percent of queries answered correctly. Alexa scored 61.4 percent correct, while Cortana rounded out the list with 52.4 percent.

There were improvements across the board, with Google Assistant up from the 74.8 percent it scored last year, while Siri and Cortana scored 66.1 percent and 48.8 percent, respectively, in 2017. The assistants' ability to understand queries has also improved, with Siri rising from 95 percent understood to 99 percent, and Google moving from 99 percent to 100 percent.

"Both the voice recognition and natural language processing of digital assistants across the board have improved to the point where, within reason, they will understand everything you say to them," analysts Gene Munster and Will Thompson write.

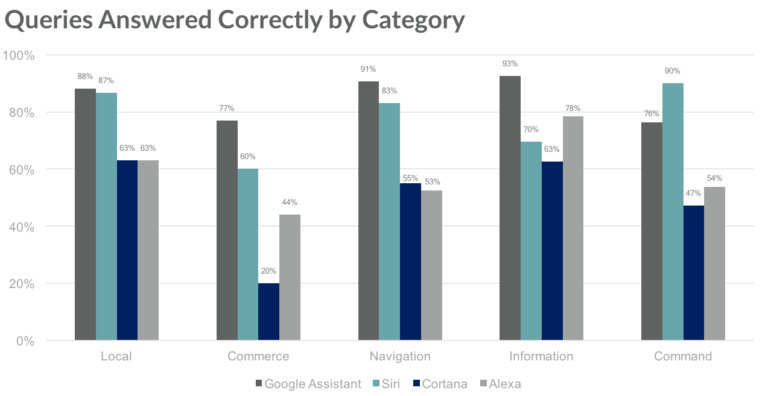

As part of the testing, the questions were divided into five categories, testing the assistants' ability to interpret local knowledge, commerce, navigational, informational, and command queries. The report notes the questions were modified for this year's test to "reflect the changing abilities of AI assistants." Those changes appeared to cause drops in results for navigation queries, though the other categories still saw improvements.

On a category basis, Google Assistant leads in every category except command queries, which Siri dominates.

"We found Siri to be slightly more helpful and versatile (responding to more flexible language) in controlling your phone, smart home, music etc.," the report states. "Our question set includes a fair amount of music-related queries (the most common action for smart speakers). Apple, true to its roots, has ensured that Siri is capable with music on both mobile devices and smart speakers."

The testing used iOS apps for Cortana and Alexa, which the analysts claim are not entirely reflective of their capabilities when used on other platforms. For example, the Alexa app's inability to set reminders or alarms, or to send emails, hurt its Command category performance. As Siri and Google Assistant are baked into iPhones and Android smartphones, it is suggested Cortana and Alexa are fighting an uphill battle when compared on smartphones.

The analysts are hopeful that voice-based computing will improve over time, as its purpose is to remove friction for the user. Alongside the ability to understand conversational-style follow-up queries, as well as routines and smart home scenes, Siri Shortcuts is highlighted as another friction-reducing feature, one that creates "mini automations" that could be triggered by command.

Payments and ride-hailing are two promising areas for future voice development, as they require little to no visual output. While Siri and Alexa were able to hail a ride, and Siri and Google Assistant were capable of sending money, the firm expects such features to be adopted by the assistants that do not already offer them.

Malcolm Owen

24 Comments

In short, Siri showed biggest improvement in understanding and correct response, but still lags Google in response rate. I depend on Siri more and more, yet these must be lab conditions, as Siri certainly doesn't understand me correctly 99% of time. I hope Munster improves these comparisons (at least from what I glean by these articles) as assistants take on more tasks such as comparison shopping and maintenance of device OS chores. Also if he can make tests on how fast they can be trained and how durable that training is.

Well I dunno if I'm stuck on some 2003 version of Siri alpha but I'm nowhere near the 99% for "understood query", it's less than 50% for me.

Great job Siri.

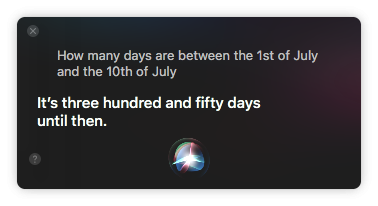

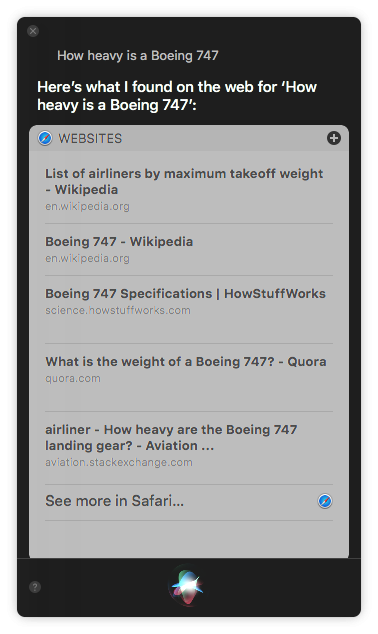

Unless I need an answer to the most basic of questions, I just don't bother anymore. It takes longer to ask Siri and wait for its incorrect response than to search myself. They seem to have abandoned Wolfram Alpha integration which was a great Siri knowledge filler. Almost everything now is "here's what I found on the web for..." For another example: I ask what the temperature is in Canterbury, and despite actually being in Canterbury, UK it decides I'd rather know the temperature in Canterbury, New Zealand. Same if I ask for directions from anywhere in the UK to Canterbury. It tries to get directions to Canterbury, NZ, and then says it can't get directions to there. Brilliant.

So I have no idea where these statistics are coming from, but anecdotal evidence from my own and a few friends' usage shows its understanding is pretty awful at best.

I don't need stats to prove what I know... Siri sucks... this report must come from Fox News, home of the alternate universe.