Calls between iPhone users could benefit from automatic audio filtering that improves the sound each user hears, a system that would only come into effect once it detects that one user is having trouble hearing the other.

Phone calls have historically suffered from numerous issues that can make them difficult to hear, ranging from the quality of the connection to, most commonly, external factors. A caller in a noisy environment or with too quiet a voice may not be heard clearly by the recipient, which could result in a request to repeat what was just said.

Using audio processing to improve sound quality isn't a new concept, and it can work on a phone call to a point, with algorithms such as blind source separation, pickup beamforming, spectral shaping, and acoustic echo cancellation used alongside noise reduction to good effect.

Even so, there isn't necessarily a desire to have these algorithms running at all times, as they typically only need to be enabled in specific circumstances. Processing the sound can also affect the call in unwanted ways, distorting the speech further.

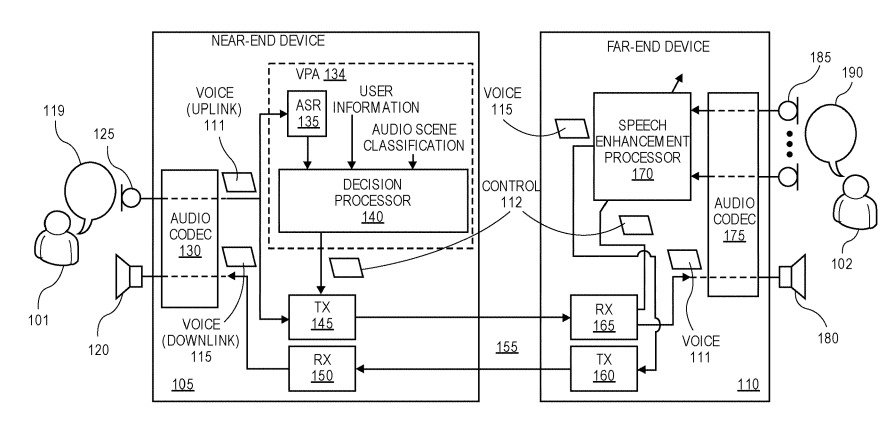

In a patent application published by the US Patent and Trademark Office on Thursday, Apple's filing titled "Transparent near-end user control over far-end speech enhancement processing" describes how one smartphone in a call can be triggered by the other to perform specific speech enhancement tasks on the audio it picks up.

The recipient's smartphone will listen for trigger phrases in its user's speech that it could interpret as a need for audio processing assistance. Once one is detected, a signal is sent to the other phone in the call, which performs the required processing for the remainder of the conversation.

The trigger could consist of an explicit request to the user's virtual assistant, such as "Hey Siri, can you reduce the noise I'm hearing?"

The "transparent" portion of the title refers to continuous monitoring of the user's speech for utterances that indicate trouble hearing the other person, such as "I can't hear you," "Can you say that again?", "It sounds really windy where you are," and "What?" While none of these is a direct command, the system would treat each one as a sign of a problem that needs rectifying, and send a signal to the other phone.

Furthermore, the utterances or trigger phrases would be compared against a database that associates them with different processing techniques. Depending on the words used, different commands can be sent to the far-end smartphone to process the audio.
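To make the phrase-to-processing lookup more concrete, here is a minimal Python sketch. It assumes speech recognition has already turned the near-end user's utterance into a text transcript. The phrase list, the choice of processing for each phrase, the `Processing` names and the JSON message format are all hypothetical illustrations, not details taken from Apple's filing.

```python
import json
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Processing(Enum):
    """Kinds of far-end processing the near-end phone might request (illustrative)."""
    NOISE_REDUCTION = "noise_reduction"
    WIND_NOISE_REDUCTION = "wind_noise_reduction"
    SPECTRAL_SHAPING = "spectral_shaping"


# Hypothetical phrase database: substrings to look for in a transcript, mapped
# to the processing the near-end phone would ask the far-end phone to apply.
PHRASE_DATABASE = {
    "reduce the noise": Processing.NOISE_REDUCTION,
    "sounds really windy": Processing.WIND_NOISE_REDUCTION,
    "can't hear you": Processing.SPECTRAL_SHAPING,
    "say that again": Processing.SPECTRAL_SHAPING,
}

# Short utterances such as "What?" only count when they are the whole
# transcript, so ordinary sentences containing "what" are ignored.
EXACT_PHRASES = {
    "what": Processing.SPECTRAL_SHAPING,
}


@dataclass(frozen=True)
class EnhancementRequest:
    processing: Processing
    trigger: str  # the phrase that matched


def match_utterance(transcript: str) -> Optional[EnhancementRequest]:
    """Return the processing to request for a transcript, or None if no phrase matches."""
    # Normalize case and curly apostrophes before matching.
    text = transcript.lower().replace("\u2019", "'")
    whole = text.strip(" ?!.,")
    if whole in EXACT_PHRASES:
        return EnhancementRequest(EXACT_PHRASES[whole], whole)
    for phrase, processing in PHRASE_DATABASE.items():
        if phrase in text:
            return EnhancementRequest(processing, phrase)
    return None


def encode_request(request: EnhancementRequest) -> str:
    """Serialize a request as the signal sent to the far-end phone (hypothetical JSON format)."""
    return json.dumps({
        "type": "enhance",
        "processing": request.processing.value,
        "trigger": request.trigger,
    })
```

For example, `match_utterance("Sorry, I can't hear you")` yields a request for `Processing.SPECTRAL_SHAPING`, while "What time is it?" matches nothing. Plain substring matching is only the simplest possible stand-in here; a real system would presumably need to cope with far more varied phrasing.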

The near-end device's system could also make processing requests based on messages it receives from the far-end phone, such as an alert that there are multiple talkers on that end of the line. Such an alert could automatically trigger a request to turn on blind source separation.

The decision processor, the system that determines which processing should be used via the database and other means, could also monitor the general audio environment for other factors that could affect a call, and automatically apply the required filtering.
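The decision processor's other inputs, alerts from the far-end phone and the sound of the call itself, can be sketched in the same spirit. This Python example is hypothetical throughout: the alert names, the -30 dBFS noise threshold, and the idea of measuring level over samples taken from a pause in the far-end talker's speech are assumptions made for illustration, not details from the filing.

```python
import math
from dataclasses import dataclass, field
from typing import List, Sequence, Set

# Hypothetical mapping from alerts the far-end phone might send to the
# processing the near-end phone would request in response.
ALERT_TO_PROCESSING = {
    "multiple_talkers": "blind_source_separation",
    "echo_detected": "acoustic_echo_cancellation",
}


def rms_dbfs(samples: Sequence[float]) -> float:
    """RMS level of float samples in [-1.0, 1.0], in dB relative to full scale."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")


@dataclass
class DecisionProcessor:
    # Hypothetical threshold: background audio louder than this is treated
    # as noise worth suppressing.
    noise_threshold_dbfs: float = -30.0
    # Processing already requested; assumed to stay on for the rest of the call.
    active: Set[str] = field(default_factory=set)

    def _request(self, processing: str) -> List[str]:
        """Return [processing] the first time it is needed, [] afterwards."""
        if processing in self.active:
            return []
        self.active.add(processing)
        return [processing]

    def on_far_end_alert(self, alert: str) -> List[str]:
        """Map an alert from the far-end phone to any new processing request."""
        processing = ALERT_TO_PROCESSING.get(alert)
        return self._request(processing) if processing else []

    def on_background_audio(self, samples: Sequence[float]) -> List[str]:
        """Check samples from a pause in the far-end speech (assumption) for noise."""
        if rms_dbfs(samples) > self.noise_threshold_dbfs:
            return self._request("noise_reduction")
        return []
```

Keeping `active` as a set reflects the article's description of processing that, once requested, continues for the remainder of the conversation, so each kind of processing is only signaled once.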

Apple files numerous patent applications with the USPTO every week. While there is no guarantee that such a system will be made available in a future product or service, the filings do indicate areas of interest for the company's research and development efforts.

The proposed system has some promise, but would face an uphill battle for adoption across the mobile industry. Ideally, all mobile device vendors would need to adopt it; otherwise it would only work between devices from the same manufacturer, and rival firms may not want to hand control over their devices' audio processing to another company's smartphone.

It would also require phone networks to change how they handle calls, so that the signals can pass between the devices.

Arguably, Apple already has a communications network in which it could implement such a system: FaceTime. Because FaceTime is Apple-specific, the company already has extensive control over most of the connected hardware, which would make transmitting processing signals and acting on them relatively trivial.

Malcolm Owen


6 Comments

FaceTime has become nearly unusable for me due to Apple’s excessive noise suppression. If there is any background sound (let alone noise) whatsoever, everything the other person says is cancelled out, and must be repeated. It’s really awful.

The phone call is the most frustrating, flaky and archaic tech in the iPhone.

How's that for irony?