In 2005, Steve Jobs announced that Apple would transition Macs to PC-standard Intel x86 processors. Nearly fifteen years later, Apple appears poised to make another CPU shift, one that could prove far more significant for the future of technology. Here's why.

Rapid changes in the chip industry over the past five years

Five years ago, I wrote "Five barriers that might hold Apple back from moving Intel Macs to custom ARM chips." While those factors did explain why Macs haven't transitioned to ARM processors yet, things have materially changed over the last five years.

Apple originally moved Macs to Intel for good reasons. In 2006, Apple had no significant in-house chip design team, and it lacked the vast capital needed to develop its own chip technology. Leveraging the work Intel had already done, and had available to sell, not only made sense but was by far the best of the very few options available to Apple at the time.

However, after launching iPhone in 2007, Apple began investing in custom ARM application processor design, which enabled it to ship the 2010 iPad with its own A4 chip rather than an Intel processor. With that, Apple began incrementally changing the rules of the game.

By 2015, Apple was one of the world's leading mobile chip design firms and, thanks to massive volumes of profitable mobile device sales, had $150 billion in cash to embark upon ambitious new projects. Fast forward to today: Apple has delivered five generations of A-series chips since 2015, and they now easily rival Intel's notebook processors.

Apple's shift from total dependence on Intel for the future of its Macs to its position today, with a decade of advanced A-series mobile application processors that outclass Intel's mobile efforts, resembles Microsoft's earlier transformation from an application vendor dependent on the Macintosh in the mid-1980s into the leading OS vendor in the PC industry just a decade later.

Intel isn't maintaining a comfortable lead in processor technology

Meanwhile, Intel hasn't delivered a comparable leap in x86 efficiency or compute power, one large enough to keep it comfortably ahead of the A-series application processors Apple uses in everything from iPhone to iPad Pro. The case for moving Macs to a custom Apple chip as well keeps getting stronger.

Additionally, the Wintel PC sales that have driven Intel's x86 chip development since the 1990s have plateaued for several years now. Apple's Mac sales growth has outpaced the broader PC industry in nearly every quarter for many years. With little growth in PC volumes and collapsing notebook prices, the PC market is no longer funding huge new leaps in research and development. Instead, Intel has focused on building other types of processors that can make money in more attractive markets.

Intel attempted to enter the mobile application processor market through Atom x86 partnerships with Google on Android, and it has tried to shore up sales of premium server chips. Outside of conventional x86 CPUs, Intel acquired Infineon's wireless division to enter the baseband processor market. These modem chips pair a dedicated ARM CPU with radio circuits that manage a mobile device's wireless capabilities.

Intel's most recent push for relevance in mobile broadband chips won a temporary victory when Apple adopted its modems for iPhones. But with future iPhones set to use Qualcomm modems, and eventually Apple's own, Intel is losing its mobile business as well, prompting it to exit the 5G smartphone modem business entirely.

As Intel's overall performance as a PC chipmaker fades, another factor previously cited as a reason Apple might not want to leave x86 also looks less important. By standardizing Macs on x86, Apple could potentially have dual-sourced chips from both Intel and AMD. Yet Apple never did so, and it has never had real trouble delivering its own A-series chips without multiple sources.

After fighting over modems, Apple is ready to own its own supply

To understand why Apple might want to own its own supply of Mac processors rather than relying on Intel, consider its recent history in fighting to manage its supply of mobile modem baseband processors from Qualcomm and Intel.

Apple executives explained in court testimony during the Qualcomm trial that they had sought to use Intel modems in the 2013 iPad mini 2, but that Qualcomm had flexed its power like "a gun to our head" to keep Apple exclusively dependent on Qualcomm's chips.

As contractual disputes mounted, Apple grew increasingly interested in alternatives to Qualcomm and hoped that Intel could offer a viable roadmap. In 2016 and 2017, Apple dual-sourced modems for its iPhones from both Qualcomm and Intel. However, in 2018 Qualcomm refused to sell Apple its chips at all, forcing iPhones to use Intel's modems exclusively.

Yet while Intel's existing modems were only marginally behind Qualcomm's, it appeared that Qualcomm could gain a much larger lead in the upcoming shift to 5G modems, threatening to leave Apple's future iPhones behind Qualcomm-based Androids in the transition to 5G.

As it became clear that Intel couldn't deliver 5G modems in a competitive timeframe, Apple settled with Qualcomm, ending Intel's hopes. It chose to accept a short-term dependence on Qualcomm modems while pursuing an internal plan to develop its own future iPhone modems.

If Apple can feel confident investing in its own baseband modem development independent of Intel, in a highly specialized business it has never previously undertaken, it should feel far more confident building its own application processors for Macs. After all, it entered the custom CPU design business over ten years ago and has been leading the mobile industry in custom CPUs.

Apple's A-series mobile chips have muscled past rival application processors from Texas Instruments, Nvidia, Qualcomm, and Samsung, and have stayed well ahead of parallel custom ARM developments, including Huawei's Kirin. Given that there is more money in the mobile market for phones and tablets than in global PCs, Apple can clearly leverage its massive, highly lucrative and unique position in mobile to adapt its existing CPU and GPU technologies into processors for its Mac notebooks. The only question is, "does it want to?"

Evidence Apple is building towards a non-Intel future for Macs

On the hardware side, Apple has gone well beyond simply building its own custom CPU for its A-series chips. The A-series package now also includes Apple's own GPU, along with a custom memory controller, a storage controller, a Secure Enclave that manages Touch ID and Face ID authentication, a custom image signal processor (ISP) supporting advanced camera features, custom encryption silicon for secure boot and full disk encryption, and advanced codecs for decoding audio and video.

Many of these features are also incorporated into Intel's x86 chips. Yet rather than growing increasingly dependent upon Intel's custom silicon, Apple has been adding its own custom T-series chips to modern Macs. The latest T2 chip supports iOS-like features including Touch ID, Touch Bar, FaceTime camera features, secure boot, disk encryption, advanced media decryption and compression.

Macs do continue to use integrated GPUs from Intel or dedicated GPU hardware from AMD. But here too, Apple has introduced an abstraction layer: Metal. Developers on both iOS and macOS write to Apple's Metal APIs, which take advantage of whatever GPU is available. That makes it increasingly feasible for Apple to introduce its own GPUs in future Macs while continuing to support existing software.

Software support has long favored the status quo, keeping established architectures such as Intel's x86 and ARM entrenched. While it isn't especially hard to design a new CPU architecture that is technically superior, it has historically been very difficult to move the installed base of software over to new silicon.

Intel itself ran into this problem in its efforts to replace x86 with the enhanced RISC designs of its i960 and i860, or with the similarly all-new Itanium (IA-64) architecture. Apple's effort to introduce PowerPC with Motorola and IBM likewise found that one of the biggest problems with a new chip architecture is delivering and distributing enough native software that runs acceptably fast on it.

By introducing iPhone and then iPad, Apple created massive interest in writing new software for ARM chips. Apple made this easy by delivering the compiler infrastructure that let programmers write to Apple's APIs, an approach that simplified later processor transitions, such as when Apple introduced its 64-bit A7 in 2013.

Google's Android and Microsoft's Windows Mobile were even more ambitious in supporting multiple processor architectures. Yet problems remained: software compiled specifically for one chip, as Microsoft's was, wouldn't run on another phone, while Android apps distributed as generic bytecode weren't optimized to run fast on any specific processor.

When Microsoft released Windows RT for ARM processors, the new machines couldn't even run existing Windows desktop software. Meanwhile, although the vast majority of Android phones used ARM processors, Android's "run anywhere" design meant its software wasn't optimized for any specific chip. iPhones with similar processing power and less RAM still run comparable software better than similarly specced Android phones.

Apple's approach was to compile iOS apps for a specific chip architecture while retaining the ability to move that software to a new, optimized architecture as needed. Again, this enabled Apple to introduce the first 64-bit mobile ARM chip and rapidly ensure that software was compiled to take advantage of it.

Additionally, Apple continuously enhanced its ability to deliver optimized code specific to a user's hardware. Rather than making the user figure out which version of software to buy, the App Store can itself determine and deliver the code needed to run on a specific device. A user can buy one app and have optimized versions delivered to several different devices automatically, without even knowing anything about the underlying hardware on them.

All of this work on iOS can be translated to Macs. The App Store plays a big role in distributing the correct version of software to new hardware. That means Apple could introduce a mix of ARM and x86 models, and handle the distribution of optimized software through the App Store, solving a problem that has long stood in the way of switching away from x86 without some sort of emulation or translation.
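To make the distribution idea concrete, here is a toy model of how a store could choose which compiled build, or "slice," to send to a given machine. It is a sketch under stated assumptions, not Apple's actual App Store logic: the architecture names mirror real ones (arm64, arm64e, x86_64, x86_64h), but the compatibility table and the `pick_slice` function are hypothetical.

```python
from __future__ import annotations

# Hypothetical compatibility table: for each device architecture,
# the slice architectures it can run, best match first.
COMPATIBLE = {
    "arm64e": ["arm64e", "arm64"],
    "arm64": ["arm64"],
    "x86_64h": ["x86_64h", "x86_64"],
    "x86_64": ["x86_64"],
}


def pick_slice(device_arch: str, available: dict[str, bytes]) -> str | None:
    """Return the best slice name in `available` for `device_arch`, or None."""
    for arch in COMPATIBLE.get(device_arch, []):
        if arch in available:
            return arch
    # No compatible build: deliver nothing rather than an unrunnable binary.
    return None


if __name__ == "__main__":
    app = {"arm64": b"...", "x86_64": b"..."}  # one purchase, two builds
    print(pick_slice("arm64e", app))   # arm64
    print(pick_slice("x86_64h", app))  # x86_64
    print(pick_slice("armv7", app))    # None
```

The buyer purchases one app; the store, not the user, works out which build each machine receives, so ARM and x86 Macs could coexist without customers ever choosing a version.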

In parallel, Apple has also enabled developers to adapt their existing iOS apps to run on macOS Catalina via the new Catalyst. This will widen the range of titles available, regardless of processor dependence. Over just the past two years, Apple has also radically enhanced the App Store for both iOS and Macs, delivering a curated experience that makes it easy to discover new titles.

Combined with new initiatives like Apple Arcade, this is creating a perfect storm of software availability for Macs just as the tools to deliver architecture-specific code are being perfected. On top of this, Apple is also making progress in enterprise sales, creating the strongest market yet for Macs even as Windows and x86 chips fade in importance.

So we are now approaching ideal conditions for Apple to introduce Macs without x86 chips. Apple might, for example, release an entry-level notebook powered by a beefier version of the A14X chip headed to future iPad Pros, potentially paired with a similarly scaled-up Apple GPU.

It's also possible that Apple could make an even bolder transition to a new CPU architecture capable of delivering a bigger jump in processing power. We've already seen Apple build its own custom GPU, effectively a massively parallel processor tuned for the repetitive tasks common in rendering graphics, as well as the Neural Engine, first introduced in the A11 Bionic and specifically tuned for AI processing.

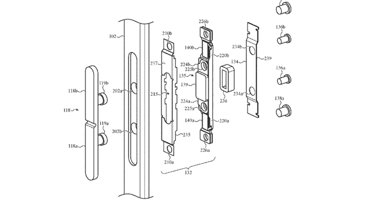

Apple also just introduced Afterburner, an accelerator card for the new Mac Pro built around a field-programmable gate array (FPGA), a chip whose logic can be reconfigured for specific tasks. It lets the Mac Pro's already fast Intel processors and powerful GPUs offload dedicated workloads to yet another type of custom processing hardware.

These developments indicate that rather than simply swapping the Intel CPU for an ARM CPU, Apple could increasingly turn Macs into a mesh of custom silicon engines, each specialized for certain types of tasks. It has already done this to a degree with its latest T2 Macs, and in particular with the Mac Pro and Afterburner.

Future Intel Macs could ship with a sidecar custom chip supplying an Apple GPU, Neural Engine and an FPGA processor like the one on Afterburner. Apple could also now ship non-Intel Macs with an ARM CPU without worrying about optimized software support. Further, Apple could develop a new custom CPU architecture that goes beyond ARM, an architecture best known for its use in mobile devices.

If Apple were to develop its own significantly different CPU architecture, it could extend that move to iOS devices as well, resulting in a proprietary processor family running all of Apple's devices. That could prove to be a major competitive advantage, and it's a move we've already seen in Apple's GPU and other custom silicon work.

Daniel Eran Dilger


75 Comments

Hot air

Nonsense and typical columnist speculation. There is no evidence that any of this is true or that it would make any sense to do.

I was totally with you until this “

I don’t think Mac software has had any sort of transition forces that would allow the type of distribution of different binaries that iOS devs had forced on them to great effect. I haven’t seen the tech that takes current Mac software and gets it to run on ARM. Not saying it can’t be done, and certainly Catalyst and SwiftUI are going to help for new development, but there are still technical hurdles that Apple hasn’t revealed how to jump yet.

Also not mentioned is the loss of Boot Camp... which Microsoft is slowly solving, though.

Overall I’ve been convinced for a long time that the transition is inevitable and everything Apple has done this year has only reinforced it. I actually imagine the loss of 32-bit apps will be more disruptive than the transition to a mix of ARM and Intel Macs on the market. I also think skeptics will be easily won over by battery life (along with convincing evidence of compatibility and performance).

Nice to read if you are locked in the thermal corner of a prosumer laptop. But sorry, from the iMac Pro perspective I see no interest at all in this prospective evolution.

I can only imagine that the author is being paid by the word.