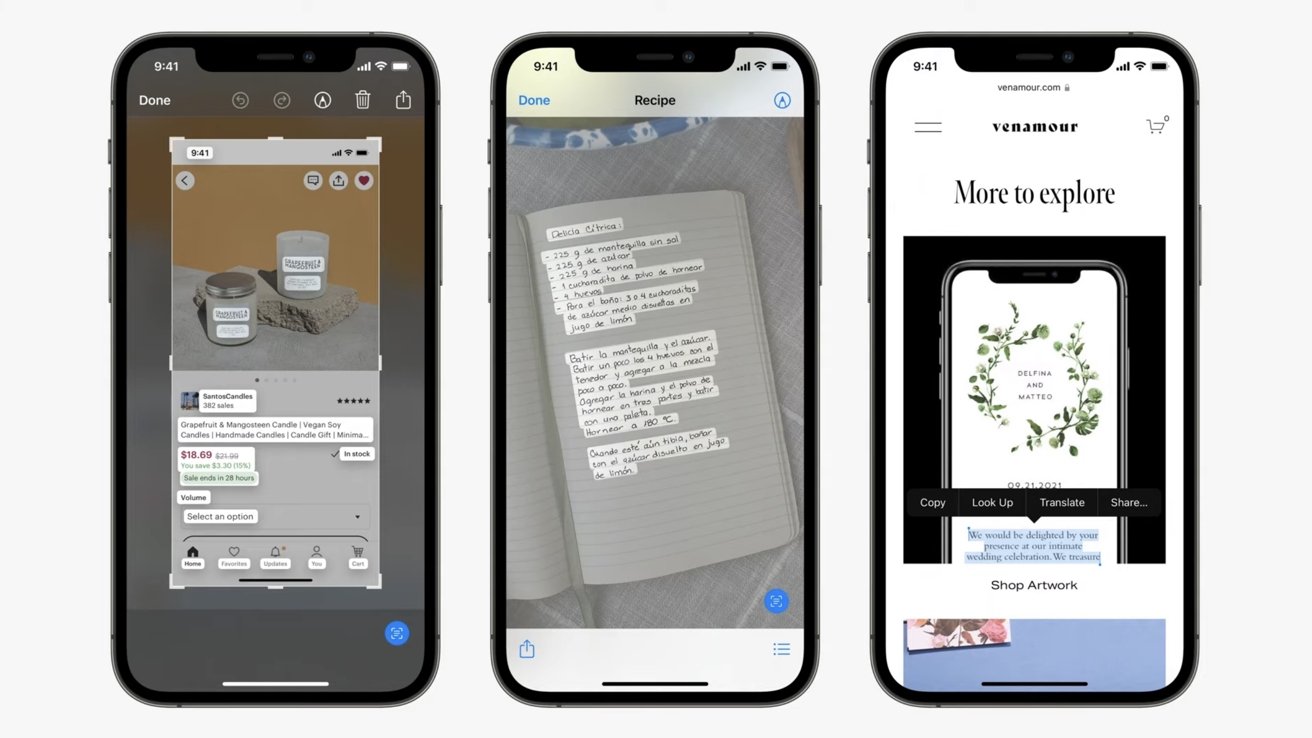

Live Text is a new feature in iOS 15, iPadOS 15, and macOS Monterey that allows users to select, translate, and search for text found within any image.

One of the new features announced at WWDC this year was Live Text. Coming to iOS 15, iPadOS 15, and macOS Monterey, it allows users to select, copy, look up, and even translate text found in any image.

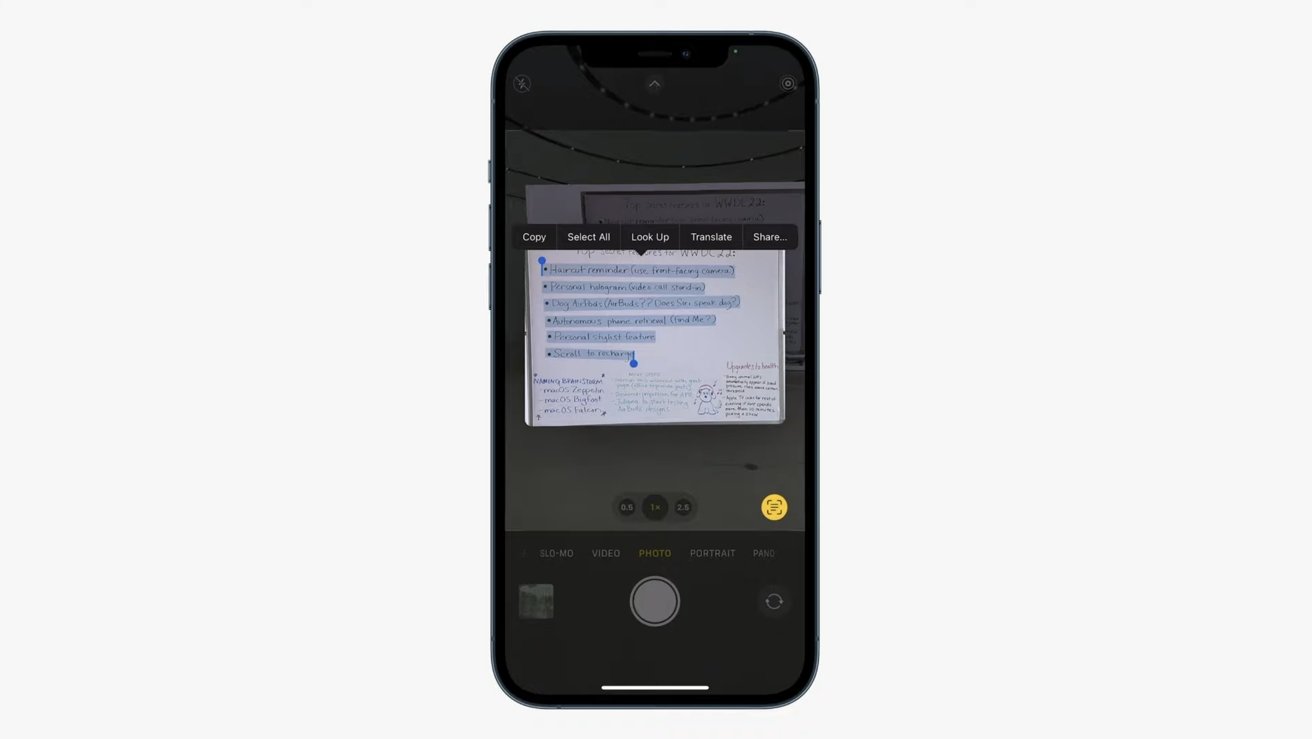

The demo used during the keynote was a meeting whiteboard with handwritten text. When the user opens the Camera app on iPhone and points it at the whiteboard, a small indicator appears in the bottom right corner, showing that text has been recognized in the viewfinder.

Tapping the indicator then allows you to copy or share the text wherever you'd like. During the demo, Apple VP Craig Federighi pasted the text into a Mail message with its bullet formatting intact.

Live Text works not only when capturing a photo, but also with any photo in the Photos app, where it can be used to select and share text. Any time the indicator appears in the lower right corner of the screen, text can be selected from the image.

Combined with system-wide translation in the new versions of iOS, iPadOS, and macOS, users can select text in a photo and tap the Translate command to read it in another language without ever leaving the image. For those traveling internationally, this feature could help with reading street signs, menus, and other unfamiliar text.

If an image includes a phone number or street address, users can tap on the text in the image to make a call or search for a location. Even a restaurant sign can prompt a search in Maps for that place of business, with directions just a few taps away.

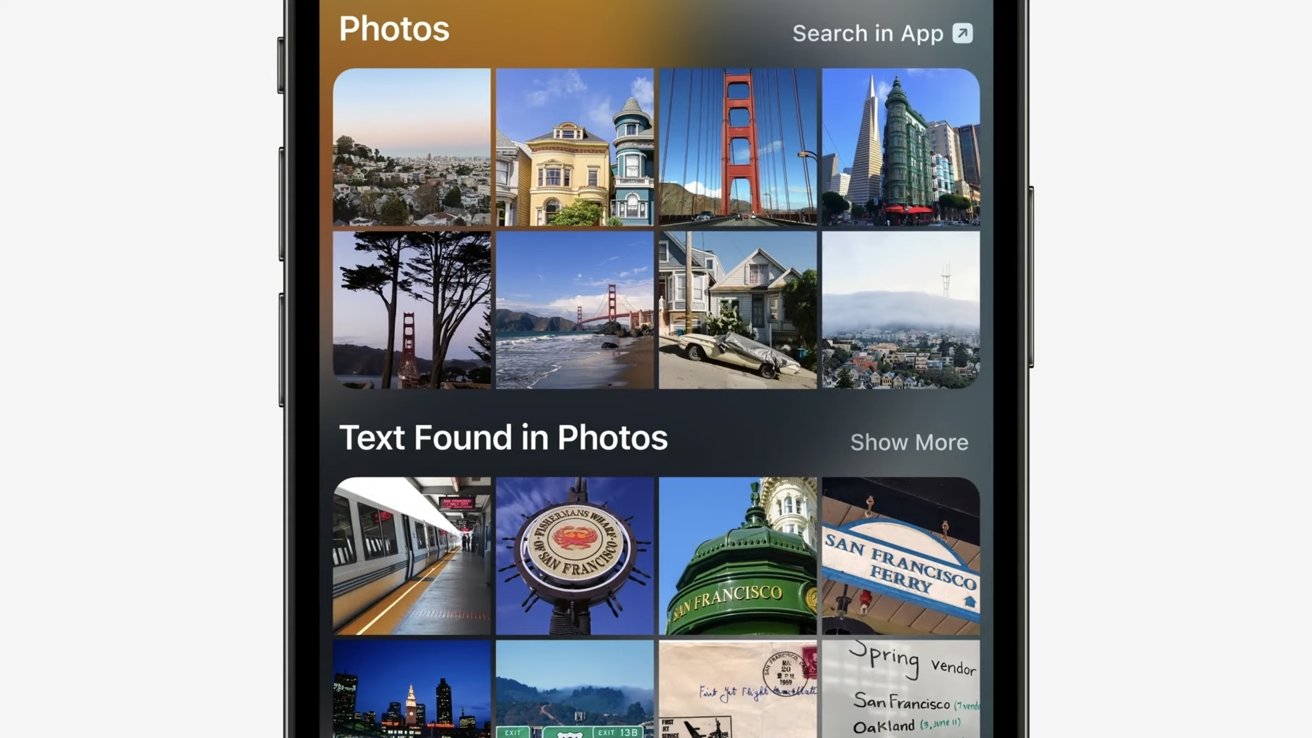

Live Text will also make words captured in a photo searchable with Spotlight. Searching for a word or phrase will reveal photo results which users can tap to jump directly to that photo in their library.

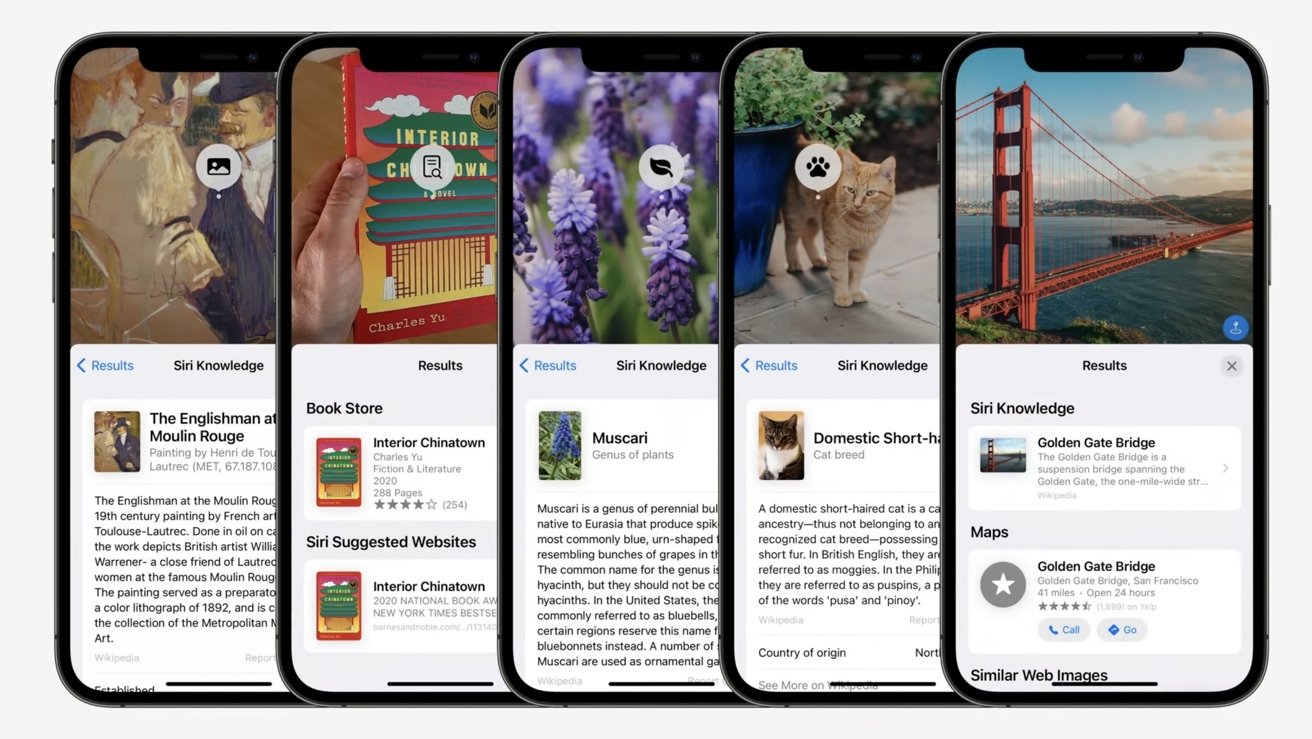

In addition to text features, Apple has incorporated a look-up feature for objects and scenes. When pointing the camera or viewing a photo of art, books, nature, landmarks, and pets, the device will display relevant information with links to learn more.

Live Text will recognize seven languages at launch: English, Chinese, French, Italian, German, Spanish, and Portuguese. The feature also requires the A12 Bionic chip or newer, which debuted in the iPhone XS.

Stephen Robles

9 Comments

It would be great if one could search text from photos in one’s library.

I've been using a third party app on my Mac called TextSniper to capture text from images. It's great to have Live Text since it will work on all my Apple devices. Looking forward to it!