Apple's child safety initiatives explained with Jason Aten on the AppleInsider Podcast

On this special episode of the AppleInsider Podcast, guest Jason Aten joins us for a detailed explanation of Apple's new Child Safety initiatives, including sensitive photos in Messages, Siri and Search, and CSAM detection in iCloud Photos.

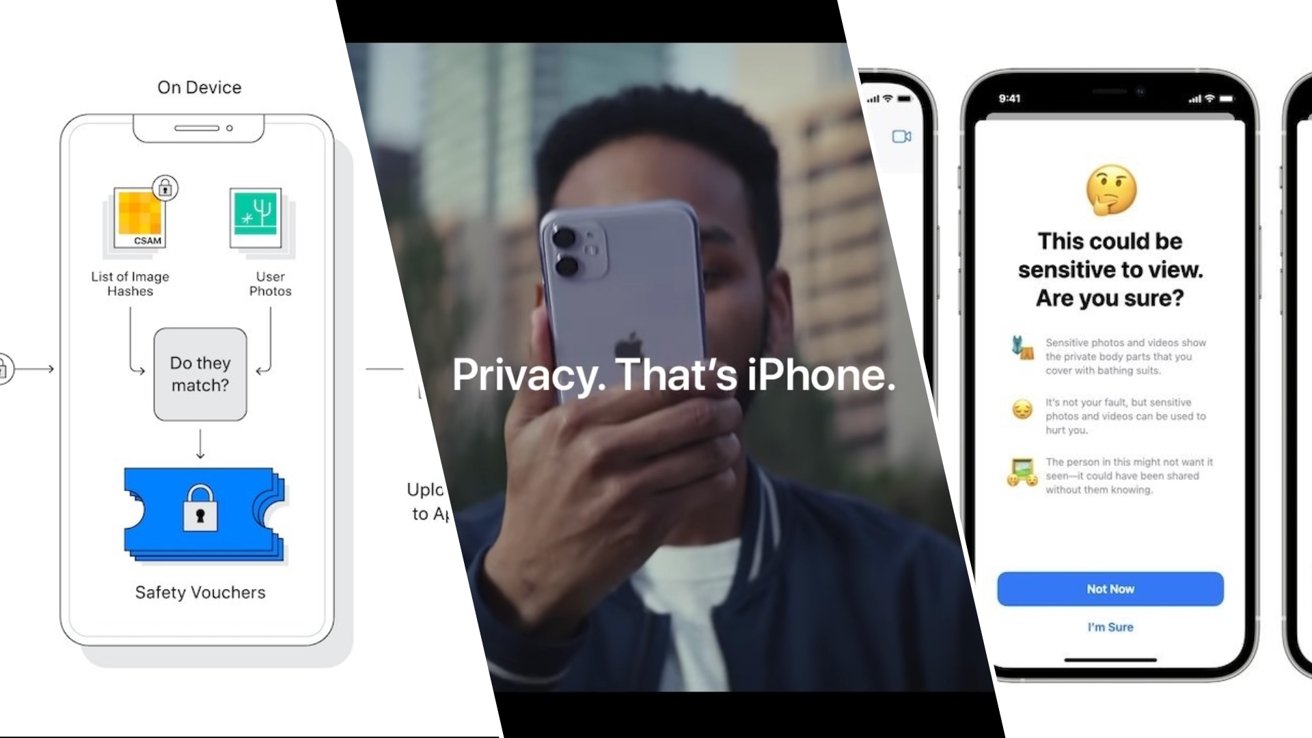

Apple announced a suite of new tools specifically to protect children and reduce the spread of child sexual abuse material (CSAM) online. These tools were met with backlash as some details were left out of the original documentation Apple provided.

These child safety measures can be understood in three parts. First, Siri and Search on Apple devices running iOS 15, due later in 2021, will surface resources and organizations for victims of abuse and for those who may be searching for CSAM online.

Communication safety in Messages is the second part of this initiative. It aims to detect explicit and sensitive content being sent specifically in the Messages app. Users aged 17 and under who are part of an iCloud Family group and attempt to send this content will be shown a warning about the nature of the media. For children aged 12 and under, a notification will also be sent to parents that a sensitive piece of media has been communicated.

The same occurs when a photo or video is received: the image is obscured, and a full-screen notice is shown when the user attempts to view it. A notification is also sent to the recipient's parents if the user is aged 12 and under.

This safety measure works in both iMessage conversations and SMS texts because it is a feature of the Messages app. Devices running iOS and iPadOS 15 will use on-device machine learning to detect sensitive content in the Messages app alone. No images or media will be viewable by Apple or any outside organizations.
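The age rules above can be summarized as a small decision function. This is a hypothetical sketch, not Apple's code: the class, field, and action names are invented for illustration, `looks_explicit` stands in for the on-device machine learning result, and `child_proceeds` models the child's choice, described later in the episode, to view or send the photo anyway after the warning. The `feature_enabled_by_parent` flag reflects that the feature is optional for families.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ChildAccount:
    """Hypothetical model of a child account in an iCloud Family group."""
    age: int
    in_family_group: bool
    feature_enabled_by_parent: bool  # communication safety is optional


def communication_safety_actions(
    account: ChildAccount, looks_explicit: bool, child_proceeds: bool
) -> List[str]:
    """Return the (hypothetical) actions Messages takes for one photo.

    `looks_explicit` stands in for the on-device machine learning result, and
    `child_proceeds` is the child's choice to view or send the photo anyway
    after seeing the warning. Nothing here is reported to Apple.
    """
    if not looks_explicit:
        return []
    if not (account.in_family_group and account.feature_enabled_by_parent):
        return []
    if account.age > 17:
        return []
    actions = ["blur_and_warn"]
    # Parents are only notified for children 12 and under who proceed anyway.
    if account.age <= 12 and child_proceeds:
        actions.append("notify_parents")
    return actions


print(communication_safety_actions(ChildAccount(11, True, True), True, True))
# ['blur_and_warn', 'notify_parents']
print(communication_safety_actions(ChildAccount(15, True, True), True, True))
# ['blur_and_warn']
```

The 13 to 17 age band gets the warning but never the parental notification, which is the split Stephen and Jason discuss later in the episode.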

CSAM detection in iCloud Photos has been the most controversial feature in Apple's announcement. Partnering with the National Center for Missing and Exploited Children (NCMEC), Apple has created an elaborate cryptographic method of hashing the database of CSAM content from NCMEC.

This obscured database of hashes provided by NCMEC will be on-device. If a user chooses to utilize iCloud Photo Library, any images that directly match the hashed content from the NCMEC database will be flagged.

Once a certain threshold of flagged images is reached, a human reviewer from Apple will check whether the flagged images are in fact CSAM. Should that be confirmed, NCMEC will be notified.
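The order of operations described above (match only photos headed for iCloud Photos, count matches, and hand off to human review once a threshold is reached) can be sketched as follows. This is a toy model under loud assumptions: Apple's real system wraps each result in an encrypted safety voucher, designed so that individual matches below the threshold cannot be read, and none of that cryptography appears here. The hash strings, class and method names, and the threshold of 3 are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Hypothetical value: Apple has not published the real threshold.
REVIEW_THRESHOLD = 3


@dataclass
class PhotoLibrary:
    """Toy model of the matching flow, with all of the cryptography removed."""
    icloud_photos_enabled: bool
    known_hashes: Set[str]  # stand-in for the on-device database of NCMEC hashes
    flagged: List[str] = field(default_factory=list)

    def before_upload(self, photo_id: str, photo_hash: str) -> None:
        """Check one photo that is about to be uploaded to iCloud Photos."""
        if not self.icloud_photos_enabled:
            return  # nothing is uploaded, so nothing is matched
        if photo_hash in self.known_hashes:
            self.flagged.append(photo_id)

    def ready_for_human_review(self) -> bool:
        """Nothing is surfaced for review until the threshold is reached."""
        return len(self.flagged) >= REVIEW_THRESHOLD


library = PhotoLibrary(icloud_photos_enabled=True, known_hashes={"a1", "b2", "c3"})
for photo_id, photo_hash in [("p1", "a1"), ("p2", "zz"), ("p3", "b2")]:
    library.before_upload(photo_id, photo_hash)
print(library.flagged, library.ready_for_human_review())  # ['p1', 'p3'] False
```

Two matches stay below the hypothetical threshold, so nothing is surfaced; a third match would flip `ready_for_human_review()` to `True`.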

In this special episode of the AppleInsider Podcast, your hosts go into great detail on these new features and the privacy implications raised by the system. We also cover the additional FAQ document Apple released Monday.

Apple's Child Safety initiative interview transcript

Stephen Robles: Welcome to the AppleInsider podcast. This is your host, Stephen Robles, and today this is a special interview episode as we're going to cover Apple's new child safety measures that were announced last week. It was kind of late in the week, didn't make the show for the Friday episode, but I think there's enough material here to discuss this for a full episode and joining me so we can explain this in depth and give our thoughts on it is special guest Jason Aten who's been on the show before. Jason, thanks so much for joining me today.

Jason Aten: Absolutely. Thanks for having me.

Stephen Robles: So, so much hullabaloo. I don't know a better word right now to describe this, but Apple announced these new child safety measures, and we're going to go into detail on the three aspects, 'cause there are really three different features that are part of this.

This wasn't, you know, any kind of like video thing; they didn't do any interviews. It was really just a link on their website and then PDFs talking about it. And then just a flurry of questions, many conversations on Twitter. Me and you were talking about it. We actually were in a group tweet thread with, like, Nilay Patel and Rene Ritchie, because it was just so much.

First of all, I think the announcement of this could have gone a lot better. Even today, as we record, there was an FAQ PDF that Apple released to kind of supplement and answer some of the questions that people had. I really feel like it would have been beneficial to have that on day one as well.

Jason Aten: Yeah, definitely.

Stephen Robles: So let's talk about the three features. I'm going to go in the order of least controversial to most and hopefully that's also the same order as easiest to explain to most difficult.

Jason Aten: I think you're right. I think that the least controversial of these things are pretty easy for people to understand. And then it just starts to sort of go downhill from there.

Stephen Robles: Exactly. So the first feature that Apple announced is child safety in Siri and Search on the iPhone, iPad, and I presume Mac as well. I think it says so in the footnote. You know, this is all part of the new operating systems that are coming out in the fall: iOS 15, macOS Monterey.

And the first feature is in Siri and Search on your devices, for when users search for anything related to CSAM. If you've seen that acronym around in the last week, CSAM, I'll explain it exactly once and then just refer to it as CSAM going forward: it's child sexual abuse material.

And so this is very sensitive material, obviously nefarious in nature. And if anyone searches or performs search queries for material like that, there will actually be a warning on the device that explains that this topic is harmful and problematic to the user who is searching for it. And so that's one part of the Siri and Search feature.

And then the other one is if someone is a victim of these kinds of things or exploitation, that Siri and search will provide resources where a child or someone who knows a child can report these things and get resources on where to seek help. Does that sound like I covered that, Jason?

Jason Aten: Yeah, and I think the one thing I would add is, I think you sort of said this, but just to expand on it slightly more: if you are someone who is searching for something that would fit that category, it will both warn you that it's illegal and give you a link to get help. Like, it's not just trying to say, 'Hey, you're doing something wrong.' It's trying to say, 'We understand that there are people who might be struggling with this, so click here,' and there are anonymous helplines, that sort of thing, for adults who might be having what it calls at-risk thoughts and behavior.

Stephen Robles: Right. And I think there's contact information and information about organizations that could help, you know, whether that's counseling, things like that. So that's Siri and search.

That's kind of that whole feature. That's pretty simple. I guess we could just kind of share our feelings about it now. I feel like that's reasonable. I can't see anything here that anyone would question; there's no bad aspect to this. I mean, do you feel the same way?

Jason Aten: I think you're right. And I think though, this is a weird thing, because like, as a journalist and somebody who writes about this, you always want to like experience these things firsthand, just so you can see how they work.

But this is something I don't want to experience firsthand. I do believe that Google does the same thing, though. For example, if you type a search into the Google search engine that it detects as being in this category, it will do the same thing: warn you and also provide links to info. So at this point, Apple is simply being consistent with what the search ecosystem is already doing.

So it's not very controversial. It's just trying to incorporate that into Siri.

Stephen Robles: Right. And even in the more controversial aspect of all this, which is the CSAM detection in iCloud Photo Library, we'll talk about how other companies are doing this and have been doing it for years, honestly. People don't realize that.

We'll get to that in a moment. The second feature that Apple announced, they call it "Communication Safety in Messages." This feature applies to child iCloud accounts that are part of an iCloud family. And it refers to images that are sent and received in iMessage, in the Messages app. And I believe it also works for SMS texts sent in the Messages app. Is that accurate?

Jason Aten: Yeah. So it's not just a feature of iMessage, it's an actual feature of the app.

Stephen Robles: Right, of the Messages app. And what this feature does is use a technology that is different from the CSAM detection in iCloud Photo Library, which we'll talk about in a moment: it uses machine learning to identify sexually explicit photos that are being sent and received by a user.

And if the Messages app detects that a child has received an explicit photo, the photo will actually be obscured or blurred out and there'll be a warning. The child can actually tap on the photo, and a message pops up that says, this looks like sensitive material, do you still want to view it? If they say yes, a second message appears saying that their parents will be notified.

That part only applies to children 12 years old and younger. If the child is 13 to 17 years old in an iCloud family, a notification is not sent to parents. So we can talk about that in a minute. But this technology is actually using machine learning to analyze the actual photo sent. And this is a distinction that will play into the last part of the conversation, about CSAM detection in iCloud Photo Library: this feature is detecting what the image looks like, and it detects it when it is received.

So if a child receives an explicit image, or if a child is trying to send an explicit image to anyone else, the same warnings will appear that this looks like an explicit image. Are you sure you want to do this? And a notification will be sent to the parents in your iCloud family. Again, if you are 12 years old and under. Does it sound like I got everything there?

Jason Aten: I do, I think you did get everything. And I actually haven't heard anyone really express concern about this particular feature. I do just want to say that it's sort of the next level closer to what we're going to talk about in a minute, in that as a parent with two daughters who both have iPhones, I'm like, "Yes. Please!" Right? So much.

But when you combine it with the other things that were introduced at the same time, it does sort of start to make you wonder. This is another example of Apple starting to insert itself onto your device, in a very parental way. So our daughters, in addition to having iPhones, both have AirPods, right?

And AirPods will give them a warning if they've been listening to their music too loud. Good thing. Like absolutely like a good thing for your phone to do, because it's trying to prevent you from damaging your ears without you even really realizing it's happening. But it does start to make you realize, like, my phone is keeping an eye on me. It's starting to parent me.

But as a parent, I'm really glad that tool exists. Still, especially when you think about what comes next, I gotta be honest, it's like, huh, Apple is actually using machine learning to evaluate photos that are sent. And obviously these are being sent to young children, so I think we should do everything that we can to protect them.

I just did want to raise that. Even though I haven't heard any criticism about this particular thing, maybe because it was so overshadowed by everything else that came, it is an interesting thought that it's inserting itself in this way.

Stephen Robles: Right. Now, two other things I forgot to mention. One, this is optional. You don't have to enable communication safety in your iCloud family if you choose not to, which, again, is a difference between opt-in and opt-out compared with the latter feature that we'll talk about. And two, all of the machine learning recognition of photos is happening on device.

And Apple is trying to be very clear about what's happening on your device, what's happening in the cloud or on their servers. And this is one of those things happening directly on device. No information is sent to Apple, Apple can't see these photos that are in your iMessage or any messages conversation on your phone.

They're totally obscured from Apple. They can't see anything there. They're not notified of anything that is being sent. This is strictly happening on device for the sender and the recipient. And it happens twice. So if an explicit photo is sent, the sender gets a notification that they're sending something, and so does the recipient, but that recognition has happened on each individual device separately.

So it's not like it went to the cloud or in the iMessage and then Apple recognized it was explicit and it pushes it down. No, it happens on each device.

Jason Aten: Yeah. I was just going to also say, and I think you brought up an important point, that the only notification that happens in this particular case is to the parent, when a notification happens at all. There's no automatic reporting to any other entity, whether that's Apple or anyone else.

Stephen Robles: Right. And this does not break the end-to-end encryption built into iMessage. That was, again, something Apple wanted to make clear: iMessage conversations are still fully encrypted. My only comment on this feature is that, as a parent, I wish I could actually choose the age up to which the forced notification to parents is enabled.

You know, the difference between 12 and 13 is very different for every child. You know, if my kid still has to ask to buy an app in the App Store, I would also like to get a notification if an explicit image is sent to them or they try to send one. So my only ask would actually be for a little more oversight, I guess: allow me to choose a higher age for this to continue to be in effect, at least the forced notification to parents.

Jason Aten: Yeah. I would agree with you on that. 'Cause, like, we have a 13-year-old and an 11-year-old, but I feel the same way about what the boundaries should be for the two of them. The difference in their age doesn't really dictate that.

When you said that, though, it actually made me think that, I guess maybe this should be viewed as a fourth layer in that protection, because for example, on our kids' iPhones they can only contact people we've approved for them to contact anyway. And so we would be having a very different conversation. Like people we've approved them to contact have been sending them this kind of stuff? And so I'm not as worried about the random person that they meet at school sending them something because that person has to already be in their contacts.

So I do feel like, in that regard, it's a different level of that protection. It's not the first line of that protection.

Stephen Robles: That's a good point. So this was not as controversial, but I'll say one more time as we go into the last section: the distinction is that this technology, communication safety in Messages, is using machine learning to analyze the content of a photo.

Apple is not matching these photos to some database. Every photo sent that possibly is explicit is being analyzed for explicit material on its own. There's no database or hashing or anything like that. And so that's a distinction between communication safety in Messages and the last feature, which is CSAM detection in iCloud Photos.

Now there's a lot of complicated technology behind this last system, and I'm going to do my best to just try and explain it at a high level. Just know it's using cryptography and all this kind of stuff, but basically Apple is working with an organization called NCMEC.

Jason Aten: National Center for Missing and Exploited Children.

Stephen Robles: Thank you. So NCMEC has a database of images, or material, that is known to be abuse material, that they know is explicitly nefarious and bad and evil and all that kind of stuff. And they have this database so that NCMEC can recognize when these photos are out on the internet.

And so Apple has partnered with them and come up with a complicated cryptographic hashing scheme. In iOS 15 later this year (I don't think it's going to come out exactly when iOS 15 launches; it seems like it'll be a later update), this database of material from NCMEC is going to be translated into an unreadable hash. That's a cryptography term: it will not be images put on your devices, but this database of hash information. And this hash information is going to be on all devices running iOS and iPadOS 15.

Now, it's not like someone could go into the device, even by accident, and in any way see any of the images that NCMEC is using in its database. There aren't actual images on your device, so I just want to make that clear, but this database of hashes, or cryptographically obscured material, will be on the device for the purpose of matching it with other material. Does it sound like I've gotten it so far?

Jason Aten: Yes. And the only thing I wasn't clear on from what you said so far (I was actually just looking at my notes) is that I believe this won't only be on your iPhone and iPad. I do think it applies in Monterey as well.

Stephen Robles: Okay. Yeah. There's all these footnotes about, you know, where this stuff is going to be. It's a little obscure, but these updates will be in iPadOS, iOS, watchOS, and macOS Monterey. So it does seem like it will be everywhere, but again, it's one of these things where that asterisk is at like the very bottom of the page.

And it's like, does this apply to all three? This does apply to one or two of these features. So that's where the NCMEC database of images that is cryptographically hashed and obscured will be on device. Now from there, what if a user uses iCloud Photo Library? This was a question that came up last week, and I'm actually kind of glad we're doing this early this week, after Apple has specified some of the information that people had questions about.

If you use iCloud Photo Library, there will be a matching, on device, of the hashed material from NCMEC against images that are about to hit iCloud Photo Library. It will check whether the hash pattern, or whatever you want to call it, matches the hashes of your actual photos. If it matches, that will create a voucher to say, "There might be some sensitive material or CSAM material on this person's device being uploaded to iCloud Photo Library."

If that happens once, nothing happens; it could be a false positive. It could be that it just didn't recognize it properly. And so if it's just once, nothing happens. Now, Apple has said there is some threshold: if it happens a certain number of times, Apple will be notified that it's hit that threshold. Unfortunately, and correct me if I'm wrong, Apple has not made that threshold clear.

Jason Aten: Yeah. And that's, I think, for obvious reasons, because hypothetically, if you knew that 50 was going to be the threshold and you're someone with bad intentions, you would just have 49. I don't know. I think that there's a reason that Apple has not said what that threshold is in the document. There's some expanded technical documentation that they released alongside the FAQ, which I know that we'll get to, that does go a little bit more in depth in terms of what they call "the intersection." So it's kind of like, they break this into a bunch of parts, and if enough of the parts are positive, then it can be decrypted. So it's not just Apple sitting there, like, oh, we're looking to see how many of these come in.

It's actually a function of the cryptographic piece of this: enough of the parts have to have matched in order for the encryption key to actually even unlock it. If that makes sense.
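Jason's description, where enough parts have to match before the key unlocks, is the idea behind threshold secret sharing. The textbook construction is Shamir's secret sharing, sketched below as an illustration of the concept only: this is not Apple's implementation, and the prime, the threshold of 3, the share count, and the function names are arbitrary choices for the example. A key is hidden as the constant term of a random polynomial of degree t - 1; any t shares recover it by Lagrange interpolation, while fewer than t reveal nothing about it.

```python
import random
from typing import List, Tuple

# A Mersenne prime (2^127 - 1); all arithmetic happens modulo this prime.
PRIME = 2**127 - 1

Share = Tuple[int, int]


def make_shares(secret: int, threshold: int, count: int, rng: random.Random) -> List[Share]:
    """Split `secret` into `count` shares, any `threshold` of which recover it."""
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    coeffs = [secret] + [rng.randrange(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, count + 1):
        # Evaluate the polynomial at x with Horner's method.
        y = 0
        for c in reversed(coeffs):
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares: List[Share]) -> int:
    """Lagrange-interpolate the polynomial at x = 0 to get the constant term."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


rng = random.Random(2021)  # fixed seed for a repeatable demo; use `secrets` for real keys
key = 123456789
shares = make_shares(key, threshold=3, count=10, rng=rng)
print(recover_secret(shares[:3]) == key)   # any 3 shares: True
print(recover_secret(shares[5:8]) == key)  # a different 3 shares: True
```

With only two of the ten shares, the same interpolation produces an unrelated number, which is the "not enough parts matched, nothing unlocks" behavior Jason describes. `pow(den, -1, PRIME)` needs Python 3.8 or later.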

Stephen Robles: Yes. And even if there's a number of matches below the unknown threshold, Apple cannot see any of the images on your device or in your iCloud Photo Library.

No image can be seen or unobscured until it passes that threshold. Now, once that threshold is passed, let's say it's 20 (that's unknown, that's not the actual threshold, but just for the sake of argument, let's say that there have been 20 matches between the NCMEC hashed database and actual photos on your device), Apple will be notified, and that triggers a human review, seemingly by an Apple employee, of whether these images actually match. Once Apple reviews it by a human, then it could go to a law enforcement notification that this person has this amount of material on their device, but there is a human review. And as far as I know, that human review is done by Apple, not another organization.

Jason Aten: Yeah. And I do want to just add a little bit of color to a couple of those. The first one: you mentioned something early on about the evaluation process, if it appears that there is sexually explicit material. It's actually even more restrictive than that. It's only if there is actual material that matches the known database.

So, hypothetically, again (it feels weird to say, and I'm not trying to justify this), someone could have an infinite amount of material that they should not have, but if it doesn't match this known database, then the system wouldn't be detecting it. It's only detecting things that are in the actual database.

That was the first thing. The second thing is, according to the conversations that I've had with Apple, it is a human employee of Apple that reviews it. And that's partially because of a court precedent (I think the case was Ackerman) which prevents automatic review and submission to NCMEC.

So Apple wouldn't be sending it to law enforcement anyway; Apple would be reporting it to NCMEC, who then would report it to law enforcement. But there cannot be automated reporting to NCMEC. A human being actually has to look to verify it first, before it goes to them. But according to my understanding, it is an Apple employee, and I actually asked, like, so how is that possible?

Because isn't it illegal to look? Isn't that the point, that it's illegal to look at this stuff? And I'm sure Apple's lawyers have figured this out. Whatever system they have set up, it is an Apple employee that would then review, again, only the images that were flagged. They don't just, like, start looking through your library. And actually, what I was told is that it's a visual derivative. It's not even the actual image. It's enough of the image to tell if it matches, and then it's like a yes or no thing. If it's a yes, it gets reported. And if it's a no, nothing ever happens.

Stephen Robles: Right. And so, again, to your point, these are very specific images from the NCMEC database.

And as far as I can understand the hashing process, if a photo, let's say from the NCMEC database, was cropped or color adjusted, maybe to black and white, the hashing would still recognize it, if it's a cropping or color adjustment of the exact same photo. But if, for instance, the NCMEC database had a photo of a water bottle from this angle and you took a second photo from a different angle, it would not match those hashes. Does that make sense?

Jason Aten: Right. So it's not looking for either the same subject or the same environment. It's actually matching actual content. Like, is this a duplication of this exact file?

Stephen Robles: Right, which is different. And this is the distinction I was trying to make earlier with the Communication Safety in Messages feature that Apple is doing. 'Cause that is image content recognition using machine learning. This, CSAM detection in iCloud Photos, is not; this is exact hash matching of imagery from the NCMEC database.

Not some kind of guessing of, "Is this the same object or the same person?" It is strictly matching the database exactly.

Jason Aten: Correct. Yep.
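Stephen's point in this exchange, that a cropped or color-adjusted copy of a database photo still matches while a new photo of the same subject does not, is how a perceptual hash behaves: visually similar images get identical or nearby hashes. Apple's system uses its own neural-network-based hash (which Apple calls NeuralHash), and it is not reproduced here. The sketch below swaps in the much simpler, well-known average hash on a tiny hand-made 4x4 grayscale grid; the pixel values, the 2-bit distance cutoff, and the function names are made up for illustration.

```python
from typing import Iterable, List

Image = List[List[int]]  # grayscale pixel values, 0-255


def average_hash(pixels: Image) -> int:
    """Toy perceptual hash: one bit per pixel, set when the pixel is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return sum(1 << i for i, p in enumerate(flat) if p > mean)


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def matches(photo_hash: int, known: Iterable[int], max_distance: int = 2) -> bool:
    """A photo 'matches' if its hash is within max_distance bits of any known hash."""
    return any(hamming(photo_hash, k) <= max_distance for k in known)


original = [
    [10, 200, 30, 220],
    [40, 180, 60, 210],
    [20, 190, 50, 230],
    [15, 205, 35, 215],
]
# A brightened copy: every pixel shifts by the same amount, so the light/dark pattern survives.
brighter = [[p + 20 for p in row] for row in original]
# A different picture: neighboring bright and dark columns are swapped.
different = [
    [200, 10, 220, 30],
    [180, 40, 210, 60],
    [190, 20, 230, 50],
    [205, 15, 215, 35],
]

known = {average_hash(original)}
print(matches(average_hash(brighter), known))   # True
print(matches(average_hash(different), known))  # False
```

The adjusted copy lands on exactly the same hash, while the different picture differs in all 16 bits, so it is nowhere near the cutoff.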

Stephen Robles: So that's basically the system. A couple of things I wanted to state. One point of confusion was: if you turn off iCloud Photo Library, is this hash matching between the NCMEC database and the photos on your iPhone still going to happen?

Nilay Patel was very vocal in saying that Apple did not explicitly address this originally, but in the FAQ document that Apple released Monday morning of this week, they do say that if you disable iCloud Photo Library, no matching of photos on your device to the NCMEC database will happen. It still seems as though the NCMEC hashed database will be on device, but Apple is saying that the process of matching the database to images is not going to occur if iCloud Photo Library is disabled on your iPhone, iPad, or Mac.

Jason Aten: Yeah. There's one thing that's still a little bit unclear. I read through the FAQ this morning and tweeted some of the highlights. You know, one of the first things it says is, "By design, this feature only applies to photos that the user chooses to upload to iCloud Photos."

It does not work for users who have iCloud Photos disabled. The slightly gray area is that it does not say that your images won't be hashed, right? It just says that they won't be matched to the database and given a safety voucher. I'm willing to give, actually, I don't know if I'm willing to say this, I'm willing to give Apple the benefit of the doubt that we might be trying really hard to read much more deeply into this than necessary, but to be clear, what it says is that the feature only applies to photos that the user chooses to upload to iCloud Photos.

In theory, if you do turn off iCloud Photos, which is a setting where your entire photo library is uploaded to iCloud, this matching process does not happen. What's maybe still a tiny bit unclear is: do your images still get hashed on your device? Because, to clarify for anyone who's still wondering about the hashing, the same hashing process that was applied to the CSAM images also runs on your device: Apple hashes your images and literally just looks to see whether the two numbers match. If they do, this whole thing goes forward. If they don't, then nothing happens. What is not a hundred percent clear is: are they still hashing your images, and just nothing happens with them because they stay on your device?

I don't know. And I haven't had a chance to ask.

Stephen Robles: Right. And just one concern, like I had initially before I understood the whole system, you know, you might be hearing this and you know, what happens if I take a picture of my kids in the bath or whatever, which is something a lot of parents do. Or a thought I had is there's been times where one of my kids gets like a bug bite and it looks weird and I'll take a picture of it and maybe send it to a doctor or a family member.

And the concern was like, is that somehow going to be flagged? And no, because the difference is Apple is not doing some kind of image and subject recognition, or "What is this photo?" It is literally matching the exact photos from the NCMEC database to photos in your iCloud Photo Library. And this does not apply to photos that you would, say, send via iMessage to a family member or friend, or photos you would email.

This is strictly on the photos in your iCloud Photo Library. The hash matching takes place right before they upload to iCloud, if you have that enabled.

Jason Aten: Yeah. And so if you take a photo right now of your child, because they had a bug bite, it's literally impossible for that photo to have accidentally been put into the NCMEC database, because the NCMEC database is made up of images from the past, right?

It's literally images that have been, essentially, I don't want to say confiscated, because with digital things it's hard to say confiscated since they still exist out there, but obtained by law enforcement. When they have, for example, prosecuted someone for distributing this type of material, they take that material and put it in the database so that they can then identify it in other places.

Stephen Robles: Right. So finally, last piece of just information before maybe we start sharing our personal feelings about it. This CSAM image detection has been used by a lot of other companies before Apple. Google uses it. Facebook uses it.

Google, on their website, also has a child safety article, and they say that since 2008 they've been using a hashing technology to look for this kind of imagery and identify it in their search, in YouTube content, all that kind of stuff, images and videos. And then in 2013, they even expanded it.

And Facebook uses this as well for content posted to their sites. So this CSAM technology, looking for this kind of content, is not unique to Apple and has been used for years by other companies. And I think this kind of content is one of those things where we can at least universally get behind the idea that this is bad content. You know, the kind of stuff that NCMEC has in their database is reprehensible. We're talking about children, we're talking about explicit stuff. Like, this is really bad. And that part can be agreed on.

The question became, and some people were raising this later, and Apple answered this in their FAQ was, "What happens if a government of another country or our government here in the US wants to add different kinds of imagery to the database of hashes that are going to be on device? What happens if the government in China wants to have certain political imagery or have certain symbolism flagged and have that as part of this matching system where the Chinese government could be notified if someone has these photos on their device?"

And Apple, in their FAQ that was posted earlier this week, says "Apple will refuse any such demands." That's a quote from their FAQ article. They go on, "We have faced demands to build and deploy government-mandated changes that degrade the privacy of users before, and have steadfastly refused those demands. We will continue to refuse them in the future. Let us be clear, this technology is limited to detecting CSAM stored in iCloud and we will not accede to any government's request to expand it."

So that was from the FAQ. So I feel like they made it clear again. But I feel like it still starts to creak the door open a little bit, because this system exists.

Jason Aten: And I do think one piece of this that I think is worth mentioning is, because you talked about a couple of the other companies and how that they're dealing with this Facebook famously reports, 20 million reports of CSAM material every year, because of, because Facebook messages is not encrypted, right. They can literally see what Facebook, I guess they have a feature where you can turn it on in Instagram messages, that kind of thing.

So other companies are, and I think this is good that they're doing that. I'm not, I'm not criticizing Facebook. I've criticized Facebook plenty, but not for this particular thing. But Apple confirmed to me and to other people that it has actually been doing CSAM detection, just not in your iCloud Photo Library in the past. Apple doesn't report nearly as many.

I believe that it has been in the past limited to email when it was sent, uh, Gmail does this as well, that if people are sending this type of content via email at the same databases used to detect the trafficking of this kind of material. And while this is the part that was a little bit fuzzy, I also believe it was somewhat involved with things that were stored in iCloud, but not in an iCloud photo library. Right? Cause you can use iCloud is just a cloud storage thing.

So this isn't even new necessarily for Apple. What's new is how they're doing it on device. For the government thing, Apple's response does sort of seem to be, and, and this is something that Apple even said, I'm paraphrasing, of course, but basically, We could copy all the data off your phone, and have a backdoor to your encryption and send it to the government if we want it to already, but we're not. And we've told you we wouldn't and you know that we aren't.'

So it's all just a matter of policy at this point: since we've never done it in the past, maybe give us the benefit of the doubt that we don't intend to do it now. Which I guess depends on where you stand on Apple.

Stephen Robles: It's how much you trust Apple, basically, right? Your level of trust in the company.

Jason Aten: Right. And at this point this is only in the United States. So the big conversation has been about what happens if it expands. What about China? Well, they're not doing this in China at this point. And I believe the reason they're using the NCMEC database is that it's an existing thing, and Apple doesn't have the ability to add images or hashes to that database; they literally get it from NCMEC. So if a government came along and said, "Please stick this image in the database," they'd say, "We can't, because we don't put anything in the database. We literally just get a string of hashes, put them on phones, and compare the stuff."

That doesn't mean I think Apple's off the hook. My biggest issue with this is not necessarily that the thing Apple's doing is bad, because I think we can all get behind eradicating this kind of content from the face of the earth. I just think they completely botched the entire rollout, and that's what's caused the issue.
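To make the matching step Jason describes a little more concrete, here is a deliberately simplified Python sketch: a list of known hashes arrives from a third party (NCMEC, in Apple's case), each photo is hashed, the matches are counted, and an account is flagged only when the count reaches a threshold. This is not Apple's implementation. Apple's published design uses a perceptual hash (NeuralHash) together with private set intersection and threshold secret sharing, so individual match results stay hidden below the threshold. The sketch swaps in SHA-256 from Python's standard library, which only matches byte-identical files, and the function names, sample data, and threshold of 20 (the hypothetical figure used in this conversation) are illustrative assumptions.

```python
import hashlib


# Hypothetical stand-in for a perceptual image hash. SHA-256 only matches
# byte-identical files; a perceptual hash is meant to survive resizing and
# recompression, which this toy does not attempt.
def image_hash(image_bytes: bytes) -> str:
    return hashlib.sha256(image_bytes).hexdigest()


def count_matches(library: list[bytes], known_hashes: set[str]) -> int:
    """Count photos whose hash appears in the third-party hash list."""
    return sum(1 for image in library if image_hash(image) in known_hashes)


def should_flag(library: list[bytes], known_hashes: set[str], threshold: int) -> bool:
    """Flag an account only once the number of matches reaches the threshold."""
    return count_matches(library, known_hashes) >= threshold


# The provider ships only hashes, never the images themselves.
known_hashes = {image_hash(b"known-image-1"), image_hash(b"known-image-2")}
library = [b"vacation-photo", b"known-image-1", b"pet-photo"]

print(count_matches(library, known_hashes))     # 1
print(should_flag(library, known_hashes, 20))   # False
```

Flagging on a count rather than on any single match is the design choice that keeps one accidental collision from affecting an account.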

Stephen Robles: Well, the other trust aspect is that the database is provided by NCMEC, a third-party organization. So not only do you have to trust Apple and what it's doing, but if they're just taking this database from NCMEC blindly, because it's just a bunch of hashes, not even images, then trust also has to be placed in NCMEC.

And I don't know a ton about the organization, but what I've heard recently is that they're extremely trustworthy and do incredible work in this area of trying to eliminate this kind of content. But it does introduce a third party into the Apple ecosystem that we now have to trust as users, because it's their database that's being used.

One other piece of information I want to mention is what Apple stated about false positives, because this might be another emotional concern as you hear about this system. Apple says that the system is very accurate, quote, "with an extremely low error rate of less than one in one trillion account per year."

I don't know how they get that number. I mean, there aren't one trillion accounts, but whatever information they're using, again, the error rate is very, very low, even before it gets to the human review process inside Apple.

Jason Aten: I asked about that as well, and basically what they're saying is that they expect zero, right? Because there are a billion iCloud accounts, it should take a thousand years before there's a false positive. I'm not a statistician, but I think that's what that means. It's not one in a trillion photos that will be detected as a false positive; it's one in a trillion accounts, which means they don't expect any account to exceed that threshold.

I don't know what the threshold is, but say it was 20: you'd have to have 20 false-positive images to reach that threshold and flag the account, and they're saying that's never going to happen. Literally, the response I received from Apple was that one in a trillion means they're confident it's not going to happen.

And if it does happen in the first year, then there's a big problem with the way they designed the cryptography.
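Jason's back-of-the-envelope reading of Apple's figure can be written out as a worked estimate. It assumes, as he does, about one billion iCloud accounts ($N$), and treats Apple's stated "one in one trillion" as a per-account, per-year probability ($p$) of wrongly flagging an account; both numbers come from the conversation, not from independent data, and the constant-rate assumption is a simplification.

$$
\mathbb{E}[\text{falsely flagged accounts per year}] = N \cdot p = 10^{9} \times 10^{-12} = 10^{-3}
$$

$$
\text{expected years until the first false flag} \approx \frac{1}{N p} = \frac{1}{10^{-3}} = 1000
$$

That is where the "thousand years" comes from; the estimate concerns whole accounts crossing the threshold, not individual photos.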

Stephen Robles: So that's the system, though, and those were Apple's clarifications in their FAQ. Now there's been a ton of criticism from all kinds of places. The CEO of WhatsApp, which is part of Facebook, attacked Apple over this as a privacy concern. There's been a petition signed by many. Tim Sweeney, the CEO of Epic Games, has spoken out against this over privacy and all that. I mean, you had some great articles on inc.com, and we'll put links in the show notes, but what is the gist of the argument these opponents are making against what Apple is doing?

Jason Aten: So I think it's helpful to break the criticism into three groups.

The first group is people who maybe don't understand exactly what has happened. Maybe they've read an article and it all just seems like, "Wait a minute, what is Apple doing? I thought Apple was all about privacy." So I think that's the first group of people, and those are probably more in the category of users.

Maybe some people in the media, random people on Twitter. I don't say that derogatorily. I just mean they're not necessarily people who have studied the issue. They just heard about it.

That's the first group. The second group is people like the one you just mentioned, Will Cathcart, who's the CEO of WhatsApp.

The article I wrote was basically, Will Cathcart is criticizing Apple over privacy; does he know he works for Facebook? Right? I don't understand it. Or people like Tim Sweeney, who is literally suing Apple right now, so obviously he wants to make some hay here. Or there was an article in the Washington Post from Reed Albergotti whose headline was something like, "Apple is snooping into your phone to find sexual predators."

So it's these people who clearly have some sort of agenda against Apple, because Tim Sweeney and Will Cathcart are very smart guys. They understand exactly what's happening, and they're just choosing to represent it inaccurately and disingenuously.

And then the third group of people who have criticisms, and I would probably put myself in this category, are people who have genuine privacy concerns. The Electronic Frontier Foundation had a really good article that went through this. The one thing I feel like they missed is that Apple has already been doing some CSAM detection, like we talked about. But I do think it's legitimate to have some of those privacy concerns and to make Apple say on the record what this is. I think that was Nilay's point in the Twitter thread: can I really turn this off? Then say it on the record. Don't just beat around the bush or use generalizations. Those are the people with genuine concerns.

As for that second group, which is what you were describing, it's hard to take them seriously, because it doesn't feel like they're really concerned about the thing itself. They're more concerned about just scoring some points.

Stephen Robles: Yeah. And I'm looking at this Washington Post article right now, and I'll put the link in the show notes. The headline is, "Apple is prying into iPhones to find sexual predators, but privacy activists worry governments could weaponize the feature."

You know, the headline touches on the same concerns Apple is trying to address in the FAQ, the government stuff, but also the "prying into iPhones" part. Again, it's a weird nuance, because the system is very complicated.

You can't boil down the cryptography and the redundancies against false positives into a headline and describe what's going on. But I will say, just emotionally, speaking for myself again: I'm not worried about any kind of match to this content in my iCloud Photos. I don't have any of that, so I'm not worried about that. But it does feel a little weird knowing that this hashed database from NCMEC will just be sitting on all my devices once they update to whatever iOS 15 version Apple distributes. It feels like the first time there's something on my iPhone, from the first time I turn it on, that is not from Apple.

And again, I understand the entire system, and it's not like there's some folder of images on my phone that I can access. It's much more complicated and obscured than that. But it just feels a little weird to me personally. Do you have any concern about that?

Jason Aten: I think to some extent the answer is yes, for a similar reason to when we were talking about the iMessage feature: it feels like Apple is inserting itself somewhere. One of the pieces worth considering is that if you look through that FAQ, Apple specifically said that possession of this particular type of content is illegal and that it is "obligated to report any instances we learn of." But then there's the question: what if you just didn't learn of it?

If you had just left it alone, you wouldn't have learned about it. I do think that because it's Apple, people expect something different. It's not that they don't expect Apple to do something about this type of content. It's just that Apple has always operated differently, right? The weird thing for me was that the day before this broke, so I guess maybe Wednesday or Thursday, I had written an article about Facebook exploring ways to analyze the messages you send in WhatsApp, which are encrypted, so that it could find ways to show you ads in WhatsApp. They're using what's called homomorphic encryption.

And no one even blinks when they read an article like that, because we just expect it from Facebook. Of course they want to read our stuff, because they want to show us ads. Which, by the way, is why it's really mind-blowing that the CEO of WhatsApp decided to stick his nose into this. But anyway, you don't expect that sort of thing from Apple, a company that literally puts up billboards that say, "What happens on your iPhone stays on your iPhone." And again, those who are criticizing Apple over privacy concerns in good faith are not in favor of this type of content. You can be against CSAM and also have an issue with the way Apple handled it.

And I feel like that's the part people are asking about: does this represent a shift in Apple's stance on privacy? And does it open the door to other features in the future that might go further? That said, I want to say one thing about that Washington Post headline, because Apple has definitely taken the stance that they don't want you to think of this as scanning.

Even though it seems like a distinction without much of a difference, Apple defined scanning very specifically. I think of it like the way Apple talks about tracking. Apple defines tracking as collecting people's information and user activity and then sharing it with a third party, and Apple doesn't share things with third parties.

So even though Apple might track your App Store downloads and show you ads based on that, it's not sharing that with any third party, so it doesn't call that tracking. Apple defined scanning as evaluating the images for a match and then producing a result. But because the result is secret, and neither your device nor Apple knows what the result is, they don't want you to think of it as scanning.

I bring that up because anyone who just heard me say that is thinking, "No, they're scanning my phone, right?" None of that matters. It's a very technical and very complicated issue, and even very smart people, when they hear the word scanning or read that someone is matching images on their phone, stop hearing any of the other stuff.
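The "secret result" Jason describes rests, according to Apple's published CSAM Detection technical summary, on threshold secret sharing: roughly, vouchers for matching photos give Apple one share each of an account-level key, and Apple can reconstruct that key only once the number of shares reaches the threshold. The Python sketch below is a textbook Shamir secret sharing toy, not Apple's construction, which also relies on private set intersection and other machinery omitted here. The key value, the threshold of 3, and the function names are hypothetical.

```python
import random

# Arithmetic is done modulo a large prime; 2**127 - 1 is a Mersenne prime.
PRIME = 2**127 - 1


def make_shares(secret: int, threshold: int, count: int,
                rng: random.Random) -> list[tuple[int, int]]:
    """Split `secret` into `count` shares; any `threshold` of them recover it."""
    # Random polynomial of degree threshold - 1 whose constant term is the secret.
    # Coefficients are drawn from 1..PRIME-1 so the polynomial has full degree.
    coeffs = [secret] + [rng.randrange(1, PRIME) for _ in range(threshold - 1)]

    def evaluate(x: int) -> int:
        # Horner's method, reducing modulo PRIME at each step.
        result = 0
        for c in reversed(coeffs):
            result = (result * x + c) % PRIME
        return result

    return [(x, evaluate(x)) for x in range(1, count + 1)]


def recover(shares: list[tuple[int, int]]) -> int:
    """Lagrange-interpolate the shares and evaluate the polynomial at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * (-xj) % PRIME
                den = den * (xi - xj) % PRIME
        # pow(den, -1, PRIME) computes the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


# Hypothetical: an account key split so that 3 matching "vouchers" are needed.
account_key = 123456789
shares = make_shares(account_key, threshold=3, count=5, rng=random.Random(0))

print(recover(shares[:3]) == account_key)   # True: threshold reached
print(recover(shares[:2]) == account_key)   # False: below threshold
```

With fewer shares than the threshold, interpolation lands on the wrong value, which is the property that keeps results below the threshold unreadable in this toy model.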

Stephen Robles: Right. And Rene Ritchie, in our tweet conversation, was very specific about saying they're not scanning. Obviously that's the same line Apple wants us to hear. Still, it's hard not to feel like it is, like you were saying.

A piece of information I thought was interesting, and this was actually from Gruber's article, which I'll link as well: to date, Apple has already reported 265 cases of CSAM to NCMEC, I guess using other methods; I'm not sure how. So Apple reported 265 cases to NCMEC, while Facebook, using its CSAM technology, has reported 20 million.

So it's not like there have never been reports sent to NCMEC about people's content. It's just that now it's at the forefront, you know? No one talked about CSAM before last week. Most people had probably never even heard of it, unless they work in that field, at an organization like NCMEC.

And so now everyone has seen it, knows it, and you know, there's this emotion of I didn't know about it before. Do I need to know about it now? And should I be concerned about it? And you know, I think that's what people's initial reaction is. This is new information for a lot of people and there's that emotional reaction.

Now this quote is from Erik, and I'm going to try to pronounce his last name, Neuenschwander, I think that's right, Apple's chief privacy engineer. He said, "If you're storing a collection of CSAM material, yes, this is bad for you. But for the rest of you, this is no different." And like we're saying, by all accounts that seems to be fair and true.

If you have a whole collection of these images and material that NCMEC has flagged, yeah, you should worry about this, but that's a very, very small percentage of people. And if you're worried about having it, you probably don't have it. It's one of those things.

So I dunno. I mean, do you, in your conversations with just normal people, not just on Twitter, but maybe in person or family and friends, has anyone asked you about this and what kind of concerns have they expressed?

Jason Aten: Literally no one has asked me about this who isn't a tech person, on Twitter, or a journalist. No one, because most people don't spend nearly as much time following what's happening as people like you and me.

That said, we went to dinner for my parents' anniversary yesterday, and it's not like someone at the dinner table asked, "So what do you think about Apple's new CSAM detection?" Everyone would be like, "I'm sorry, what?" I don't even want to define the term. But I will say that even my wife, who pays attention to these things because of what I do, said she thinks she vaguely saw that, but she doesn't follow Twitter. For most people, it's not going to be something they pay any attention to, and it won't change their life in any way. At this point, the one exception is that it does give other companies like Facebook or Epic ammunition to try to chip away at Apple's armor.

And people may hear those types of things. Where it could affect people's lives is if some of the privacy concerns are accurate and this is just the first step. We could get to a point where we look back and it's the slowly boiling frog type of situation, where we're like, "You know that Apple company we used to love because they were the company that just didn't meddle in our business? Yeah, man. Now every time I send a message, I have to get it approved by machine learning or something like that." I don't think we're going to go there. I honestly wish Apple were a little bit clearer about things like this, and I really wish they had handled the rollout better.

But I'll still give them the benefit of the doubt, because in the 20 years I've been using Apple products, I feel like that's enough time to gauge where they stand on these types of things.

Stephen Robles: Right. And I don't think this is suddenly changing Apple's stance or mission on privacy and security.

I mean, let's not forget all the features they announced at WWDC, like iCloud+ and Private Relay obscuring your IP address from trackers, and all the other privacy and security features. Now, one thing Gruber recommended in his article, which I hadn't even thought about, is that he would love to see complete end-to-end encryption for iCloud.

Meaning when your phone gets backed up to iCloud, it's all encrypted, because right now only some aspects are encrypted, such as iMessages in iCloud, but not the entirety of your iCloud data. So I would like to see that in the future. And again, the botched rollout is in the past; we can't go back in time.

And I don't know if this is the kind of topic where Apple would do one of these random interviews with tech journalists and pundits. I feel like if anyone, it would be Gruber; they would probably get on a Zoom call with him. But I think it would be good, sooner rather than later, for us to hear from maybe Craig Federighi or some privacy VP at Apple, so we can hear them talk about it and hear their heart for it. Because again, everybody can get behind eradicating the kind of material they're targeting. Everyone knows it's terrible. But as for the method, it would be nice to hear them explicitly state some things.

Like Nilay has been pressing: really clarify. If you're going to release white papers on the technology, get all the way down into the weeds and say whether you're hashing people's photos when they don't use iCloud Photo Library. Get all the way down there.

And for people who are like, "I don't want to participate in this system," you have two options. 1) Don't use an iPhone, although you'll probably have some kind of CSAM detection on your Android phone or other device if you use one. Or 2) turn off iCloud Photo Library. I got into a couple of tweet conversations with people saying, "Well, you can't do that. Not using iCloud Photo Library is just impossible." Well, it's not impossible. You can go into the settings on your iPhone, iPad, and Mac, go to iCloud, go to Photos, and turn off iCloud Photo Library. It would be incredibly inconvenient, and you'd be missing a huge benefit of iCloud if you pay for that service, but you can disable iCloud Photo Library and instead use the Google Photos app to upload your entire camera roll, or use the Dropbox app to upload your stuff there.

But you also have to be mindful that Google and Dropbox and whoever else you choose to upload your photo library to might be doing the same thing, but not on your device, they just might be doing it in the cloud.

Jason Aten: Yeah. And I think this is a great example of a very, very difficult situation. The type of content we're talking about is horrifying, and anything that can be done to eliminate it is a step in a good direction. The question is how many steps you have to take before you start to violate the privacy of people who have nothing to do with that type of content. And the reason I think it's an issue for Apple is just its history and the stance it has taken.

Again, I put this all back on the way it was rolled out. Most people would probably have given them the benefit of the doubt if they had just been transparent about some of the questions they've now answered in this FAQ.

Real-time follow-up: Nilay just responded, saying that this is not Apple explicitly saying you can completely turn off local hashing of your photos, which I had literally just sent him a little while ago. You know, make them say it on the record.

And I agree it would be helpful for them to say whether the images are still being hashed. That sort of opacity just serves to create a little more doubt in people's minds. And I think that's difficult for people who have put so much trust in the company, to now sit there and be like, "Okay, but now you're making me wonder."

As for the alternatives you described, I still think you're better off with Apple than anybody else. For all we know, and I don't know this for a fact, Google is already scanning your images in the cloud, because they don't have a way to do it differently on an iPhone. Thankfully, Apple is not going to let them download a database and do this. Right. It's a positive thing that Apple would never let anybody else do that.

So I still think this is a more privacy-protective approach than you might experience elsewhere. The question isn't whether they should be detecting this type of material; it's, "Okay, but how far into my life are you going to go?"

Stephen Robles: Right. Listeners, I'd be curious, those of you who tuned into this podcast and have now listened to this, what is the concern level?

I'm curious: on a scale of one to 10, has this entire CSAM detection hashing system raised any concern for you about privacy and security with Apple, or are you unchanged? Let me ask you, Jason: on a scale of one to 10, what is your level of concern about these announcements, and this system in particular?

Jason Aten: On the particulars of this situation, my level of concern is pretty low, personally, because I have zero concern that I'm ever going to get flagged for this type of thing. So in that regard, I'm not concerned. I think whatever happens next will be a big indicator of whether that concern stays at like a one or a two, or becomes like a seven.

Does that distinction make sense? I've read through the documents and I understand what they're doing, but I would agree that there's just enough vagueness in some of the statements that they're leaving themselves enough wiggle room to have plausible deniability in the future, to say, "Well, we didn't promise you that this wasn't going to happen."

Stephen Robles: Right. I mean, they seem to have been explicit about the whole government thing, whether in other countries or here, but to your point, yeah: right now, understanding the entire system, I'm at like a one or two. But I don't think the FAQ PDF they released Monday morning is enough. I do think there needs to be additional communication.

I really think some kind of video interview would be good. If not a video interview, then some kind of explainer from Apple's privacy team, like a WWDC breakout session. I don't know, but I think there needs to be more communication before we get to the iPhone launch and iOS 15.

And I'm sure Apple knows this, because you don't want to go into a fall where you're releasing all these new products with this concern still in the air. Then again, Apple's products usually get so much media coverage that maybe it doesn't matter, you know? Like the whole Hey debacle: WWDC right after that kind of washed it away.

So maybe that's the case here, but I don't know. I feel like Apple's opponents are going to be loud about this until there's more clarification.

Jason Aten: Well, first of all, if anybody from Apple is listening and wants to give an interview, I'm sure Stephen will be happy to talk to you, but my DMs are open on Twitter.

I'd love to talk to you. I'm happy to have that conversation. Tim Cook, just hit me up. If you're listening, I will open my DMs for you. Period. Anyway, I will also say, and I've written this a lot of times, that trust is your most valuable asset, and that's especially true for Apple.

When people say they love Apple products because they're simple to use or they just work, right? That's the thing: it just works. That's a level of trust. What they're really saying is, I trust that when I open the box, it will just work the way I expect it to. I trust that when I put my data on there, it's going to be safe. I trust that when I give it to my kid, I don't have to worry about where they're going to end up, because there are these controls.

I trust that I can let my grandma use this iPhone and she's going to be able to figure it out. That's all trust. And so I think the important thing here is, like you said, Apple can't get out ahead of it, because they're already behind. But I do think it would benefit them to have a conversation with somebody and just lay it out: we still believe that privacy is a fundamental human right.

But we also believe that, at the same time, we can do something to fight this horrible content that exists on the internet, so here's what we're doing. I think that will go a long way toward rebuilding whatever trust has been dinged by this, even if it's a small amount. You can't really quantify what that means over the long term of a relationship.

How many people might not update to iOS 15? I don't know. How many people might skip the iPhone 13 because it comes with iOS 15 pre-installed? I don't know. But it seems like such a no-brainer, and so simple, to just have that kind of conversation, do a video interview with somebody, speak transparently, and say, "This is why we're doing this. This is what it means. And yes, we still believe in these things."

Stephen Robles: Absolutely. Well, I think that's a good stopping place.

Listeners, again, I would love to hear from you. What is your concern? How do you feel? What other questions do you have, just in case Tim Cook comes on the AppleInsider Podcast? Or maybe Tim Cook, Jason Aten, and I do a three-way conversation. I'm totally down for it.

Let me know, and reach out. You can tweet at me, and you can follow @jasonaten on Twitter. He's a great follow there. Where else can people read your work, Jason?

Jason Aten: I publish a column every day at inc.com. That's probably the best place to read all of the thoughts that I have about topics like this.

Stephen Robles: Awesome. And we'll put links to that in the show notes as well.

Jason, thanks again for coming on the show.

Jason Aten: Thank you very much.

Links from the show

- What you need to know: Apple's iCloud Photos and Messages child safety initiatives

- Apple expanding child safety features across iMessage, Siri, iCloud Photos

- Internal Apple memo addresses public concern over new child protection features

- The Backlash Over Apple's iPhoto Scanning Could Have Been Avoided. It's a Masterclass in Ineffective Communication | Inc.

- The CEO of WhatsApp Attacked Apple Over Privacy. He Seems to Have Forgotten He Works for Facebook | Inc.

- Apple's Decision to Scan Your Photo Library for Exploitative Content Isn't a Privacy Problem. It's Much Worse

- Apple's New 'Child Safety' Initiatives, and the Slippery Slope | Daring Fireball

- Apple is prying into iPhones to find sexual predators, but privacy activists worry governments could weaponize the feature | Washington Post

- Nilay Patel's Tweet

- Apple's Child Safety Initiative

- Apple's Expanded Protections for Children FAQ

Support the show

Support the show on Patreon or Apple Podcasts to get ad-free episodes every week and early access to the show!


More AppleInsider podcasts

Subscribe and listen to our AppleInsider Daily podcast for the latest Apple news Monday through Friday. You can find it on Apple Podcasts, Overcast, or anywhere you listen to podcasts.

Tune in to our HomeKit Insider podcast covering the latest news, products, apps and everything HomeKit related. Subscribe in Apple Podcasts, Overcast, or just search for HomeKit Insider wherever you get your podcasts.

Podcast artwork from Basic Apple Guy. Download the free wallpaper pack here.

Those interested in sponsoring the show can reach out to us by email.

Follow your hosts

Keep up with everything Apple in the weekly AppleInsider Podcast — and get a fast news update from AppleInsider Daily. Just say, "Hey, Siri," to your HomePod mini and ask for these podcasts, and our latest HomeKit Insider episode too. If you want an ad-free main AppleInsider Podcast experience, you can support the AppleInsider podcast by subscribing for $5 per month through Apple's Podcasts app, or via Patreon if you prefer any other podcast player. AppleInsider is also bringing you the best Apple-related deals for Amazon Prime Day 2021. There are bargains before, during, and even after Prime Day on June 21 and 22 — with every deal at your fingertips throughout the event.
