As part of Global Accessibility Awareness Day, Apple has announced a slew of new iOS 16 features for iPhone and Apple Watch users who are deaf, hard of hearing, blind, or have low vision.

Apple has repeatedly been honored for its accessibility work, and has previously marked Global Accessibility Awareness Day by promoting its work in the field. Now it's announced a whole series of accessibility features that will be coming "later this year with software updates across Apple platforms."

"Apple embeds accessibility into every aspect of our work, and we are committed to designing the best products and services for everyone," Sarah Herrlinger, Apple's senior director of Accessibility Policy and Initiatives, said in a statement. "We're excited to introduce these new features, which combine innovation and creativity from teams across Apple to give users more options to use our products in ways that best suit their needs and lives."

Apple says that the new features utilize machine learning to help everyone "get the most out of Apple products." While Apple has not specified that the features will be part of iOS 16, it's probable that most will be present when that update launches in September or October.

Highlights of the new features include Door Detection for helping people with low vision, while VoiceOver is being expanded, and hard of hearing users will be able to see Live Captions of what is being said around them.

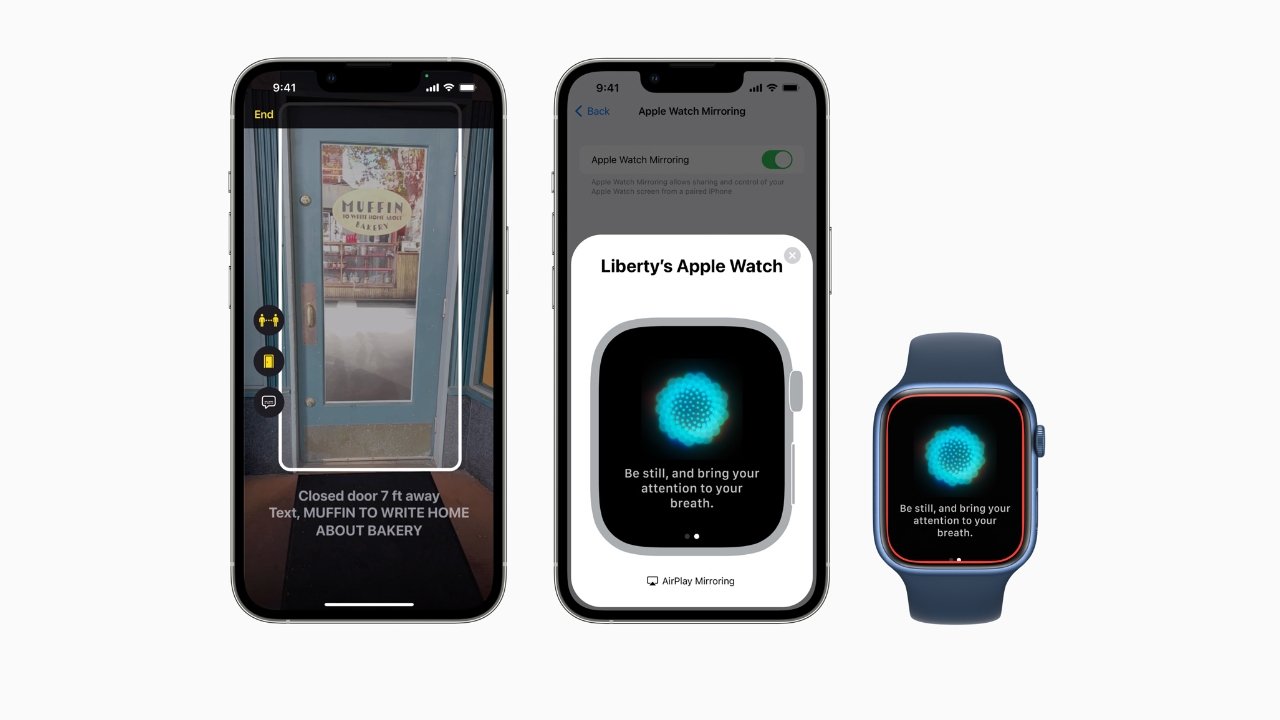

Door Detection

Door Detection is a "cutting-edge navigation feature," says Apple. It automatically locates doors "upon arriving at a new destination," shows the distance to a door, and describes it. That includes whether "it is open or closed, and when it's closed, whether it can be opened by pushing, turning a knob," and so on.

As part of this new feature, Apple is adding a Detection Mode to Magnifier, Apple's Control Center tool for helping low vision users. This Detection Mode will use Door Detection, People Detection, and Image Descriptions to give users rich details of their surroundings.

The feature requires LiDAR, which currently limits it to the iPhone 12 Pro, iPhone 12 Pro Max, iPhone 13 Pro, iPhone 13 Pro Max, and iPad Pro.

Physical and Motor Accessibility features

A new Apple Watch Mirroring feature is to help "users control Apple Watch remotely from their paired iPhone."

This means the iPhone's accessibility or assistive features, such as Voice Control, can be used "as alternatives to tapping the Apple Watch display."

Apple is also building on its previous AssistiveTouch feature where users could control the Apple Watch with gestures. "With new Quick Actions on Apple Watch," says Apple, "a double-pinch gesture can answer or end a phone call, dismiss a notification, take a photo, play or pause media in the Now Playing app, and start, pause, or resume a workout."

Live Captions

Available in beta form later in 2022, Live Captions will initially be supported only in the US and Canada. It will also require iPhone 11 or later, iPads with A12 Bionic processors or later, and Apple Silicon Macs.

With the right hardware, users will be able to use Live Captions to "follow along more easily with any audio content," says Apple. That's "whether they are on a phone or FaceTime call, using a video conferencing or social media app, streaming media content, or having a conversation with someone next to them."

During a group FaceTime call, the new Live Captions will also automatically attribute the transcribed captions to the right caller, making it clear who is saying what.

On a Mac during a call, Live Captions include the option to type a reply, and have that text spoken aloud for other people in the conversation.

More features

Alongside these headline features, Apple has also announced a series of settings and options that are designed to help with accessibility needs.

- Buddy Controller - combines two game controllers into one, so a friend or carer can help play

- Siri Pause Time - "users with speech disabilities can adjust how long Siri waits before responding to a request"

- Voice Control Spelling Mode - lets users dictate spellings letter by letter, for custom or unusual words

- Sound Recognition - iPhones can be trained to recognize a user's home alarm or appliances

- Apple Books - the app is adding custom controls for finer adjusting of line, character and word spacing

Celebrating Global Accessibility Awareness Day

Separately from the new features for Apple devices, the company is bringing SignTime to Canada from May 19. Already available in the US, UK, and France, the service connects "Apple Store and Apple Support customers with on-demand... sign language interpreters."

Canada is getting American Sign Language (ASL) interpreters, while the UK has British Sign Language (BSL), and France has French Sign Language (LSF).

May 19 is Global Accessibility Awareness Day, but throughout that week, Apple Stores are running sessions about accessibility features. Apple's social media channels such as YouTube will be "showcasing how-to content."

Shortcuts will also soon gain an Accessibility Assistant shortcut, which will "help recommend accessibility features based on user preferences," on Mac and Apple Watch.

Apple Maps is launching a new National Park Foundation guide called Park Access for All, while Apple Books, Apple Podcasts, and the App Store are showcasing "accessibility-focused" stories.

At the same time, Apple TV+ is also showcasing hit movies "featuring authentic representation of people with disabilities," which includes Apple's Oscar-winning "CODA" film. Apple Music is highlighting its "Saylists" playlists, which each focus on a different sound and are intended to be sung along to for speech therapy.

Lastly, while all Apple Fitness+ trainers are required to learn sign language, trainer Bakari Williams is going to use ASL to highlight the service's many accessibility options.

William Gallagher

3 Comments

I hope Apple lets Siri control a lot of these features. As an elderly person myself I know that using Siri voice to control features is a big help. And I’m really hoping that eventually Apple will bring back Siri’s ability to search photos so that it doesn’t have to be done manually.

For "Live Captions" in FaceTime, will that work for multiple users at the same time, even when FaceTime is muting one of the people, as it often does when two people are speaking? If yes, then this will fix FaceTime's most annoying problem, because it will let me "hear" things that are being muted by FaceTime.

Buddy Controller sounds absolutely awesome. What a great idea!