Accusations that Apple paid off benchmark developers so its iPhone can beat Samsung's latest models are unfounded, and based on tribalism. Here's why.

Social media complaints about Samsung's S23 Ultra doing worse than the iPhone after the introduction of Geekbench 6 have led to accusations of bias favoring Apple. In reality, it's just an issue of benchmarks being perceived as the be-all and end-all of a smartphone's worth.

Since the introduction of Geekbench 6 in February, fans of Samsung and Android have taken to Twitter and other public forums to complain about its results. Specifically, the internet beef is about how Samsung's Galaxy S23 Ultra scores versus the iPhone 14 Pro lineup.

A compilation of the charges by PhoneArena lays out the complaints as chiefly being how the scores have drifted further apart with the introduction of the new Geekbench 6.

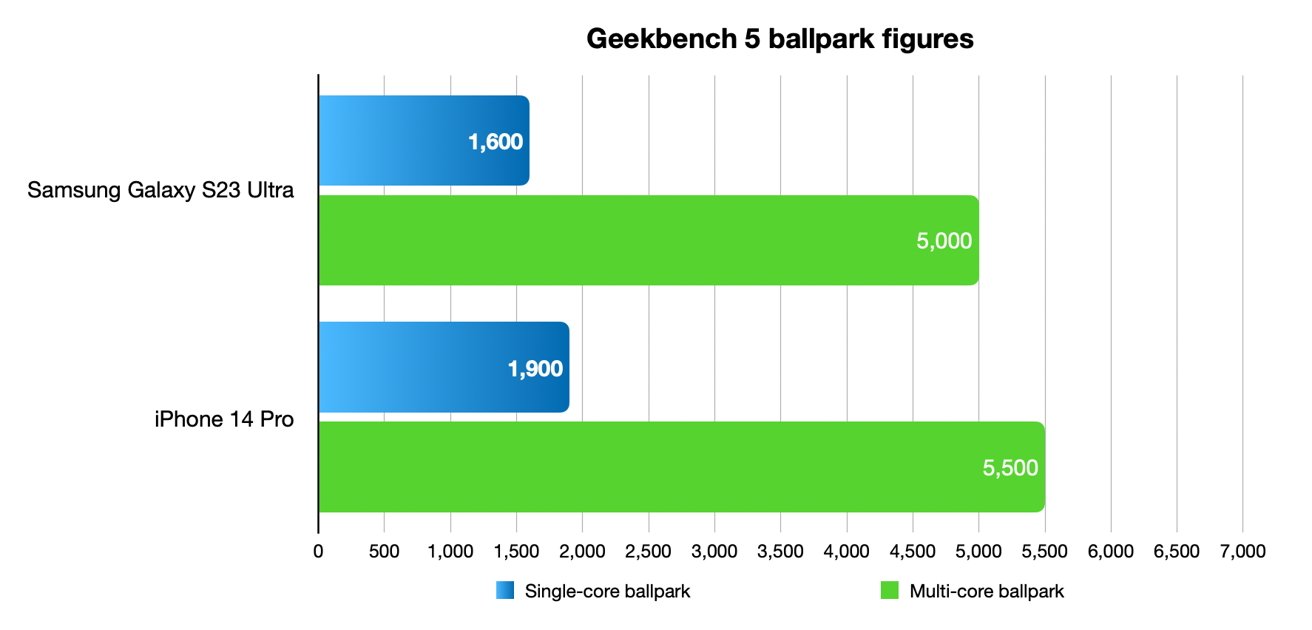

Under Geekbench 5, the Galaxy S23 Ultra would get around 1,600 for the single-core score and 5,000 for the multi-core, in the ballpark of the iPhone 14 Pro's 1,900 and 5,500 scores.

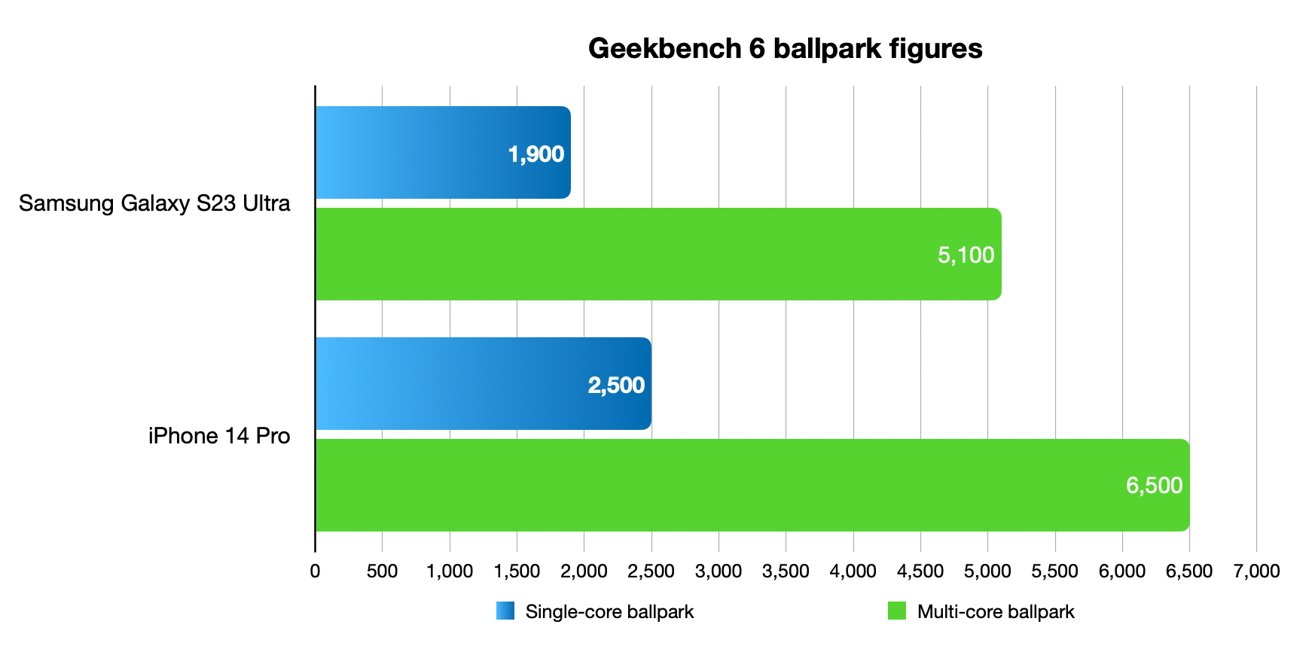

When tested using Geekbench 6, the Galaxy S23 Ultra would manage around 1,900 for the single-core test, and 5,100 for the multi-core. Meanwhile, the iPhone 14 Pro manages 2,500 for the single-core result and 6,500 for the multi-core.

In effect, going by those rounded scores, the iPhone is about 19% better in single-core and 10% better in multi-core than the Samsung under Geekbench 5. Switching to Geekbench 6, the lead has increased to about 32% and 27% respectively.
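For readers who want to check the arithmetic, the leads can be recomputed from the approximate scores quoted above. This is a minimal sketch in Python that assumes those rounded figures; the function and variable names are purely illustrative.

```python
def lead_percent(leader: float, trailer: float) -> float:
    """Percentage by which `leader` exceeds `trailer`."""
    return (leader / trailer - 1) * 100


# Approximate scores quoted in the article: (single-core, multi-core).
scores = {
    "Geekbench 5": {
        "iPhone 14 Pro": (1900, 5500),
        "Galaxy S23 Ultra": (1600, 5000),
    },
    "Geekbench 6": {
        "iPhone 14 Pro": (2500, 6500),
        "Galaxy S23 Ultra": (1900, 5100),
    },
}


def leads(version: str) -> tuple:
    """Return the iPhone's (single-core, multi-core) lead in percent."""
    iphone = scores[version]["iPhone 14 Pro"]
    galaxy = scores[version]["Galaxy S23 Ultra"]
    return tuple(lead_percent(i, g) for i, g in zip(iphone, galaxy))


for version in scores:
    single, multi = leads(version)
    print(f"{version}: single-core +{single:.1f}%, multi-core +{multi:.1f}%")
```

Because the inputs are rounded, the output is only as precise as the "around" figures it starts from.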

Social media inhabitants claim that this change in score must mean there is some sort of bias in play towards Apple. Surely a reasonably close race in Geekbench 5 should still be equally close in Geekbench 6, the tweets argue.

Therefore, to these people, there's some level of pro-Apple bias. As is nearly always the case, someone has already accused Apple of paying Geekbench off to bump up the results.

The game has changed

The first thing to consider is what goes into a benchmark itself. A synthetic benchmark performs a variety of tests, with results compiled into a final, singular score.

These tests don't change throughout the life of the benchmark generation. So, there's a level of consistency in testing between devices over a long period of time.

However, benchmark tools do have to update every so often to match trends in hardware specifications, and the type of tasks that a user can expect to undertake with their devices.

The release of Geekbench 6 did precisely this, with alterations to existing tests and the introduction of new tests to better match what is possible with a modern device. That includes new tests that focus on machine learning and augmented reality, which are considerable growth areas in computing.

"These tests are precisely crafted to ensure that results represent real-world use cases and workloads," the description for Geekbench 6 reads.

Machine learning is a growth area and is capable of creating "Art," so shifting a benchmark's focus in that direction makes sense.

Think of it as a race between a sprinter and someone into parkour. The race may normally be something like the 100-meter dash, which the sprinter is used to, but changing to something like a Tough Mudder obstacle course will probably end up with a different result.

If you take away nothing else from this piece, here's the main bullet point. If you change what's being tested, of course the results are going to be different.

It's no different than if you were to compare the results of Geekbench 5 to those of other benchmark suites. Since each suite runs different tests and weights them differently in its final scores, the performance gap between devices will also vary from one benchmark tool to the next.

If you think of Geekbench 6 as being a completely different benchmarking tool from Geekbench 5, the differences in performance are easier to understand.

Yes, a change in weighting to make some areas more important to a score than others can cause scores to change. But, so long as it doesn't affect the ability to directly compare a score against others from the same generation of the app, it's not really a problem.
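To see how a change in tests and weighting alone can move the gap between two devices, consider the following sketch. It is not Geekbench's actual scoring method: the weighted geometric mean, the subtest names, the weights, and every score here are invented purely for illustration.

```python
from math import prod


def composite(subscores: dict, weights: dict) -> float:
    """Weighted geometric mean of subtest scores (an assumed formula)."""
    total = sum(weights.values())
    return prod(subscores[name] ** (weights[name] / total) for name in weights)


# Invented subtest scores for two hypothetical devices.
device_a = {"compression": 1000, "rendering": 1000, "ml": 1600}
device_b = {"compression": 1100, "rendering": 1100, "ml": 1000}

# Hypothetical "old" suite ignores the ML subtest; the "new" suite counts it.
old_weights = {"compression": 1, "rendering": 1, "ml": 0}
new_weights = {"compression": 1, "rendering": 1, "ml": 1}


def ratio(weights: dict) -> float:
    """Device A's composite score relative to device B's."""
    return composite(device_a, weights) / composite(device_b, weights)


print(f"old suite: A/B = {ratio(old_weights):.2f}")
print(f"new suite: A/B = {ratio(new_weights):.2f}")
```

Neither device changed between the two runs. Only the mix of what was measured did, which is enough to shift the relative standing while scores within each suite remain directly comparable.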

The need for trust

Benchmark tools are in a privileged position, in that they rely entirely on users trusting them to be truthful in the results they provide. The developers say that a set of known tests will be performed by the tool, and that they will be performed in a certain way, every time.

By and large, benchmark tools thrive on this credibility, on the understanding that there's no company-specific bias at play. The results that come out are considered to be legitimate, with no foul play at all.

If, hypothetically, a benchmark developer were offered a huge bag of money to throw the results in one manufacturer's favor, it would be possible to accomplish. Except that the sudden difference in results compared to the rest of the benchmarking industry would probably cause users to question the results that test produces.

Such a situation will break trust in a benchmark tool's results since other results will be put into question.

Benchmark developers therefore need to reduce any bias in test results, making them as accurate as possible, to keep the credibility and trust they've built up.

Wait a hot minute, or two

That credibility takes time to form, which can be a problem for benchmark tools in the beginning.

After a year of operation, tools like Geekbench can build up a collection of results that users can refer to. With Geekbench 5 being so highly used by the media and enthusiasts, that collection is massively important.

However, as we've discussed, Geekbench 6 is not Geekbench 5, and it's only been out for a few weeks. It hasn't built up that catalog of results to be able to adequately enable comparisons between a wide spectrum of devices yet.

Unfortunately, that means people will be trying to compare Geekbench 6 results against Geekbench 5 until that catalog is fleshed out enough to matter.

This is a problem that won't be solved immediately, as it relies on the results gathered from millions of tests using the tool. That can take months to come into existence, certainly not the two weeks that have elapsed since the release of Geekbench 6 itself.

Wait a few months, then take a look at the benchmarks. If Geekbench 6 is trustworthy, you're going to see the same sort of trends across all of the devices tested by it.

A warning from history

With benchmarks considered a main way to compare one device against another, some are led to think they are the ultimate arbiter of what's the best smartphone that you can buy.

As we've just spelled out, a benchmark should only be a small part of your overall buying decision, and not the entirety of it. This prioritizing of benchmarks as the "most important thing" has already led to weird situations in the past.

Take the example of reports from March 2022, when Samsung was caught adjusting how its devices work specifically with benchmarks in mind.

To keep smartphones running cool and without issues, a smartphone producer can choose to limit the processing capability of its devices. This makes sense to a point, in that a red-hot smartphone isn't desirable to consumers, nor is one that drains its battery quickly.

At the time, Samsung was caught subjecting a long list of apps to "performance limits," namely throttling them for just such a reason. Except that benchmark apps like Geekbench 5 and Antutu didn't get throttled at all, and ran unrestricted.

To the end user, this would mean the device would benchmark well, but in actual usage, would end up functioning at a much lower level of performance than expected for many normal apps.

This effectively shortchanges the end user by making them believe, on the strength of benchmarks, that the device runs faster than it does in reality.

Benchmarks are not the real world

The whole point of a benchmark is that it gives you a standardized way to compare one device with another, and to generally know the difference in performance. The key is standardization, and like many areas of life, that's not necessarily going to lead to a true reflection of something's capabilities.

Specialization also extends to the benchmarks themselves. While Geekbench is a more generalized one, there are others with specific audiences in mind.

For example, many gamers rely on in-game benchmarks such as the one in Rise of the Tomb Raider. This makes sense as a benchmark since, being an actual game, it can better test the elements of a device's performance that matter to a gamer.

Meanwhile, though Cinebench offers testing focused on GPUs, it's largely more useful for those working in 3D rendering, since it caters more to that field rather than to general 3D needs.

There are also browser-based benchmarks, but while useful for those working in online-centric fields, they won't be that useful for those who work in 3D or are avid gamers.

Ideally, users need to choose the benchmark tools that meet their needs. Geekbench is a simple and generalized test suite, and while it isn't the best for specific scenarios, its ease of use and general-purpose nature make it ideal for mass-market testing, such as in publications.

Even so, regardless of what benchmark you use, you're not going to get a complete rundown for your specific needs. You'll still get an indication, but no certainties.

That sprinter is great at short-distance races, but they probably won't be that good at doing their taxes, or in knowing where the eggs are in a supermarket. Knowing how they place in a race won't help get your accounting done any faster, but you'll know at least that they are physically fit.

Likewise, a smartphone can do well at accomplishing specific tasks in a benchmark, but it's still an approximation of what you want to do with the device. For example, you may prioritize the time it takes to perform biometric unlocking, or the image quality of the camera.

A benchmark tool will only give a general guide to how a smartphone compares to another under specific conditions. It won't tell you how well it will fit in with your life.

Malcolm Owen


14 Comments

This is hilariously funny. When the Samsung/Android fanboys were basking in the benchmark superiority of their devices they were fine. Now that they have been caught or have fallen behind they simply can’t accept reality. What to do? What to do? Start a disinformation campaign of course and accuse Apple of skullduggery when Samsung did the exact thing they now accuse Apple of. I guess once a liar always a liar.

Wait for the usual suspects to come and explain how this is a tempest in a teapot and how Samsung is truly unbeatable no matter what benchmarks say. And you know who I mean.

Geekbench has seemingly wiped version 5 results from its website, just weeks (not months) into releasing version 6. I don't recall this happening so quickly to version 4 results when version 5 was released.

I'm not a fan of having a single number include machine learning. ML has nothing to do with my real-time use of a device. I think that performance measure should be reported separately. Sure you can dig into the individual benchmarks that comprise GB6 (and earlier), but everyone centers their focus on the top-level figure.