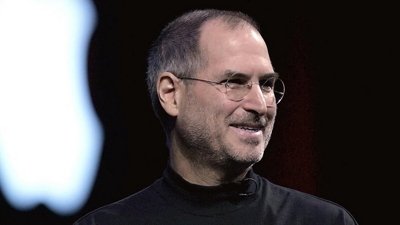

Artificial intelligence is the industry buzzword these days, and while Apple is remaining tight-lipped on the subject, CEO Tim Cook says it's already an integral part of the company's products.

Apple held its quarterly earnings call on Thursday evening, where analysts get a chance to ask CEO Tim Cook and CFO Luca Maestri questions about the results and the company. While many questions draw negligible replies, some answers provide insight into where things may be headed.

A few questions steered the call toward AI and Apple's investments in it. Apple's leadership was clear on the company's stance: AI wasn't just being developed, it was already part of everything.

"If you kind of zoom out and look at what we've done on AI and machine learning and how we've used it, we view AI and machine learning as fundamental technologies and they're integral to virtually every product that we ship," Cook replied to a question about generative AI. "And so just recently, when we shipped iOS 17, it had features like personal voice and live voicemail. AI is at the heart of these features."

Later in the call, Cook was asked about a roughly $8 billion increase in R&D spending and where that money was going. His answer was simple: among other things, it's going to AI, silicon, and products like Apple Vision Pro.

These comments are in line with what Cook and the company have said about AI in the past. Plenty of features in iOS already rely on machine learning and even AI, like the new transformer language model for autocorrect.

Some assume that Apple's AI efforts will lead to a more powerful Siri, though it isn't clear if or when such a project will surface.


11 Comments

And yet... when my iPhone transcribes a voicemail message, it spells my name wrong. A whole lot of intelligence there!

Likewise, I’m going to go out on a limb and declare that, in my view, pi and the speed of light c are fundamental constants of our universe.