The 3D Touch pressure sensitivity offered in some iPhone models could be improved upon in future models, with Apple examining the use of VCSEL technology to monitor deflections in a surface caused by a finger press, or even to analyze and monitor effects of the Taptic Engine.

The addition of 3D Touch to the iPhone introduced more ways for users to interact with notifications and apps, such as by making it possible to perform simple app actions without launching the app and going through a longer process. Such a system requires the use of a pressure-sensing display, but while it is adequate for normal use, Apple thinks it can go one stage better.

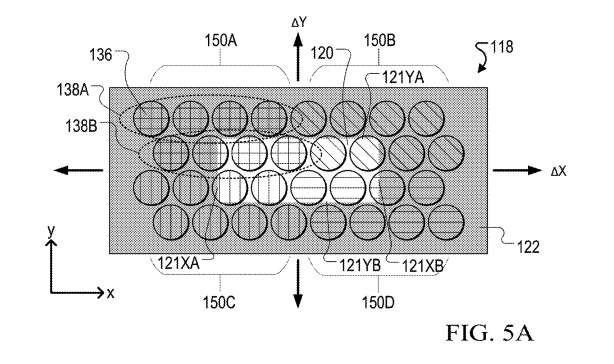

A patent granted by the U.S. Patent and Trademark Office on Tuesday titled "Motion sensing by monitoring intensity of light redirected by an intensity pattern" describes how the displacement of a mass can be tracked via the use of a pattern applied to a surface.

In the unusually technical patent, Apple explains that a pattern could be produced and placed onto a surface it would want to monitor. The pattern, potentially made using infrared-absorbing ink, is applied across the entire surface and produced so that there is a variation of the pattern at different points, making it easier to track.

The pattern could also be made to have both light-absorbing and reflective elements, coated with a "multilayer anti-reflection coating," or to have varying levels of reflectivity.
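
As a rough illustration of why a pattern that varies from point to point makes tracking easier, the Python sketch below models a one-dimensional strip whose reflectivity rises steadily from one edge to the other, so every position returns a distinct intensity. The linear gradient, the reflectivity limits, the pattern width, and the function names are all hypothetical assumptions made for illustration and are not taken from the patent.

```python
# Hypothetical 1D model of a patterned surface: reflectivity varies linearly
# across the pattern, so each position reflects a distinct amount of light.
# All constants are illustrative assumptions, not values from the patent.

R_MIN = 0.1       # reflectivity at one edge of the pattern (assumed)
R_MAX = 0.9       # reflectivity at the other edge (assumed)
WIDTH_UM = 100.0  # pattern width in micrometres (assumed)


def reflectivity(x_um: float) -> float:
    """Reflectivity of the pattern at position x, clamped to the pattern."""
    x = min(max(x_um, 0.0), WIDTH_UM)
    return R_MIN + (R_MAX - R_MIN) * x / WIDTH_UM


def detected_intensity(x_um: float, emitted: float = 1.0) -> float:
    """Light reaching the photodetector when the laser spot sits at x."""
    return emitted * reflectivity(x_um)


def position_from_intensity(intensity: float, emitted: float = 1.0) -> float:
    """Invert the linear reflectivity map to recover where the spot landed."""
    r = intensity / emitted
    return (r - R_MIN) / (R_MAX - R_MIN) * WIDTH_UM


# A surface shift moves the illuminated spot from 40 um to 55 um.
before = position_from_intensity(detected_intensity(40.0))
after = position_from_intensity(detected_intensity(55.0))
print(f"displacement approx {after - before:.1f} um")
```

A uniform pattern would return the same intensity everywhere, so no movement could be inferred; the point-to-point variation is what lets a single intensity reading map back to a position.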

Using a pair of vertical cavity surface emitting laser (VCSEL) arrays, light is emitted to illuminate the pattern in two different directions, with light reflections picked up by a pair of photodetectors. The important element is that each VCSEL is paired with a photodetector, and each VCSEL illuminates the pattern in such a way that it is detected by its paired sensor, not the other.

When pressure is applied to the surface and deforms it, each sensor can track the resulting changes in the pattern, which in turn can be used to measure how much the surface has deflected. In the case of a display, it may be possible to determine not only the amount of deformation but also exactly where its center lies, since data is available from two axes.
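
A similarly simplified sketch shows how readings from two VCSEL and photodetector pairs, one per axis, could be combined. Each pair's change in detected intensity is converted into a shift along its own axis using a hypothetical calibration gain, and the two shifts are merged into an overall size and direction of movement. The gain values and function names are assumptions, and treating the direction as a cue to where the press is centered is likewise an assumption rather than a detail from the patent.

```python
import math

# Hypothetical calibration: change in normalized detected intensity per
# micrometre of surface movement for each pair. Assumed values only.
GAIN_X = 0.008  # pair A, tracking the x axis
GAIN_Y = 0.008  # pair B, tracking the y axis


def axis_shift(baseline: float, reading: float, gain: float) -> float:
    """Convert one VCSEL/photodetector pair's intensity change into a shift."""
    return (reading - baseline) / gain


def deflection(baseline_a: float, reading_a: float,
               baseline_b: float, reading_b: float):
    """Combine both pairs into a 2D shift, its magnitude, and its direction."""
    dx = axis_shift(baseline_a, reading_a, GAIN_X)
    dy = axis_shift(baseline_b, reading_b, GAIN_Y)
    magnitude = math.hypot(dx, dy)
    direction_deg = math.degrees(math.atan2(dy, dx))
    return dx, dy, magnitude, direction_deg


# Both pairs start at a baseline of 0.5; a press raises the readings.
dx, dy, size, angle = deflection(0.500, 0.524, 0.500, 0.532)
print(f"dx={dx:.1f} um, dy={dy:.1f} um, size={size:.1f} um, direction={angle:.0f} deg")
```

With only one pair, movement along the other axis would go unseen; pairing each laser with its own detector keeps the two measurements independent so they can be combined this way.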

Apple believes such a system could serve as an alternative to existing displacement-measuring systems that rely on conventional "Hall sensing," which detects a magnetic field to determine changes in an object's position relative to a sensor. The patent suggests that Hall sensing can suffer from "displacement sensitivity dead zones" and can be disrupted by external magnetic fields, an issue for a device like an iPhone, which contains multiple magnets and electronics that generate such fields in the first place.
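
The dead-zone problem can be illustrated with a textbook model that does not come from the patent: a Hall sensor sitting a fixed distance below a magnet treated as a point dipole pointing toward it. Because the field is symmetric about the magnet's axis, its slope there is zero, so small sideways movements around that point barely change the sensor's reading. The function names and the unit gap are assumptions made for illustration.

```python
# Simplified model (not from the patent): a Hall sensor a fixed gap z below a
# magnet modelled as a point dipole aligned with z. Physical constants are
# dropped because only the shape of the response matters here.


def bz(x: float, z: float = 1.0) -> float:
    """Vertical field at lateral offset x from the dipole axis (arbitrary units)."""
    r2 = x * x + z * z
    return (2 * z * z - x * x) / r2 ** 2.5


def sensitivity(x: float, z: float = 1.0, h: float = 1e-6) -> float:
    """Central-difference dB/dx: signal change per unit of sideways movement."""
    return (bz(x + h, z) - bz(x - h, z)) / (2 * h)


for x in (0.0, 0.05, 0.25, 0.5):
    print(f"offset {x:4.2f}: field {bz(x):+.3f}, sensitivity {sensitivity(x):+.3f}")
```

Near zero offset the sensitivity collapses toward zero, which is the kind of dead zone described above. Any stray field from elsewhere in the phone would simply add to the value the sensor reads, with no way to tell the two apart, whereas light reflected from a printed pattern is not disturbed by magnetic fields in that way.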

The patent does mention the use of the system in association with haptic feedback systems, though it is unclear exactly why. It is plausible that it could be used to monitor the effects of the haptic feedback on a surface, depressed by a finger or not, or simply to minimize any detrimental effects that haptic feedback could have on a user's interaction with the display.

Apple files numerous patent applications with the USPTO on a regular basis, and while they can indicate areas of interest for the company, there is no guarantee that such systems will be implemented in future products and services.

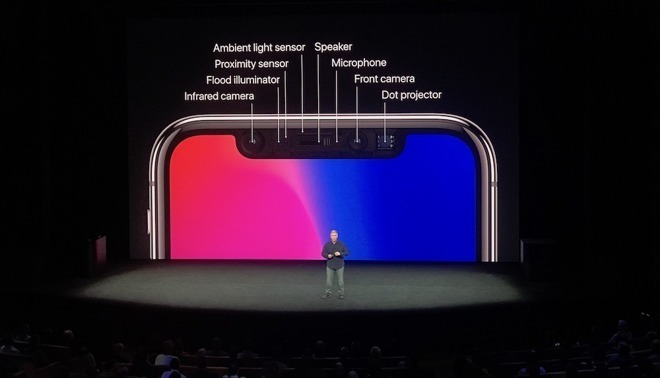

VCSELs are already an important part of Apple's products, with the best-known use being Face ID, where they are used to scan the user's face for biometric authentication via the TrueDepth camera system. The component is important enough that Apple used its Advanced Manufacturing Fund to invest $390 million in Finisar, a producer of VCSELs.

One patent application in December suggested Apple was looking at using VCSELs in other ways in the iPhone, employing them to aid communication between the camera sensor and other components. In theory, this could reduce the number of electrical signals that could interfere with the Hall Effect sensors used in the Optical Image Stabilization system, while also potentially reducing the size of the camera bump.

Malcolm Owen
