ARKit is Apple's framework and software development kit that developers use to create augmented reality games and tools. ARKit was announced to developers during WWDC 2017, and demos showed developers how they could create AR experiences for their apps.

Apple has pivoted hard into augmented reality, even though AR still isn't a feature that affects most users. AR experiences are widely available, but mostly to those who go looking for them.

While AR hasn't taken over the world by any stretch, there are signs that Apple's push for AR features and hardware will ultimately lead to a set of AR glasses, dubbed "Apple Glass." Until then, games like "Pokémon Go" and shopping apps like Amazon's are how most people use AR.

What is augmented reality?

Augmented reality, often abbreviated as AR, is the practice of superimposing computer-generated imagery onto a view of the "real world," usually through a smartphone's camera and screen. People often compare AR to virtual reality (VR), but VR instead creates a fully simulated environment that a user can "step into."

ARKit, for example, powers Apple's Measure app for iOS, which lets users measure rooms or objects with their device's camera.
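A Measure-style reading boils down to finding two points in world space and taking the distance between them. Below is a minimal Swift sketch, assuming ARKit's raycasting API (iOS 13 and later) is used to hit-test a point in the camera image against real-world surfaces. The helper names `worldPoint(at:in:session:)` and `distanceInMeters(from:to:)` are hypothetical; this is not how Apple's Measure app is actually built.

```swift
import ARKit
import simd

/// Hypothetical helper: casts a ray from a point in the camera image and returns
/// the world-space position (in meters) of the first real-world surface it hits.
/// `normalizedPoint` uses normalized image coordinates: (0, 0) is the top-left
/// of the captured image and (1, 1) the bottom-right.
func worldPoint(at normalizedPoint: CGPoint, in frame: ARFrame, session: ARSession) -> SIMD3<Float>? {
    let query = frame.raycastQuery(from: normalizedPoint,
                                   allowing: .estimatedPlane,
                                   alignment: .any)
    guard let hit = session.raycast(query).first else { return nil }
    // The translation of a 4x4 transform is stored in its fourth column.
    let translation = hit.worldTransform.columns.3
    return SIMD3<Float>(translation.x, translation.y, translation.z)
}

/// Hypothetical helper: ARKit world coordinates are in meters, so the
/// Euclidean distance between two world points is a length in meters.
func distanceInMeters(from a: SIMD3<Float>, to b: SIMD3<Float>) -> Float {
    simd_distance(a, b)
}
```

Because the world coordinates are already metric, no unit conversion is needed; an app would call `worldPoint` once per tap and pass both results to `distanceInMeters`.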

While AR is primarily confined to smartphones and tablets right now, many industry experts are bullish on the future of AR glasses and headsets. Microsoft HoloLens and Magic Leap One are two examples of head-mounted products that use AR technology today.

ARKit Features

ARKit uses Visual Inertial Odometry (VIO) to track the world accurately, combining camera sensor data with CoreMotion data. Together, these inputs let the iOS device sense how it moves within a room without any additional calibration.

The rear camera captures a live feed of the surroundings, and ARKit tracks how the picture changes as the angle of the iOS device changes. Combined with the movements detected in the CoreMotion data, this lets ARKit determine the device's motion and viewing angle relative to its environment.
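ARKit exposes the result of this sensor fusion on every `ARFrame`: `frame.camera.transform` is a 4x4 matrix whose fourth column holds the device's position in world space, in meters. The sketch below assumes an `ARSession` that is already running a world-tracking configuration with this object as its delegate; `cameraPosition(from:)` and `TrackingObserver` are hypothetical names.

```swift
import ARKit
import simd

/// Hypothetical helper: extracts the translation (position in meters)
/// from a 4x4 rigid transform, which ARKit stores in the fourth column.
func cameraPosition(from transform: simd_float4x4) -> SIMD3<Float> {
    let t = transform.columns.3
    return SIMD3<Float>(t.x, t.y, t.z)
}

/// Hypothetical delegate that records where the device is on each new frame.
final class TrackingObserver: NSObject, ARSessionDelegate {
    private(set) var lastPosition: SIMD3<Float>?

    func session(_ session: ARSession, didUpdate frame: ARFrame) {
        // Only trust the pose once ARKit reports fully normal tracking.
        if case .normal = frame.camera.trackingState {
            lastPosition = cameraPosition(from: frame.camera.transform)
        }
    }
}
```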

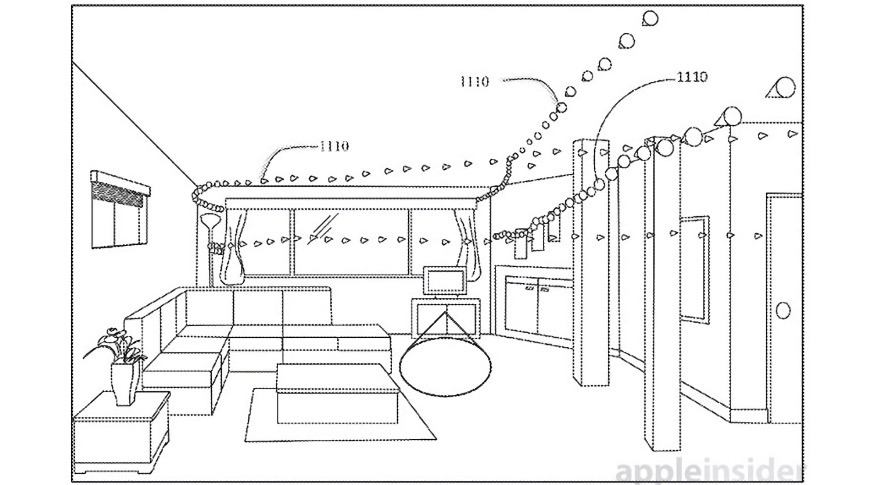

ARKit is capable of finding horizontal planes in a room, such as a table or a floor, which can then be used to place digital objects. It also keeps track of those objects' positions when they temporarily move out of frame.
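Plane detection is opt-in on `ARWorldTrackingConfiguration`. A minimal sketch, assuming a session whose delegate is the hypothetical `PlaneObserver` below: ARKit reports each detected surface as an `ARPlaneAnchor`, and the anchor stays in the session while the surface is off screen, which is how placed content keeps its position.

```swift
import ARKit

/// Builds a world-tracking configuration that looks for horizontal surfaces.
func makePlaneDetectionConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    configuration.planeDetection = [.horizontal]
    return configuration
}

/// Hypothetical delegate that remembers every horizontal plane ARKit has found.
final class PlaneObserver: NSObject, ARSessionDelegate {
    private(set) var planeIDs = Set<UUID>()

    func session(_ session: ARSession, didAdd anchors: [ARAnchor]) {
        // Plane anchors arrive mixed in with other anchor types.
        for case let plane as ARPlaneAnchor in anchors where plane.alignment == .horizontal {
            planeIDs.insert(plane.identifier)
        }
    }
}

// Usage, assuming `session` is an existing ARSession:
// session.delegate = observer
// session.run(makePlaneDetectionConfiguration())
```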

The system also uses the camera sensor to estimate the environment's lighting. This data is useful for applying lighting effects to virtual objects so they closely match the real-world scene, furthering the illusion that the virtual item is actually in the real world.
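The lighting estimate arrives on each frame as an `ARLightEstimate`. Apple documents an `ambientIntensity` of 1000 lumens and an `ambientColorTemperature` of 6500 Kelvin as neutral, so a renderer can scale its virtual lights relative to those values. This is a sketch under that assumption; `relativeBrightness(ambientIntensity:)` and `LightingObserver` are hypothetical names.

```swift
import ARKit

/// Hypothetical helper: 1.0 means neutral lighting, values below 1.0 mean a darker room.
func relativeBrightness(ambientIntensity: CGFloat) -> CGFloat {
    ambientIntensity / 1000
}

/// Hypothetical delegate that tracks the latest lighting estimate.
final class LightingObserver: NSObject, ARSessionDelegate {
    private(set) var brightness: CGFloat = 1
    private(set) var colorTemperature: CGFloat = 6500 // Kelvin; 6500 is neutral white

    func session(_ session: ARSession, didUpdate frame: ARFrame) {
        // lightEstimate is nil when isLightEstimationEnabled is false on the configuration.
        guard let estimate = frame.lightEstimate else { return }
        brightness = relativeBrightness(ambientIntensity: estimate.ambientIntensity)
        colorTemperature = estimate.ambientColorTemperature
    }
}
```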

ARKit can track a user's face via the iPhone's TrueDepth camera. By creating a face mesh from TrueDepth data, apps can add effects to the user's face in real time, such as virtual makeup or other elements for a selfie. The Animoji feature also uses this system.
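Alongside the face mesh (`ARFaceAnchor.geometry`), ARKit reports blend-shape coefficients from 0.0 (neutral) to 1.0 (fully expressed) for individual facial movements, which is the kind of signal Animoji-style effects respond to. The sketch below assumes a session running `ARFaceTrackingConfiguration`; the 0.5 threshold, `isMouthOpen(_:)`, and `FaceObserver` are hypothetical.

```swift
import ARKit

/// Hypothetical threshold for treating the jaw as "open".
let jawOpenThreshold: Float = 0.5

/// Returns a face-tracking configuration, or nil on devices that can't track faces.
func makeFaceTrackingConfiguration() -> ARFaceTrackingConfiguration? {
    guard ARFaceTrackingConfiguration.isSupported else { return nil }
    return ARFaceTrackingConfiguration()
}

/// Hypothetical helper: checks the jawOpen coefficient against the threshold.
func isMouthOpen(_ blendShapes: [ARFaceAnchor.BlendShapeLocation: NSNumber]) -> Bool {
    guard let jaw = blendShapes[.jawOpen]?.floatValue else { return false }
    return jaw > jawOpenThreshold
}

/// Hypothetical delegate that updates whenever ARKit refreshes a face anchor.
final class FaceObserver: NSObject, ARSessionDelegate {
    private(set) var mouthOpen = false

    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let face as ARFaceAnchor in anchors {
            mouthOpen = isMouthOpen(face.blendShapes)
        }
    }
}
```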

Apple's ARKit documentation includes guides on building a first AR experience, handling 3D interaction and UI controls in AR, handling audio in AR, and creating face-based AR experiences.

Apple has also added lessons to its Swift Playgrounds app, giving younger and less experienced developers an introduction to the framework. The "Augmented Reality" Challenge teaches users the commands to turn on the iPad's camera, respond when planes are detected, place a character on a plane, and make the scene interactive.

ARKit is optimized for Apple's Metal and SceneKit frameworks, but it can also be incorporated into third-party tools such as the Unity and Unreal game engines.

ARKit 6

With ARKit 6, Apple brought 4K HDR video to AR scenes, with improvements to rendering, recording, and exporting. Other updates enhanced previously introduced features such as motion capture and location anchors.

ARKit 5

Updates in 2021 focused on improving existing features such as location anchors and face tracking. Apple also introduced the ability to detect App Clip Codes in AR, letting apps attach interactive objects or scenes to them.

ARKit 4

ARKit 4 arrived with iOS 14, which Apple released in September 2020, and includes several features that pave the way for improved AR experiences on new Apple hardware.

Location anchors let developers and users attach augmented reality objects to specific locations in the real world. When using AR on a device, users pan the camera around to find a suitable AR surface. Meanwhile, ARKit uses the surrounding architecture to help pinpoint the device's exact geolocation.

This is all handled on-device, using existing map data generated by Apple Maps and its Look Around feature.
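In code, a location anchor is an `ARGeoAnchor` placed by latitude and longitude, run under `ARGeoTrackingConfiguration`. Geotracking only works in areas Apple supports, so availability is checked first. A minimal sketch; the helper names are hypothetical, and the coordinates in the usage comment are illustrative only.

```swift
import ARKit
import CoreLocation

/// Hypothetical helper: creates an anchor pinned to a real-world coordinate.
func makeGeoAnchor(latitude: Double, longitude: Double) -> ARGeoAnchor {
    let coordinate = CLLocationCoordinate2D(latitude: latitude, longitude: longitude)
    return ARGeoAnchor(coordinate: coordinate)
}

/// Hypothetical helper: starts geotracking only if it is available at the
/// device's current location, then adds the anchor to the session.
func startGeoTracking(on session: ARSession, placing anchor: ARGeoAnchor) {
    ARGeoTrackingConfiguration.checkAvailability { available, error in
        guard available else {
            print("Geotracking unavailable: \(String(describing: error))")
            return
        }
        DispatchQueue.main.async {
            session.run(ARGeoTrackingConfiguration())
            session.add(anchor: anchor)
        }
    }
}

// Usage (illustrative coordinates):
// startGeoTracking(on: session, placing: makeGeoAnchor(latitude: 37.7749, longitude: -122.4194))
```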

ARKit 4's new Depth API takes advantage of the LiDAR system on fourth-generation iPad Pros, the iPhone 12 Pro, and the iPhone 12 Pro Max. Using the captured depth data and specific anchor points, a user can create a 3D map of an entire room in seconds.

This feature will allow for more immersive AR experiences and give the application a better understanding of the environment for object placement and occlusion.
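Apps opt into the Depth API through the `.sceneDepth` frame semantic, which is only supported on LiDAR devices running iOS 14 or later. Each frame then carries an `ARDepthData` whose `depthMap` is a pixel buffer of per-pixel distances. A sketch; `makeDepthConfiguration()` and `DepthObserver` are hypothetical names.

```swift
import ARKit
import CoreVideo

/// Returns a configuration with LiDAR depth enabled, or nil on devices without LiDAR.
func makeDepthConfiguration() -> ARWorldTrackingConfiguration? {
    guard ARWorldTrackingConfiguration.supportsFrameSemantics(.sceneDepth) else { return nil }
    let configuration = ARWorldTrackingConfiguration()
    configuration.frameSemantics.insert(.sceneDepth)
    return configuration
}

/// Hypothetical delegate that records the resolution of the latest depth map.
final class DepthObserver: NSObject, ARSessionDelegate {
    private(set) var depthMapSize: (width: Int, height: Int)?

    func session(_ session: ARSession, didUpdate frame: ARFrame) {
        // sceneDepth is nil unless the .sceneDepth frame semantic is enabled.
        guard let depthMap = frame.sceneDepth?.depthMap else { return }
        depthMapSize = (CVPixelBufferGetWidth(depthMap), CVPixelBufferGetHeight(depthMap))
    }
}
```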

ARKit 4 also extended face tracking to devices without a TrueDepth camera, as long as they have an A12 Bionic chip or later, and iOS 14 added hand pose detection through the Vision framework. Together these allow for more advanced AR games and filters that use body tracking. Applications can let a user simply use their face or hands as input for certain experiences, like a Snapchat filter or a video chat environment.

LiDAR and ARKit 3.5

The fourth-generation iPad Pro and iPhone 12 Pro series each include a LiDAR sensor on the rear of the device. Starting with an update to ARKit for iOS 13.4, Apple's software now supports the LiDAR system and can take advantage of the latest hardware.

With ARKit 3.5, developers will be able to use a new Scene Geometry API to create augmented reality experiences with object occlusion and real-world physics for virtual objects. More specifically, Scene Geometry will allow an ARKit app to create a topological map of an environment, unlocking new AR features and additional insights and information for developers.

The LiDAR Scanner is used for 3D environment mapping, which substantially improves AR. It works at distances of up to 5 meters and returns results almost instantly, making AR apps easier to use and more accurate.

Using a LiDAR sensor dramatically shortens AR mapping times and improves the ability to detect objects and people. It should also improve Portrait mode and photography in general, since 3D depth maps can accompany every image.
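The Scene Geometry API is exposed as scene reconstruction: on LiDAR devices, setting `sceneReconstruction = .mesh` makes ARKit deliver the environment as `ARMeshAnchor` objects whose triangle meshes can drive occlusion and physics. A sketch; `makeSceneReconstructionConfiguration()` and `MeshObserver` are hypothetical names.

```swift
import ARKit

/// Returns a mesh-reconstruction configuration, or nil on devices without LiDAR.
func makeSceneReconstructionConfiguration() -> ARWorldTrackingConfiguration? {
    guard ARWorldTrackingConfiguration.supportsSceneReconstruction(.mesh) else { return nil }
    let configuration = ARWorldTrackingConfiguration()
    configuration.sceneReconstruction = .mesh
    return configuration
}

/// Hypothetical delegate that tracks how many triangles each mesh chunk contains.
final class MeshObserver: NSObject, ARSessionDelegate {
    private(set) var faceCounts = [UUID: Int]()

    func session(_ session: ARSession, didAdd anchors: [ARAnchor]) {
        record(anchors)
    }

    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        // ARKit refines mesh anchors over time as it sees more of the room.
        record(anchors)
    }

    private func record(_ anchors: [ARAnchor]) {
        for case let mesh as ARMeshAnchor in anchors {
            faceCounts[mesh.identifier] = mesh.geometry.faces.count
        }
    }
}
```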

ARKit 3

ARKit 3 brought considerable fine-tuning to the system, using machine learning and improved 3D object detection. Complex environments can now be tracked more accurately for image placement and measurement.

ARKit 3 added People Occlusion, which lets a device track people so that digital objects can pass seamlessly behind or in front of them as needed.
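People Occlusion is enabled through the `.personSegmentationWithDepth` frame semantic, which asks ARKit to separate people from the background and estimate their depth on each frame so a renderer can hide virtual content behind them. Support depends on the device's chip, so the sketch checks first; `makePeopleOcclusionConfiguration()` is a hypothetical name.

```swift
import ARKit

/// Returns a world-tracking configuration with people segmentation and depth,
/// or nil if the device can't provide it.
func makePeopleOcclusionConfiguration() -> ARWorldTrackingConfiguration? {
    let semantics: ARConfiguration.FrameSemantics = .personSegmentationWithDepth
    guard ARWorldTrackingConfiguration.supportsFrameSemantics(semantics) else { return nil }
    let configuration = ARWorldTrackingConfiguration()
    configuration.frameSemantics.insert(semantics)
    return configuration
}
```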

It also brought Motion Capture, giving the device the ability to understand body position, movement, and more. This enables developers to use motion and poses as input to an AR app.
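Motion Capture runs under `ARBodyTrackingConfiguration` and reports a tracked person as an `ARBodyAnchor` carrying a 3D skeleton. Joint transforms from `modelTransform(for:)` are relative to the body anchor, so the anchor's own transform must be applied to get a world position. A sketch using the head joint as the example input; `worldTransform(anchor:joint:)` and `BodyObserver` are hypothetical names.

```swift
import ARKit
import simd

/// Returns a body-tracking configuration, or nil on unsupported devices.
func makeBodyTrackingConfiguration() -> ARBodyTrackingConfiguration? {
    guard ARBodyTrackingConfiguration.isSupported else { return nil }
    return ARBodyTrackingConfiguration()
}

/// Hypothetical helper: composes the anchor's world transform with a joint's
/// anchor-relative transform to place the joint in world space.
func worldTransform(anchor: simd_float4x4, joint: simd_float4x4) -> simd_float4x4 {
    anchor * joint
}

/// Hypothetical delegate that tracks where the person's head is in the world.
final class BodyObserver: NSObject, ARSessionDelegate {
    private(set) var headPosition: SIMD3<Float>?

    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let body as ARBodyAnchor in anchors {
            guard let head = body.skeleton.modelTransform(for: .head) else { continue }
            let t = worldTransform(anchor: body.transform, joint: head).columns.3
            headPosition = SIMD3<Float>(t.x, t.y, t.z)
        }
    }
}
```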

ARKit 3 allows for simultaneous front and back camera tracking. Users can now interact with AR content from the back camera by using facial expressions and head positioning.
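Simultaneous front and back camera tracking is a flag on the rear-camera world-tracking configuration: with `userFaceTrackingEnabled` set, ARKit adds an `ARFaceAnchor` for the user alongside the world content. The sketch enables it only where the device reports support; `makeWorldTrackingWithFaceInput()` is a hypothetical name.

```swift
import ARKit

/// Builds a rear-camera world-tracking configuration that also tracks the
/// user's face with the front camera, when the device supports it.
func makeWorldTrackingWithFaceInput() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    if ARWorldTrackingConfiguration.supportsUserFaceTracking {
        configuration.userFaceTrackingEnabled = true
    }
    return configuration
}
```

Facial expressions then arrive through the same `ARFaceAnchor.blendShapes` dictionary used by front-camera face tracking, so an app can map, say, a blink to an action on rear-camera content.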

Up to 3 faces can be tracked with ARKit Face Tracking, using the TrueDepth camera.
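The number of faces is set per session with `maximumNumberOfTrackedFaces`, and the device's ceiling is exposed as `ARFaceTrackingConfiguration.supportedNumberOfTrackedFaces`. A sketch that clamps a requested count to what the hardware allows; `clampedFaceCount(requested:supported:)` and `makeMultiFaceConfiguration(requestedFaces:)` are hypothetical names.

```swift
import ARKit

/// Hypothetical helper: keeps the requested face count between 1 and the device limit.
func clampedFaceCount(requested: Int, supported: Int) -> Int {
    max(1, min(requested, supported))
}

/// Returns a face-tracking configuration for several faces, or nil on unsupported devices.
func makeMultiFaceConfiguration(requestedFaces: Int) -> ARFaceTrackingConfiguration? {
    guard ARFaceTrackingConfiguration.isSupported else { return nil }
    let configuration = ARFaceTrackingConfiguration()
    configuration.maximumNumberOfTrackedFaces = clampedFaceCount(
        requested: requestedFaces,
        supported: ARFaceTrackingConfiguration.supportedNumberOfTrackedFaces)
    return configuration
}
```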

Developer Reaction and Initial Response

The developer response to ARKit's tools is "unbelievable," according to Apple VP of worldwide iPod, iPhone, and iOS product marketing Greg Joswiak in a late June 2019 interview. Noting quickly developed projects ranging from virtual measuring tapes to an Ikea shopping app, Joswiak said, "It's absolutely incredible what people are doing in so little time."

"I think there is a gigantic runway that we have here with the iPhone and the iPad. The fact we have a billion of these devices out there is quite an opportunity for developers," said Joswiak. "Who knows the kind of things coming down the road, but whatever those things are, we're going to start at zero."

ARKit Compatibility

ARKit was released alongside iOS 11, but its AR features require an A9 chip or later, so only iOS 11 devices from the iPhone 6s onward could use them.

ARKit 4 still has features that are compatible with devices running iOS 11, but its more advanced features are restricted to devices with an A12 Bionic chip or later. The latest features, including depth sensing, require a LiDAR sensor, which limits them to the fourth-generation iPad Pro, the iPhone 12 Pro series, and later models.
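Because support varies by chip and sensor, apps typically ask ARKit at runtime rather than checking device models. A minimal sketch that gathers the relevant class-level checks into one snapshot; the `ARCapabilities` type is hypothetical, while each property it reads is part of ARKit.

```swift
import ARKit

/// Hypothetical snapshot of which ARKit features the current device supports.
struct ARCapabilities {
    let worldTracking: Bool
    let faceTracking: Bool
    let bodyTracking: Bool
    let peopleOcclusion: Bool
    let sceneDepth: Bool          // requires LiDAR
    let meshReconstruction: Bool  // requires LiDAR

    static func current() -> ARCapabilities {
        ARCapabilities(
            worldTracking: ARWorldTrackingConfiguration.isSupported,
            faceTracking: ARFaceTrackingConfiguration.isSupported,
            bodyTracking: ARBodyTrackingConfiguration.isSupported,
            peopleOcclusion: ARWorldTrackingConfiguration.supportsFrameSemantics(.personSegmentationWithDepth),
            sceneDepth: ARWorldTrackingConfiguration.supportsFrameSemantics(.sceneDepth),
            meshReconstruction: ARWorldTrackingConfiguration.supportsSceneReconstruction(.mesh)
        )
    }
}
```

An app can then hide LiDAR-only tools when `sceneDepth` is false while still offering basic world tracking on older hardware.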

William Gallagher

Amber Neely

Wesley Hilliard

Mike Peterson
