Apple is updating its Vision framework in iOS 14 and macOS Big Sur to allow developers to detect body and hand poses or gestures within their apps.

The new functionality, which will debut in iOS 14 and macOS Big Sur later in 2020, was detailed in a WWDC 2020 video session on Wednesday. Apple didn't mention it during Monday's keynote address, however.

As noted in the video, the updates to the Vision framework will allow apps to analyze the gestures, movements or specific poses of a human body or hand.

Apple gives a series of examples of how developers can leverage that capability.

That includes a fitness app that could automatically track an exercise that a user is performing, a media editing app that can find photos or video clips based on the poses within them, or a safety app that can help train employees on correct ergonomic postures.

Hand gesture detection could also open up more opportunities for users to interact with third-party apps.

Apple's own Brett Keating showed off a proof-of-concept app that allowed a user to "draw" in an iPhone app using a finger gesture without touching the display.
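For a sense of what a gesture check like this might look like in code, here is a minimal Swift sketch built on Vision's `VNDetectHumanHandPoseRequest`. It is not Apple's demo code: the helper names `isPinching` and `pinchingHands`, the 0.3 confidence cutoff and the 0.05 distance threshold are all assumptions made for illustration.

```swift
import CoreGraphics
import Vision

/// Hypothetical helper: true when the thumb and index fingertips are close together.
/// The 0.3 confidence and 0.05 distance thresholds are illustrative guesses.
func isPinching(_ hand: VNHumanHandPoseObservation) throws -> Bool {
    let thumb = try hand.recognizedPoint(.thumbTip)
    let index = try hand.recognizedPoint(.indexTip)

    // Ignore joints that Vision is unsure about (for example, gloved hands).
    guard thumb.confidence > 0.3, index.confidence > 0.3 else { return false }

    // Point locations are normalized to the image, so the distance is unitless.
    let dx = thumb.location.x - index.location.x
    let dy = thumb.location.y - index.location.y
    return (dx * dx + dy * dy).squareRoot() < 0.05
}

/// Hypothetical helper: counts how many detected hands in a still image are pinching.
func pinchingHands(in image: CGImage) throws -> Int {
    let request = VNDetectHumanHandPoseRequest()
    request.maximumHandCount = 2  // Vision can track more than one hand per frame

    let handler = VNImageRequestHandler(cgImage: image, options: [:])
    try handler.perform([request])

    let hands = (request.results as? [VNHumanHandPoseObservation]) ?? []
    return try hands.filter(isPinching).count
}
```

A drawing app along the lines of the demo would presumably run a check like this on each camera frame and add a stroke point while the pinch holds, though the session's actual implementation may differ.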

The company notes that Vision can detect multiple hands or bodies in a frame, but the system may run into problems with people wearing gloves or robe-like clothing, as well as certain postures like people bending over or facing upside down.

In 2019, Apple updated its Vision framework to use machine learning to find cats and dogs in images and videos.

While Apple's ARKit offers similar analytic capabilities, they're restricted to augmented reality sessions using the rear-facing camera. The updates to Vision, however, could potentially be used in any kind of app.

Apple has taken steps to bolster its development of machine learning and computer vision systems across its platforms.

The new iPad Pro LiDAR sensor, which could potentially be added to the "iPhone 12," vastly improves motion capture. Portrait Mode on the 2020 iPhone SE also relies solely on machine learning to separate a photo's subject from its background.

Mike Peterson


4 Comments

Very cool video, ~25 minutes, discussing and demonstrating hand motion capture. I hope all future WWDC sessions post videos in the same way for all to see. But let’s go back to a live keynote next year, even if a live event has a few unexpected hiccups.

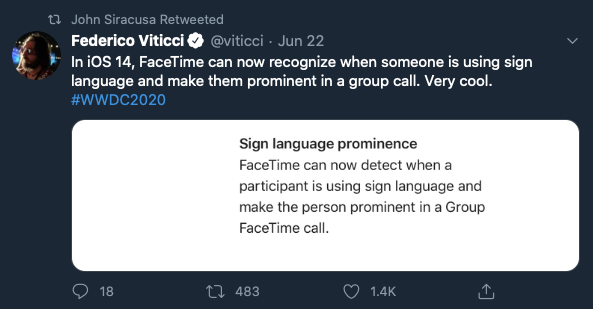

Related? I wonder if they'll be able to do live bidirectional translation of ASL soon, that'd be amazing.

Fantastic! Could be useful for gaming, AR, sign language interpretation, interpreting body gestures to control a UI without touching a surface and much more.