Alongside all of its work on live experiences in Apple AR and "Apple Glass," Apple wants users to be able to record, edit, and later view AR, together with automatically generated extras.

Apple has previously researched ways of recording AR — and playing those recordings back on 2D devices such as the iPad. Separately, it's also investigated recording AR audio, and it has already included VR editing in Final Cut Pro. Now it's continuing to explore ways of achieving recordable, useful AR, in a pair of newly-revealed patent applications.

"Computer-generated Reality Recorder," is the first, and it concerns the myriad problems of capturing a 360-degree AR/VR experience. Not only is there a vast amount of data to record from one person wearing a headset, there is also the issue that multiple people can be involved.

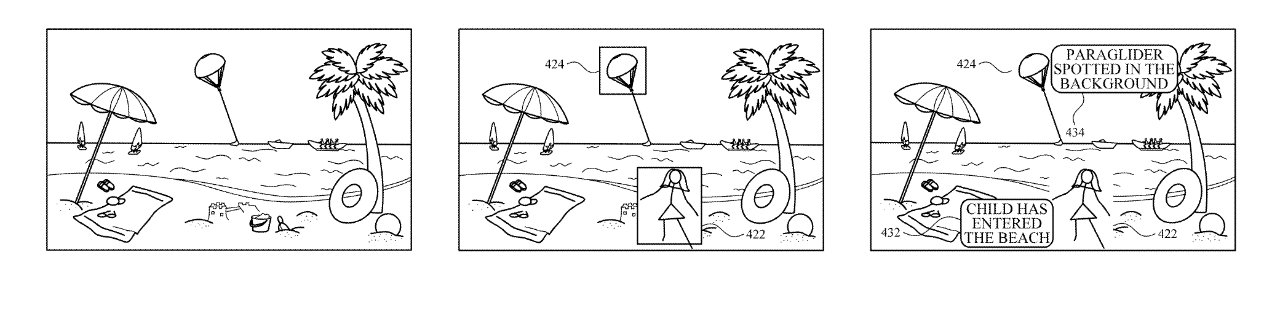

Apple doesn't resolve all of these problems within this patent application, but it does reduce them, because the proposal is really about recording very specific parts of an AR experience. It's about determining which parts a user is interested in, and then capturing those.

"The subject technology identifies, based at least in part on at least one of a user preference or a detected event, a region of interest or an object of interest in the recording of content," says Apple. "Based at least in part on the identified region of interest or object of interest, the subject technology generates a modified version of the recording of content."

The examples given are chiefly to do with sports, and so a user might for instance be particularly interested in following one player. Whatever other players do, and whatever the user chooses to watch at the time, the system could capture just what this one player does.
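As a rough illustration of that "follow one player" idea, here is a minimal Python sketch. It is not taken from the patent application: the `TrackedObject` and `Frame` types, the labels, and the `keep_object_of_interest` function are all hypothetical, and it assumes some earlier step has already detected and labeled the objects in each frame.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrackedObject:
    label: str   # hypothetical identifier, e.g. "player_10"
    box: tuple   # (x, y, width, height) within the frame


@dataclass(frozen=True)
class Frame:
    timestamp: float
    objects: tuple  # TrackedObject instances detected in this frame


def keep_object_of_interest(frames, label_of_interest):
    """Build a modified recording containing only the object of interest.

    Frames where the object does not appear are dropped, and the
    remaining frames keep only the matching object.
    """
    modified = []
    for frame in frames:
        matches = tuple(o for o in frame.objects if o.label == label_of_interest)
        if matches:
            modified.append(Frame(frame.timestamp, matches))
    return modified
```

A real system would presumably work on sensor data rather than tidy labels, but the shape is the same: pick the region or object of interest, then derive a modified recording from it.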

Then the recording could automatically have more information added into the scene.

"As a result, the electronic information appears to be part of the physical environment as perceived by a user," continues Apple. "[Plus it could include] a user interface to interact with the electronic information that is overlaid in the enhanced physical environment."

This is similar to Apple's prior research where "Apple Glass," or another device, could display comparison information for shoppers. Hold two items up and Apple AR would show related information about them both.

It's all about successfully mixing virtual objects or information with reality, and then recording the result to play back later. "[Playback features] a computer-generated reality environment including two-dimensional (2D) video for sharing and playback," says Apple.

Alternatively, the recording "subsequently generates a three-dimensional (3D) representation merging information from all sensors and/or combining recording with other users' recordings (e.g., different point of views (POVs) and/or different fields of view (FOVs))," continues the patent application.

Being able to determine points of interest, and interpret reality to know where to display information, means being conscious of proximity, position, and distance. Once a system can effectively pick out any given object in the scene, it can then also alter that object.

Hiding sensitive objects in an AR recording

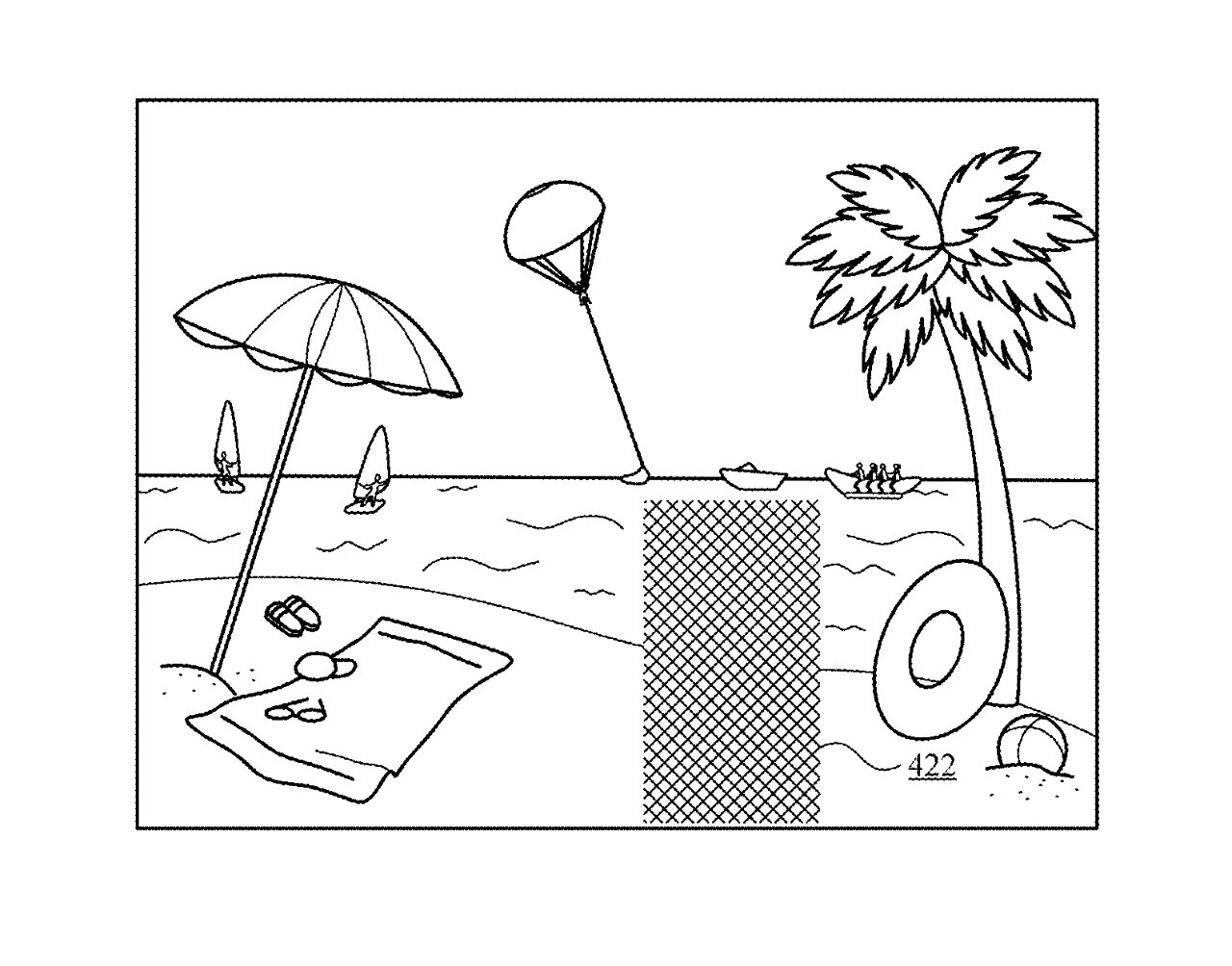

The second newly-revealed patent application regarding recording AR is concerned with altering objects in a scene when they are in some way sensitive. "Providing restrictions in computer-generated reality recordings" is similar to previous research where logos or other elements could automatically be blurred out in live video conferences.

That was to do with objects in a 2D space, whereas this new patent application covers real or virtual 3D ones in AR or VR.

According to Apple, this proposal involves "analyzing a recording of content within a field of view of a device," and recognizing "a set of objects included in the content."

"The subject technology identifies a subset of the set of objects that are indicated as corresponding to protected content," continues the patent application. There's no discussion over what constitutes protected content, because that's not a technical decision, it's an editorial one.

Whoever decides what counts as "protected content," the system can identify the objects and do something about them. "The subject technology generates a modified version of the recording that obfuscates or filters the subset of the set of objects," says Apple.

In other words, it puts a blur over an object, or redacts it, presumably as preferred by the user.

This is again applied during a live AR experience, but the patent application also specifies that the "modified version of the recording" is sent to a "host application for playback" later.
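To make the redaction step concrete, the following Python sketch shows one way such a filter could work on a single frame. It is an assumption-laden illustration rather than Apple's method: the frame is a plain grid of pixel values, the detections are assumed to arrive already labeled, and the `protected_boxes` and `redact` helpers are hypothetical names.

```python
def protected_boxes(detections, protected_labels):
    """Pick out the bounding boxes whose label is marked as protected.

    detections: list of (label, (x, y, width, height)) pairs.
    """
    return [box for label, box in detections if label in protected_labels]


def redact(frame, boxes, fill=0):
    """Return a copy of the frame with every box overwritten by `fill`.

    frame: list of rows, each row a list of pixel values.
    Boxes are clipped to the frame, and the input is left unmodified.
    """
    out = [row[:] for row in frame]
    height = len(out)
    width = len(out[0]) if out else 0
    for x, y, w, h in boxes:
        for r in range(max(y, 0), min(y + h, height)):
            for c in range(max(x, 0), min(x + w, width)):
                out[r][c] = fill
    return out
```

Swapping the flat fill for a blur would give the softer "obfuscates" option, while the flat fill corresponds more closely to outright redaction.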

These two patent applications share two inventors, Ranjit Desai and Maneli Noorkami. The second also credits Joel N. Kerr.

Desai's work, prior to joining Apple in 2016, includes a patent for Philips concerning an image retrieval system that's able to identify objects and people using color histograms.


William Gallagher


3 Comments

I've seen this movie. Chris Walken and Natalie Wood, right?

How is this different from the sports advertising from, for example a football match, where the players from one piece of action stay in colour but everyone and everything else is switched to grayscale? Or the Formula 1 footage from Sky where they sometimes have replays that display labels pointing to each car when the traffic is congested?

Doing it in real time will probably require AI assistance and thus much more processing power and more sophisticated models. I think those performance requirements are going to be a much more effective barrier to competition than a bunch of patents that at first blush seem to be pre-empted by prior art.

Didn’t go so well in Strange Days...