A future iPad, iMac, or MacBook may offer a unique form of privacy for a user looking at sensitive documents, by tracking their gaze to work out what part of the screen they are looking at, while simultaneously obscuring the rest of the display with bogus data to throw off onlookers.

Anyone viewing sensitive information, whether in printed documents or on a device's display, may worry about others looking over their shoulder to see what they're reading. Where discretion and confidentiality are needed, this can be a big problem, and it may limit where the person can actually read the information when other people are nearby.

For computer screens, privacy filters are available that restrict the angle from which the display can be seen to a narrow band. However, such filters aren't practical for mobile devices like an iPhone or iPad, and they could also make the viewing experience worse overall for their intended users.

In a patent titled "Gaze-dependent display encryption," granted to Apple by the U.S. Patent and Trademark Office on Tuesday, the company suggests that a user could openly read a document in public, with security provided through obscurity.

The patent first surfaced as an application in March 2020, and was originally filed on September 9, 2019.

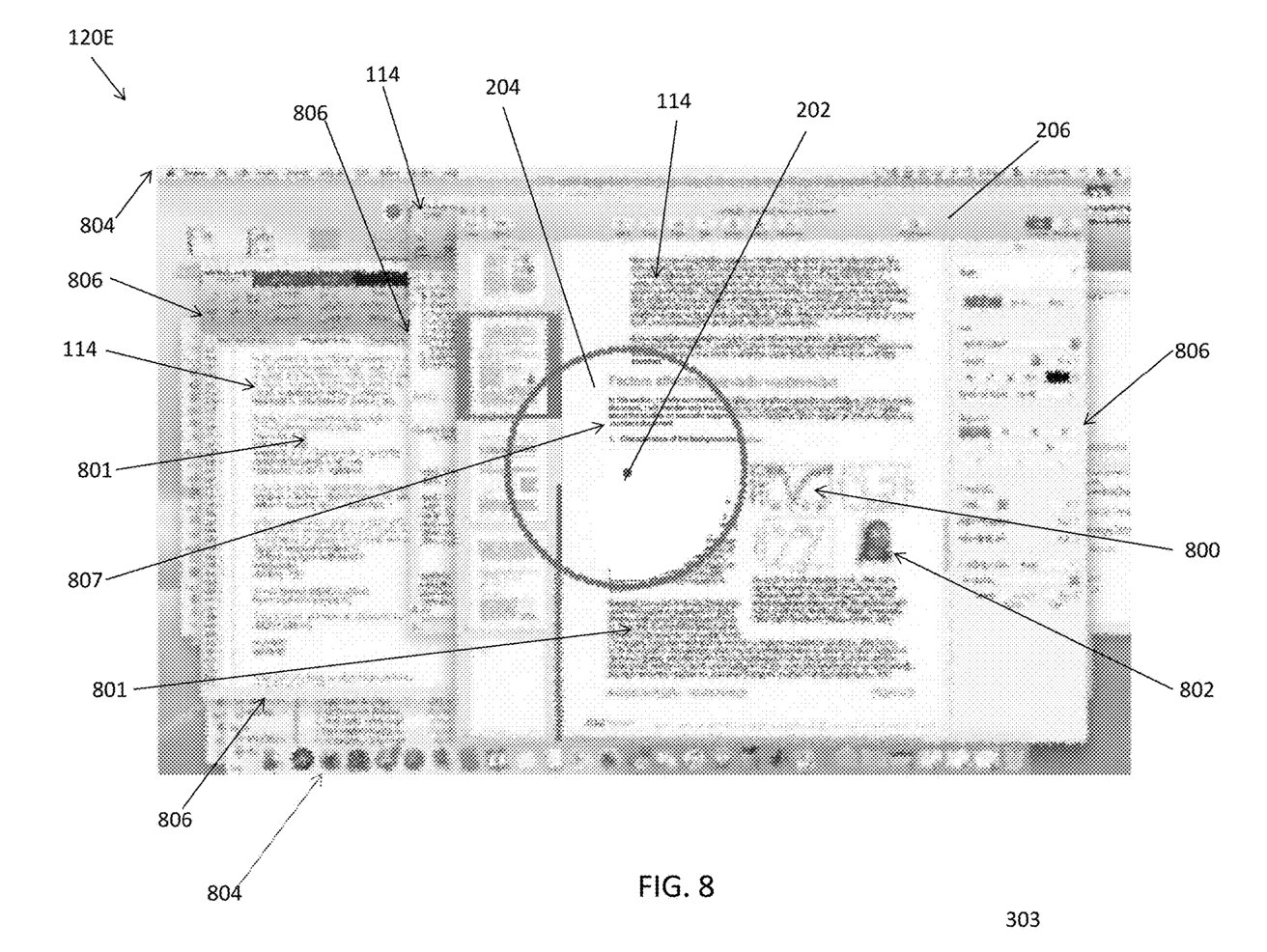

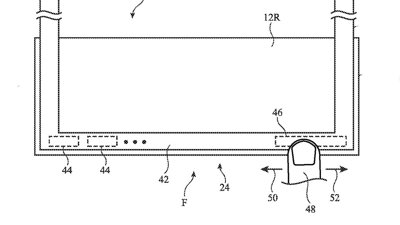

An example of a mostly-obscured display, with a region highlighted showing where a user's gaze is detected.

The idea of the patent revolves around the device knowing exactly where the user is looking on the display. By detecting the user's gaze, the device can use the point on the screen where that gaze falls as its starting point.

For that point of the screen and a limited surrounding area, the display appears as normal. Outside the area the system treats as the user's current focus, it has free rein to alter the image so that it becomes practically unreadable.

It can accomplish this in a few ways, such as replacing text with unintelligible versions or applying graphical filters to the areas the user isn't viewing. Whatever method is used, anyone nearby who sees the screen may be able to recognize the application being used, for example, but has no hope of reading what the user is looking at with a simple glance.
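
The patent doesn't spell out an implementation, but the basic idea of keeping only the area around the gaze point readable can be sketched in a few lines of Python. This is a minimal illustration under assumptions not taken from the patent: the screen is treated as a grid of monospaced character cells, the gaze point is assumed to already be known in those cell coordinates, the readable area is a simple circle, and the hypothetical `mask_outside_gaze` function and `MASK_CHAR` constant stand in for whatever filter a real device would apply.

```python
from math import hypot

# Hypothetical stand-in for a graphical filter: a light-shade block character
MASK_CHAR = "\u2591"


def mask_outside_gaze(lines, gaze_row, gaze_col, radius):
    """Return a copy of `lines` in which only cells near the gaze stay readable.

    Assumption: distance is measured in character cells, so the readable area
    is a circle of `radius` cells centred on (gaze_row, gaze_col).
    """
    masked = []
    for row, line in enumerate(lines):
        cells = []
        for col, ch in enumerate(line):
            in_focus = hypot(row - gaze_row, col - gaze_col) <= radius
            # Whitespace is kept so the overall page layout still looks normal
            cells.append(ch if in_focus or ch.isspace() else MASK_CHAR)
        masked.append("".join(cells))
    return masked


if __name__ == "__main__":
    page = [
        "Quarterly results are confidential",
        "Do not share outside the board",
    ]
    # Simulate the gaze moving from the first line to the second
    for gaze in [(0, 3), (1, 25)]:
        print("\n".join(mask_outside_gaze(page, *gaze, radius=4)))
        print()
```

Calling the function again with a new gaze point moves the readable window, and everything outside it is re-masked. A real system would work in screen pixels and would likely account for characters being taller than they are wide, which this sketch ignores.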

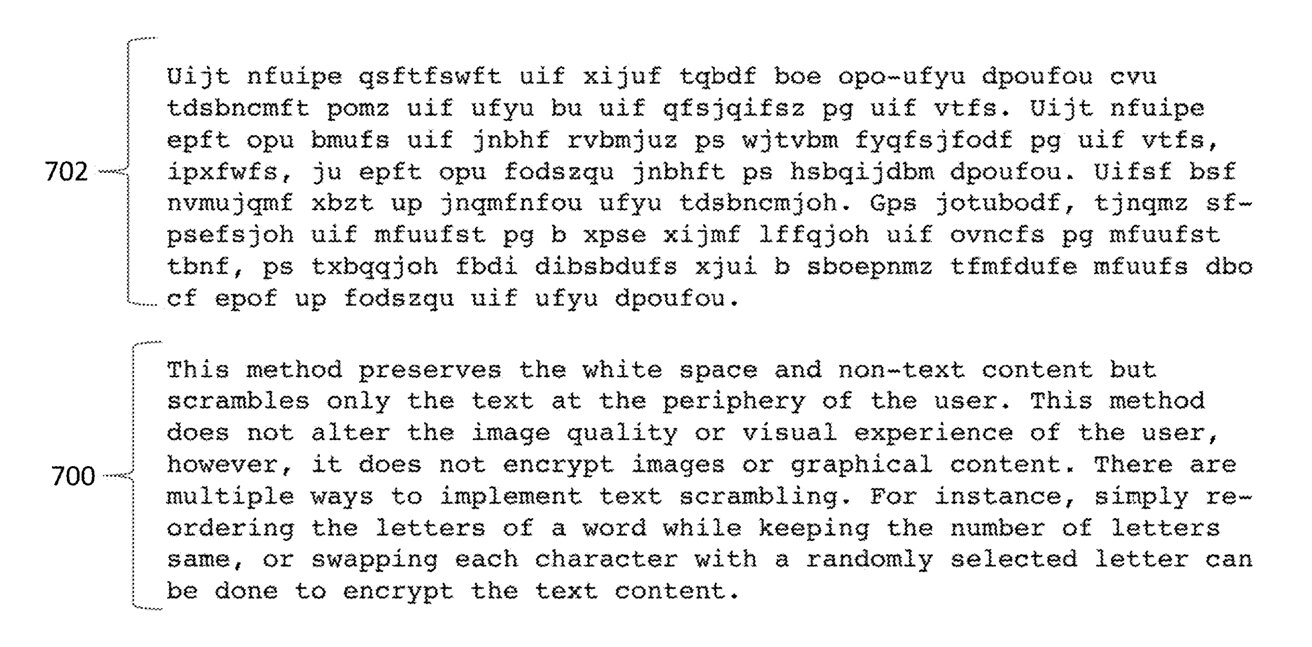

An example of how the text on a display could be turned into gibberish, while maintaining the same word lengths and letter spacing as the original.

While it is true that a section of the screen would be clearly viewable to anyone, it would only be the area where the user is looking, not the entire screen. By disguising the rest of the display rather than blocking it outright, the system forces observers to work out which area of the screen is actually readable at any given moment.
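
The text-scrambling approach in the example image can be approximated by swapping each letter or digit for a different random one of the same kind, while leaving spaces and punctuation untouched so word lengths and spacing survive. The sketch below is an illustration under our own assumptions rather than Apple's method; the `gibberish` function and its `seed` parameter are hypothetical, and Python's `random` module is used only to make the output look like text, not as a cryptographic safeguard.

```python
import random
import string


def gibberish(text, seed=None):
    """Scramble `text` while keeping its shape (hypothetical helper).

    Each ASCII letter becomes a different letter of the same case, each digit a
    different digit; spaces, punctuation and other characters stay in place, so
    word lengths and spacing match the original.
    """
    rng = random.Random(seed)
    out = []
    for ch in text:
        if ch in string.ascii_lowercase:
            pool = string.ascii_lowercase
        elif ch in string.ascii_uppercase:
            pool = string.ascii_uppercase
        elif ch in string.digits:
            pool = string.digits
        else:
            out.append(ch)  # leave spaces and punctuation untouched
            continue
        # Drop the original character from the pool so every swap changes it
        out.append(rng.choice(pool.replace(ch, "")))
    return "".join(out)


if __name__ == "__main__":
    line = "Merger talks resume on 12 May."
    print(line)
    print(gibberish(line, seed=7))
```

Because capitalization, digits, and punctuation keep their positions, a glance at the scrambled line still suggests ordinary prose, which is what makes it hard for an onlooker to tell which part of the screen is genuine.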

As the user shifts their gaze to a different part of the screen, the display moves the readable section based on the new gaze data, uncovering or disguising areas as needed. This means users always see the content they intend to and could read a document as normal, while others would find it extremely difficult to read along at the same time.

The patent lists its inventors as Mehmet Agaoglu, Cheng Chen, Harsha Shirahatti, Zhibing Ge, Shih-Chyuan Fan Jiang, Nischay Goel, Jiaying Wu, and William Sprague.

Apple files numerous patent applications on a weekly basis, but while the existence of a patent indicates areas of interest for Apple's research and development teams, it doesn't guarantee the idea will appear in a future product or service.

Apple may already have most of the components needed to implement this patent. Devices with Face ID, such as the iPhone and iPad Pro, have features like Attention Awareness, which checks that users are looking at the device before unlocking it. ARKit also includes eye-tracking elements, which could lead to broader gaze tracking down the line.

Apple's other patent filings have also raised the use of gaze detection for different purposes.

In 2019, one patent explained how gaze detection could address drift between the user's head position and that of the headset itself in AR and VR headsets. Gaze detection could also make it easier for users to select items from a menu while wearing the headset.

A patent application from September 2020 outlined an eye-tracking system that could be used in "Apple Glass," one that would cut down on the amount of processing needed to track a user's gaze.

In April 2021, Apple filings proposed using gaze tracking to control external cameras and to automatically adjust lenses within a headset. More practically, another April 2021 patent application suggested that gaze detection could be used to tell whether a user had looked at a notification or message on an iPhone.


Malcolm Owen



7 Comments

That seems over-complicated.

Lenovo has licensed software for Windows, available for monitors and other devices with webcams, that blurs the entire screen when you look away or if somebody comes up behind you.

How about having Siri say "Hey you ... quit spying"

Wouldn't it be cool if the entire screen were blurred for everyone except the user viewing it with Apple Glasses/AR?