On Monday at 6:00 PM local time, the EFF and other privacy groups are protesting Apple's CSAM identification initiatives in person at select Apple Retail stores across the country.

The Electronic Frontier Foundation is sponsoring a nationwide protest of the on-device CSAM detection features that Apple announced, then delayed, for iOS 15 and macOS Monterey. The protest is being held in several major US cities, including San Francisco, Atlanta, New York, Washington D.C., and Chicago.

A post from the EFF outlines the protest and simply tells Apple, "Don't scan our phones." The EFF has been one of the most vocal opponents of Apple's CSAM detection system, which was meant to ship with iOS 15, arguing that the technology is no better than mass government surveillance.

"We're winning— but we can't let up the pressure," the EFF said in a blog post. "Apple has delayed their plan to install dangerous mass surveillance software onto their devices, but we need them to cancel the program entirely."

The in-person protests are only one avenue of attack the EFF has planned. The organization sent 60,000 petitions to Apple on September 7, cites over 90 organizations backing the movement, and plans to fly an aerial banner over Apple's campus during the "California Streaming" event.

We need to interrupt next week's #AppleEvent to say clearly— it's not OK to put surveillance software on our phones. https://t.co/6EDXAbo0Wn

— EFF (@EFF) September 11, 2021

Those interested can find a protest in their area, sign up for newsletters, and email Apple leadership directly from this website.

Cloud providers like Google and Microsoft already search for CSAM within their users' photo collections, but they do so in the cloud rather than on users' devices. The concern with Apple's implementation lies with where the processing takes place.

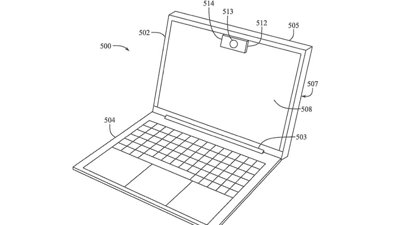

Apple designed the system so that hash-matching against the CSAM database would take place on the iPhone before images were uploaded to iCloud. Apple says this implementation is safer and more private than trying to identify users' photos on a cloud server.
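To make the distinction concrete, here is a minimal sketch of checking a photo against a set of known hashes on the device before it is queued for upload. It is an illustration only, not Apple's implementation: it uses an exact SHA-256 digest as a stand-in for whatever hash the real system uses, and the names `image_hash`, `KNOWN_HASHES`, `matches_known_hash`, and `prepare_upload` are hypothetical.

```python
import hashlib

def image_hash(data: bytes) -> str:
    # Assumption: an exact SHA-256 digest stands in for the real hash function.
    return hashlib.sha256(data).hexdigest()

# Hypothetical database of hashes of already-known images.
KNOWN_HASHES = {image_hash(b"example-known-image")}

def matches_known_hash(photo: bytes, known_hashes: set) -> bool:
    # The check runs locally; only the hash is compared, not the image content.
    return image_hash(photo) in known_hashes

def prepare_upload(photos: list, known_hashes: set) -> list:
    # Pair each photo with its on-device match result before it is uploaded.
    return [(photo, matches_known_hash(photo, known_hashes)) for photo in photos]

results = prepare_upload([b"example-known-image", b"holiday-photo"], KNOWN_HASHES)
```

In this sketch, a photo whose hash is absent from the set never matches, so a lookup of this kind can only flag images that are already in the database.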

A series of poor messaging efforts from Apple, general confusion surrounding the technology, and concern about how it might be abused led Apple to delay the feature.

Wesley Hilliard

12 Comments

Scanning on our devices, bad, scanning on servers, good? I guess it doesn't matter which way they do it, but it would have kept the positives-count private if done on device.

https://nakedsecurity.sophos.com/2020/01/09/apples-scanning-icloud-photos-for-child-abuse-images/

https://www.microsoft.com/en-us/photodna

https://protectingchildren.google/intl/en/

I don’t get this. Other scanning systems can do whatever but Apple’s only reports anything if a significant collection of known CSAM is found and that’s worse?

Anyone who thinks it is a good idea for Apple to scan your phone for images fails to see the bad effects this can ultimately have down the line.

As I have shared before, it only works for images that are already known and stored in the CSAM database; these are images that sick people trade in. It cannot catch newly created images, since they are not in the database and no hash has been created for them. If it can catch new images that are not in the database, then it is looking for features, which could also catch other nude images of young kids that are deemed acceptable, and it becomes an invasion of everyone's privacy. Once the tech is installed, what is going to stop bad actors from using it for other reasons?

No one can say it will only catch the bad guys; no one knows that to be true, since no one really knows how it works. This will catch the stupid people, not the people who are smart and dangerous and who are creating the original images.

You are free to give away your privacy, but you're not free to give everyone else's away. I am glad to see that the EFF is standing up.