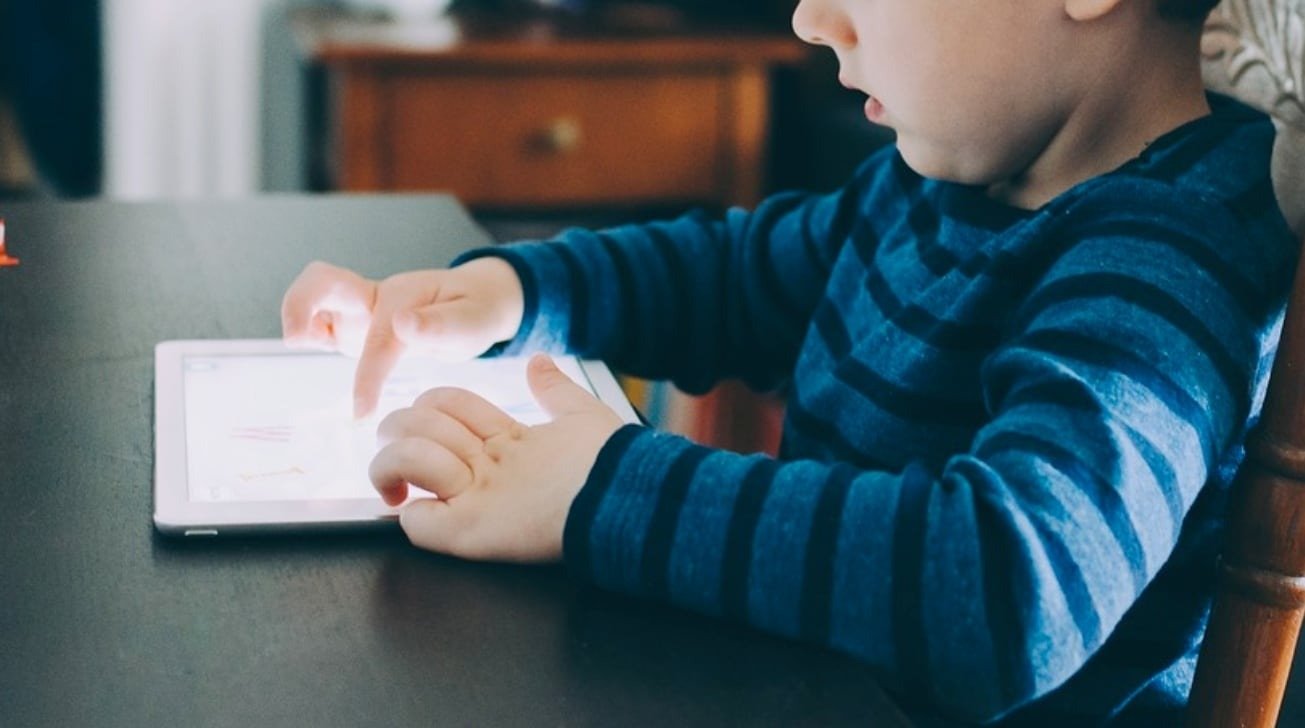

Apple and Google should play a bigger role in protecting children online, according to Facebook's former head of security, as the two platform controllers are ideally placed to limit access to online services based on a user's age.

Laws such as COPPA, along with other mandates intended to protect children online, are meant to keep companies in line with the expectations of lawmakers. However, while these laws exist, it has been suggested that it may be better for platform holders to police how devices are used, rather than relying on website, app, and content creators.

In a Twitter thread on September 28, Alex Stamos of the Stanford Internet Observatory offered suggestions for regulatory changes to improve child safety. The thread covered a number of ideas, but the most significant arguably centered on measures Apple and Google could take as the controllers of the main mobile operating systems and operators of the App Store and Google Play Store.

One measure, spotted by Protocol, proposes changing a device's initial setup process to ask whether the primary user is a child and to store their birthdate locally. The reasoning is that the calculated age, rounded to the year, could then be provided to every app via an API, rather than the user being asked to verify their age every single time.
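
To illustrate the idea, the minimal sketch below assumes a hypothetical on-device profile object that keeps the birthdate private and hands apps only a whole-year age. `DeviceProfile` and `primary_user_age` are illustrative names, not real Apple or Google APIs, and "rounded to the year" is assumed to mean the conventional age in completed years.

```python
from datetime import date
from typing import Optional


class DeviceProfile:
    """Hypothetical on-device store for the primary user's birthdate.

    The birthdate stays inside this object; apps only ever see a
    whole-year age (an assumed reading of "rounded to the year").
    """

    def __init__(self, birthdate: Optional[date] = None):
        # Recorded during initial setup if the primary user is a child.
        self._birthdate = birthdate

    def primary_user_age(self, today: Optional[date] = None) -> Optional[int]:
        """Return the age in completed years, or None if none was recorded."""
        if self._birthdate is None:
            return None
        if today is None:
            today = date.today()
        b = self._birthdate
        # Subtract one year if this year's birthday has not happened yet.
        before_birthday = (today.month, today.day) < (b.month, b.day)
        return today.year - b.year - int(before_birthday)
```

For example, a profile created with a birthdate of June 15, 2010 would report an age of 11 to apps on September 28, 2021, without exposing the birthdate itself.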

For apps that allow users under 18, Stamos suggests developers could be required to publish child safety plans for various age ranges, such as 13- to 15-year-olds or children under 10.

2) Require mobile devices (phones and tablets) sold in the US to include a flow, triggered during initial setup, that asks if the primary user is a child and stores their birthdate locally. The calculated age (rounded to year) should be provided via API to every app.

— Alex Stamos (@alexstamos) September 28, 2021

Stamos suggests that app stores could also play a part by enforcing an age gate based on the primary user's age stored on the device. Developers would need to specify which age groups should be able to use their app, but in theory this would prevent younger users from accessing apps with more adult content.
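
A store-side age gate could, in principle, be as simple as comparing the device-reported age against an age declared by the developer. In this minimal sketch, `AppListing`, `min_age`, and `can_install` are hypothetical names, and treating a device with no recorded age as belonging to an adult is an assumption made for illustration, not part of Stamos' proposal.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AppListing:
    name: str
    min_age: int  # hypothetical developer-declared minimum age


def can_install(app: AppListing, user_age: Optional[int]) -> bool:
    """Hypothetical store-side age gate.

    Assumption: if no age was recorded at setup (user_age is None),
    the device is treated as an adult's and no gate applies.
    """
    if user_age is None:
        return True
    return user_age >= app.min_age
```

A real scheme would likely need richer age bands, such as the 13 to 15 range Stamos mentions, rather than a single minimum.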

Such an approach could minimize the chance of an enterprising younger user bypassing the age gates of specific services, whether to view trailers for mature game titles or simply to access pornography.

Stamos also says that COPPA could be replaced with a law that takes a phased approach for kids, with defined age ranges that gradually grant more online access, rather than "one bright line at 13." Lastly, he mentions the need for cooperation with independent researchers under "reasonable privacy controls."

The thread by Stamos arrives with a sense of irony, as Stamos was previously the head of security for Facebook, a tech giant that has been embattled by age-related issues. The company's plan to create a version of Instagram for younger users was denounced and eventually paused, even as Facebook tried to convince critics that it was a good idea.

Facebook has also been criticized following a major leak of internal documents, with accusations that it cared more about its algorithm than about keeping users safe from hateful content and misinformation.

Apple does provide some parental controls, giving parents a way to restrict what can be done on a device and to set usage limits. However, these controls require a parent to set them up in the first place, which may not be an effective way to protect children.

Apple's attempt to curb the spread of child sexual abuse material (CSAM) with new tools, including one that warns parents if their children are about to view or send sexually explicit images, was roundly criticized as privacy-infringing. The outcry forced Apple to postpone the launch to gather more feedback.

Malcolm Owen

8 Comments

I'd suggest he and FB keep their insight where the sun don't shine.

What he doesn’t mention is how smart kids are. They are more technologically advanced than older generations and can find the hacks to see whatever content they want. Just having a birthdate won’t do enough.

We need Apple and Google to apply the controls b/c we/Facebook will not spend the resources to imagine ways to stop "enterprising younger users bypassing" our controls. I mean, after all, we already have our hands full trying to develop workarounds for the lost revenue resulting from Apple's privacy controls.

Coming from the company that was just caught not protecting kids from its own product, when it knew the product was harmful to teen girls, this sounds like "don't look here, look there, they're the bad ones." When someone accuses you of bad things, it’s because they’re the one doing the bad things.

Let’s take a moment and bathe in the irony that Facebook was recently planning an Instagram for Kids, knowing IG was a key cited cause of suicide in pre-teens and teens—but their former security chief wants to lecture the rest of the world about how to keep kids safe on the internet.