New iOS 15.2 beta includes Messages feature that detects nudity sent to kids

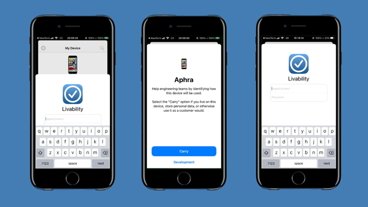

Apple's latest iOS 15.2 beta has introduced a previously announced opt-in communication safety feature designed to warn children — and not parents — when they send or receive photos that contain nudity.

The Messages feature was one part of a suite of child safety initiatives announced back in August. Importantly, the iMessage feature is not the controversial system designed to detect child sexual abuse material (CSAM) in iCloud.

Although not enabled by default, parents or guardians can switch on the Messages feature for child accounts on a Family Sharing plan. The feature will detect nudity in Messages, blur the image, and warn the child.

Apple says children will be given helpful resources and reassured that it's okay if they don't want to view the image. If a child attempts to send photos that contain nudity, similar protections will kick in. In either case, the child will be given the option to message someone they trust for help.

Unlike the previously planned version of the feature, parents will not be notified if the system detects a message contains nudity. Apple says this is because of concerns that a parental notification could present a risk for a child, including the threat of physical violence or abuse.

Apple says that the nudity-detection flag will never leave the device, and that the system doesn't encroach upon the end-to-end encryption of iMessage.

It's important to note that the feature is opt-in and only being included in the beta version of iOS, meaning it is not currently public-facing. There's no timeline on when it could reach a final iOS update, and there's a chance that the feature could be pulled from the final release before that happens.

Again, this feature is not the controversial CSAM detection system that Apple announced and then delayed. Back in September, Apple said it would debut the CSAM detection feature later in 2021. Now, the company says it is taking additional time to collect input and make necessary improvements.

There's no indication of when the CSAM system will debut. However, Apple did say that it will provide additional guidance to children and parents in Siri and Search. In an update to iOS 15 and other operating systems later in 2021, the company will intervene when users search for terms related to child exploitation and explain that the topic is harmful.

Mike Peterson

19 Comments

I’m legitimately curious: how can iMessage “detect” sexually explicit photos being sent to or from a phone?

Does anyone know how this is being done on device?