Apple's annual Worldwide Developers Conference is often mistaken for a hardware product launch event. However, the main purpose of WWDC is to introduce Apple's latest software enhancements and tools to the third-party developers who use them to create apps for Apple's platforms. Still, both hardware and software play a role in advancing Apple's future plans, and WWDC serves as the company's unfurling roadmap of strategic direction. Here's a look at what we've already seen and what we can expect next week.

Apple's evolving development strategies at WWDC

WWDC has always sought to offer Apple's third-party developers deeper insight into the company's overall strategic directions for its software platforms, largely with the purpose of convincing them to invest their time and effort into building apps on top of them.

At the same time, WWDC has also been the stage for new hardware introductions and refreshes, such as last year's iMac Pro and the new HomePod. The latter had very little apparent relevance for third-party development. However, there are previous examples of Apple outlining the beginnings of a strategy at WWDC that didn't fully emerge for several years.

Twenty years ago at WWDC 1998, Steve Jobs detailed Apple's plans for what would eventually ship as Mac OS X. The new software didn't actually ship in beta form until 2000, and it took another two years before Apple began making its advanced new OS the default platform for its Mac hardware. During that eternity of delay, Apple debuted iBook and PowerBook G3, and introduced iPod.

Ten years later at WWDC 2008, Jobs focused on a new mobile platform, which at the time Apple was calling "OS X iPhone." The company was also newly introducing the concept of the iOS App Store, offering developers the ability to work with the same development APIs it used internally to create the iPhone apps bundled on the device.

Jobs also laid out a future strategy for iCloud, then known as MobileMe. The company's new take on cloud-based app storage also took years to work through its first awkward stages, but within a few years it too became a solid part of Apple's overall strategy. It is now deeply woven into macOS, iOS and Apple's other platforms, and drives new hardware including HomePod.

In 2010, Apple showed off iPad, its future vision of tablet-based iOS computing that had actually predated Apple's plans for a smartphone, and began calling its mobile platform "iOS." The real timeline of how things were developing inside of Apple was not the same as the public releases that the rest of us were observing from the outside.

Machine Learning, AI vision and AR

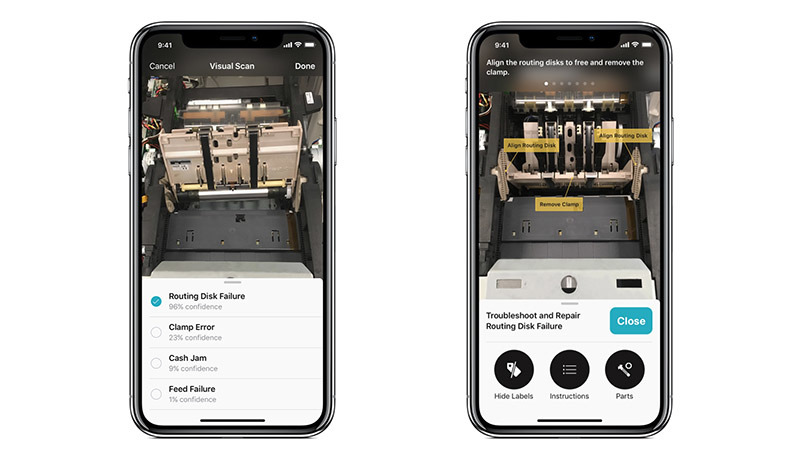

Last year, Apple further exposed internal work as public development interfaces for third parties to build on top of. These included Core ML, the machine learning framework for computer vision and natural language processing that Apple had already been using inside Siri, its Camera app and the QuickType keyboard. Now, developers could begin building their own model-based ML features.

IBM has since released "Watson Services for Core ML" support for MobileFirst apps to analyze images, classify visual content and train models using its Watson Services, bringing Core ML into enterprise mobile apps.

Apple also detailed at WWDC how the "magic" behind its dual-lens Portrait photos works, and exposed its Depth API for developers to use in their own apps.

It did the same for iPhone X's TrueDepth structure sensor camera, enabling third parties to do facial tracking, and it unveiled an entirely new set of ARKit tools for building Augmented Reality experiences that worked on any A9 or better iOS device.

Motion, wearables, VR, Metal and GPU

ARKit is a major extension of the work Apple has done in Core Motion, which Jobs first introduced in 2010 on the gyroscope-equipped iPhone 4. Apple has since built custom motion coprocessors into its mobile devices to track ongoing physical activity for the health and fitness features in HealthKit. Paired with camera vision ML, iOS devices can now handle Visual Inertial Odometry.

We can expect to see big new leaps over last year's surprising introductions, with not only ARKit but also applied advancements to health-related fitness tracking in Apple Watch. The applications of motion were important enough for Apple to make them a major focus of its annual presentation to shareholders this February, showing a video of people whose lives were changed or even saved by its wearable technology that serves as a fitness coach, bionic bodyguard and a mobile link to the outside world.

Apple is particularly well positioned in wearables, already offering a tactile, glanceable computer that looks like a fashion watch and an audio AR experience in the form of affordable earbuds. Rumored to be next are glasses presenting visual AR and Virtual Reality experiences, something Apple advanced last year in the form of new VR content development on Macs, tied to external GPU support.

Apple has also progressively rolled out advancements to Metal, its hardware-optimized framework for GPU coding first introduced in 2014.

Over the last few years, it brought Metal to the Mac and advanced Metal to do more. Last year, it moved its own A11 Bionic chips to support Metal 2 using a custom Apple GPU design, with surprisingly little fanfare for such a massive undertaking.

iOS UI, Intelligence and messaging apps

Specific to iPad, last year Apple introduced iOS 11 Drag and Drop, a multitasking navigation experience that required rewriting the entire upper-level interface. This year, with another year of polish and development, we can expect iOS 12 to focus on stability after a growing period that introduced more UI glitches than iOS users have perhaps ever had to endure.

There's also talk of bringing iOS and macOS UI development closer together, potentially making it easier to port iOS code to run on Macs. In previous years, Apple did something similar with APFS, radically updating and harmonizing the core file system with minimal interruption for users on both iOS and Macs.

We'd also like to see advancements made in areas such as Data Detectors and text services, where iOS and Macs often come close to greatness before falling over sideways. Recent features such as Continuity-based copy and paste across devices, Handoff of documents, Maps from addresses, indoor mapping and calculated travel times for calendar events suggest that there is a lot more in the pipeline waiting to increase the intelligence that our modern devices can offer.

With Apple's emphasis on fitness and Apple Watch mobility, perhaps we'll see Maps catch up in offering urban biking directions and supplying better off-road and offline maps for hikers, mountain bikers, skiers and other outdoor sports enthusiasts.

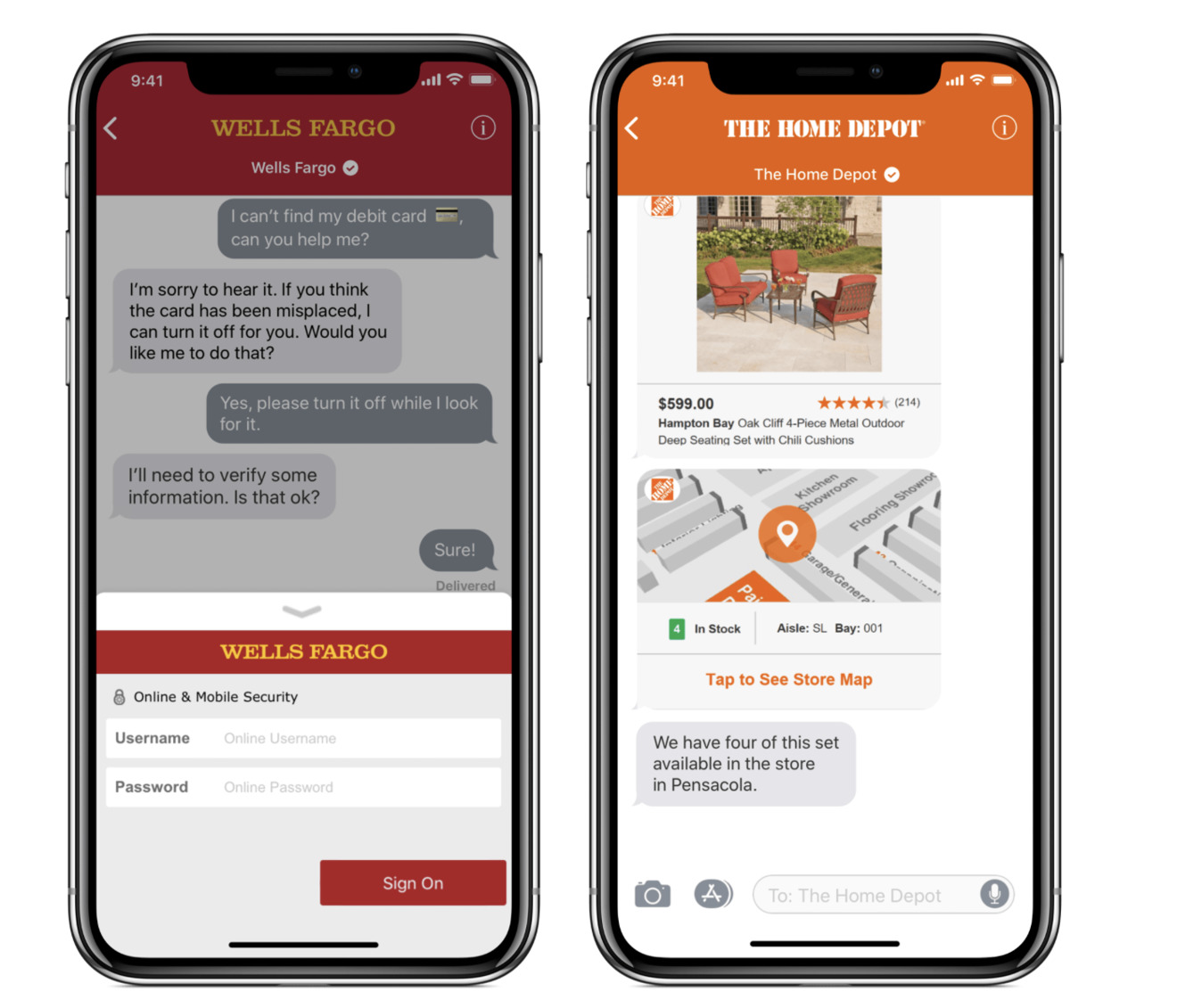

Another thread of advances that has progressively emerged at WWDC is new iMessage features, and particularly iMessage apps. Last year these supported the creation of Apple Business Chat, a new platform for companies to use in direct communications with their clients, using rich, in-chat software elements to handle orders and payments, schedule appointments and make custom selections.

When iMessage apps first appeared, some people only saw frivolity and an unwelcome complication to their simple chat app. Apple was actually rolling out the beginnings of a new platform.

Other new developments in the WWDC pipeline

There are similar advances Apple appears to be incrementally preparing. As we've previously noted, Apple has made recent acquisitions including Texture, the "Netflix of magazines," with the apparent intent of deploying a periodical subscription service.

Apple has already been working to embellish News and add more video content and is also working to develop custom content for Apple Music and what may eventually be its own parallel subscription video service.

Apple also recently acquired Lattice Data, a programming and execution framework for statistical inference that analyzes piles of unstructured data, and Workflow, the automation tool that builds actions into events you can trigger with a touch.

By integrating Workflow with Siri, Apple could greatly expand the richness of what both developers and even casual users can do, by making it easy to set up regular tasks that could be triggered by a voice request, or a tap on Apple Watch.

Apple also reportedly acquired the employees of Init.ai, a natural language startup, and recently hired John Giannandrea, Google's chief of artificial intelligence, to head up Apple's machine learning and AI strategy.

Regarding that hire, Apple's chief executive Tim Cook noted in a message to employees that "John shares our commitment to privacy and our thoughtful approach as we make computers even smarter and more personal."

More Siri at WWDC

Apple has been investing in making Siri's voice more natural with human intonation. It has also replicated the "always listening" features first introduced by Amazon and Google, allowing iOS, Apple Watch and now HomePod to listen for "Hey Siri."

Another way Siri could get better is for more commands to work locally. Apple has already made some initial stabs in this direction, but expect to see more at this year's WWDC.

As with any voice assistant, when the cloud is down or when connectivity is lost, Siri's not very useful at all. Recently, when my Internet went down HomePod couldn't even tell me what time it was, despite all of its onboard computing power. Playback controls and basic tasks like time and local data details (such as calendar and contacts) are all things that Siri could drastically improve upon by doing more processing locally.

For devices like AirPods that lack the ability to do much local computation, the mesh of Continuity could handle Siri tasks on your phone or potentially even Apple Watch, accelerating how quickly a voice command can be put into action, even when Internet cloud connectivity is limited.

While it's easy to complain about Siri and its deficiencies in comparison to rival services with their own unique strategies and intents, it's also rarely noted that Apple's strategy involves making Siri work across far more languages and regions. That not only pertains to the languages it seeks to understand but the interests of users in other countries. Siri supports information on over 100 sports leagues globally, for example, making it relevant to users seeking updates on games being played in their home country.

There are still lots of areas Siri needs to radically improve in to become extremely useful. But a key differentiation in how it works compared to other voice services is that, just as with iMessages, its communications are encrypted both ways, and requests you make are not added to a marketer's profile.

When you ask Siri about the weather, for example, your request is handled without linking your query to your Apple ID and forever remembering who asked about weather where, extrapolating your location and deciding what you might be enticed to buy in the future based on your question.

Siri's efforts to respect users' privacy and keep their data secured rather than mining it for marketing opportunities are not lost upon buyers. It appears that Apple will have an easier time improving how Siri works than rivals will have in earning trust back from users. Currently, the number of people enraptured with the entertainment-level novelty of voice is small and likely short-lived. Once we start commonly using voice commands for more important things, the nature of their security and trustworthiness will be far more important than whether a complex conversation query could be handled back in 2018.

Amazon and Google were once hailed as having a tremendous advantage over Apple due to their openly expressed policy of consuming user data and freely using it to improve their own services. But Apple then detailed its own differential privacy efforts to anonymize samples of user content without risking the leakage of personally identifying data.

As a result, Apple now employs similar deep machine learning on vast amounts of user data without the same privacy concerns, but Amazon and Google can't ever be fully trusted with the data they have taken, nor do consumers generally trust that anyone outside of Apple really cares about their privacy. The "potential for improvement" tables have turned.
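To make the differential privacy idea above a little more concrete, here is a minimal sketch of randomized response, a classic textbook technique in this family. It is not Apple's actual implementation, which Apple has described only at a high level; the function names, the 75 percent truth probability and the simulated population are all assumptions chosen for illustration. Each simulated user sometimes reports the opposite of the truth, so no single report can be trusted, yet the aggregate rate can still be recovered.

```python
import random


def randomize(truth: bool, p_truth: float, rng: random.Random) -> bool:
    """Report the true bit with probability p_truth, otherwise its opposite."""
    return truth if rng.random() < p_truth else not truth


def estimate_true_rate(reported_rate: float, p_truth: float) -> float:
    """Invert the noise: reported = p*t + (1 - p)*(1 - t), solved for t."""
    if p_truth == 0.5:
        raise ValueError("p_truth of 0.5 destroys all signal")
    return (reported_rate - (1.0 - p_truth)) / (2.0 * p_truth - 1.0)


def simulate(n_users: int, true_rate: float, p_truth: float, seed: int = 0) -> float:
    """Hypothetical population: each user holds one private yes/no fact."""
    rng = random.Random(seed)
    yes_reports = 0
    for _ in range(n_users):
        truth = rng.random() < true_rate
        # Only the noisy report ever leaves the (simulated) device.
        yes_reports += randomize(truth, p_truth, rng)
    return estimate_true_rate(yes_reports / n_users, p_truth)


if __name__ == "__main__":
    # With many users the estimate should land close to the true 30 percent.
    print(simulate(n_users=100_000, true_rate=0.3, p_truth=0.75))
```

The trade-off is the one the article hints at: the closer the truth probability is to a coin flip, the more deniability each individual gets, and the more users are needed before the aggregate estimate becomes useful.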

Apple's reputation moat

Recall that several years ago, Android phones essentially had a monopoly on 4G LTE service, a truly compelling and vast jump in data speed over what iPhone 5 could do at the time. That advantage lasted for years, but today is irrelevant.

Qualcomm is now trying to resurrect this in advertising the potential for 1.2Gbit mobile data on its Android chipsets, something that isn't even available in practice from typical mobile networks. But that marketing hasn't stopped Apple's iPhones from being the most popular devices around the world, even with a substantial price premium.

If novel features like voice search and AI were really compelling enough to drive significant numbers of buyers to new hardware, Google's Pixel 2 and Andy Rubin's Essential phone would not have been total duds. The reality is that mainstream buyers consider factors like longevity, reliability and brand experience, and that gives Apple a reprieve from chasing down every short-term tech fad and brief feature advantage its competitors can offer.

That doesn't mean, however, that Apple can just sit around basking in its past accomplishments. This year we expect to see some major advances for not just the (dare I say it, "beleaguered") Siri, but other initiatives including HomeKit, ARKit, Core ML, Metal and GPUs, and of course wearables, with Apple Watch seeing new fitness-related capabilities and expanded ways to make easy purchases and activate devices via NFC.

There's another device in particular that we are likely to see more about at WWDC, which will be considered tomorrow. What do you expect to see more of at WWDC? Let us know in the comments below.

Daniel Eran Dilger

28 Comments

I'd like to see more VR news. I was surprised last year by the onstage Star Wars demo. Since then, though, it's been hard to get any support from SteamVR and Vive, and while I've managed to create some VR experiences using Unity, it would be nice to hear about some extra VR support from Apple.

FCP X already supports VR headsets, since last year's update. Here's a 360° video I shot at the official reveal: https://www.youtube.com/watch?v=cWvumlKDuss&t=39s

One should not forget about HomeKit - there are a lot of Smart Home Gateway Users eagerly waiting for being able to a) expose their connected devices to HomeKit and b) integrate HomeKit-Devices into their Gateway. A lot of work has been put into this area by 3rd party gateway developers lately and all of them are waiting for the Go of Apple (my vendor has it running in beta and it works as designed - one-directionally integrating Z-Wave/ZigBee and Enocean-Devices into HomeKit only so far).

HomeKit itself will without any doubt be more integrated into Voice services and hopefully into macOS (a dedicated Home app is still missing), while a lot of supported functionality is generally missing from the iOS Home App UI at the moment. I doubt that HomeKit will be exposed to 3rd party Voice systems, though.