Machine learning led to more powerful technologies people ultimately dubbed "artificial intelligence." While the term is largely a misnomer borrowed from science fiction, Apple eventually embraced it, then moved past it with Apple Intelligence.

Apple CEO Tim Cook will tell you that Apple Intelligence isn't named because of the "AI" that came before it. Instead, he'll insist that it was given the name that best described the technology.

Regardless, Apple Intelligence is Apple's take on the generative technologies that have taken the world by storm. OpenAI, Google, and others have flooded the market with imperfect implementations in the hope of wresting away some early market share.

Despite pundit declarations that Apple was behind and would never catch up, the company has had a hand in machine intelligence for over a decade. Now, finally, Apple has chosen to appease these complainers by introducing a more nuanced version of the technology.

A limited set of features like Writing Tools and the new Siri animation became available as of iOS 18.1, released in October 2024.

iOS 18.2 was released on December 11, 2024. It includes Image Playground, ChatGPT integration, Visual Intelligence for iPhone 16, and Genmoji.

Apple released iOS 18.3 in January 2025 with few AI-focused updates. The company has since announced that the app intents system, which would make Apple Intelligence and Siri more contextually aware, has been delayed into the coming year.

What's next for Apple Intelligence

Apple Intelligence is an on-device model with custom prompts depending on the mode it is being used in, like Writing Tools or Image Playground. Siri will get smarter thanks to Apple Intelligence, integrations with ChatGPT, and third-party app intents, but it still doesn't have an LLM backend.

Siri may have new features that let it understand user prompts better and will soon gain a better understanding of what's on the display, but these are AI parts in a machine learning product, not a full overhaul. Such an overhaul of Siri's backend may come, but not until iOS 19.

A rumor suggests Apple will rebuild Siri with an LLM backend that will fundamentally change the assistant into a full-on chatbot similar to ChatGPT. However, Apple won't reveal iOS 19 until June 2025 and the new LLM backend isn't expected until early 2026.

Meanwhile, users are still waiting on the more contextual Siri and Apple Intelligence promised with iOS 18. That feature set was delayed and may not appear until early 2026.

iOS 19 and AI updates

Work on Apple Intelligence features in iOS 18 has reportedly set back development of iOS 19, so features expected at its September 2025 launch may be pushed back. However, since iOS 19 won't be announced until June, there is no way of knowing what Apple originally planned to ship.

Rumors suggest that Apple is working on implementing an AI agent for Apple Health that would be a kind of "AI doctor." There's little chance Apple would actually call it a doctor for regulatory reasons, but it could perform useful functions with health data.

There is a massive amount of data that users can contribute to Apple Health. Everything from sleep tracking to calories eaten shows up in the app, and it can be a lot for users to parse.

An AI Health agent could provide useful insight into Health data while remaining private, on-device, and secure. While users shouldn't expect medical advice, it could help them make health-conscious decisions about diet, exercise, and other actionable items.

Concerns regarding Apple Intelligence

So-called artificial intelligence hit the market running and has been iterated at a full sprint since. The explosive growth of the AI industry relied on having access to mountains of data gathered from the internet and its users.

Some companies, like Google, had a strong foundation to train their models thanks to the literal mountains of data collected from users. However, there wasn't any way to predict exactly how models would react to learning from the trove of human-produced data.

The result, so far, has been chatbots and search engines that hallucinate because they draw on unreliable, non-factual sources. Replies to searches and chats can produce funny suggestions, like putting glue on pizza, but at other times they produce something potentially dangerous.

Other competitors, like OpenAI, release updates that seem to lean into the meme culture of the internet. ChatGPT's image generation tool went viral as users created Studio Ghibli-inspired art based on photos they fed it.

Apple Intelligence is a group of foundational models built to run either at a smaller scale for iPhone, iPad, and Mac or at twice the size or bigger on a server. It is a testament to Apple's vertical integration of hardware and software.

Since the models are local to the user's device, they can access sensitive data like contacts, photos, and location without the user needing to worry about compromising that data. Everything gathered by Apple's AI never leaves the device and isn't used to train the model.

If a request can't be run on the device, it can be sent to a server owned by Apple, running Apple Silicon with a Secure Enclave. All data sent off the device is encrypted and treated with the same protections it would have if it stayed local. This server-side technology is called Private Cloud Compute.

Even with privacy and security at its foundation, Apple Intelligence still had to be trained on something. Apple purchased licensed content for some of the training, but other data was sourced from Applebot, a web scraper that has existed since 2015 for surfacing Spotlight search data.

There is an ethical conundrum surrounding Apple's model training practices, but the company has said it took steps to ensure that all public training data was freely available on the web, lacked copyrighted or personal content, and was filtered for low quality. It's a small reassurance, given that other companies showed no regard for such precautions.

There has also been some concern about Apple Intelligence and the environment. On-device models don't have an impact on Apple's green energy initiatives, and server-side computation is performed on Apple Silicon, which is powered by green energy sources.

Apple Intelligence features

Apple Intelligence is made up of several models that have specific training to accomplish different tasks with minimal risk of error or hallucinations. There are several planned features at launch, with more coming over time to iOS 18, iPadOS 18, and macOS Sequoia.

Writing Tools works anywhere text entry is supported across the device's operating system. It analyzes text to proofread for errors, change the tone of the text to be more professional, or summarize the text.

Priority Notifications analyzes incoming notifications so the most important ones appear at the top, and it summarizes them too. That keeps messages, deliveries, and other important information available at a glance.

Data summarization is everywhere in the supported operating systems. Summarize web pages, emails, text threads, and more.

Apple has come under fire because notification summaries sometimes combined different news headlines into one that was wrong, confusing, or suggested someone had died when they hadn't. As a result, summarized notifications will get an updated UI element so users know they are reading an AI summary and not a headline.

Image Playground is an Apple app that generates images like emoji or cartoon-styled art. There is a standalone app, but the function also appears in other places, such as iMessage. It arrived in iOS 18.2 to mixed reviews, as its results at launch were often neither flattering nor useful.

Apple has more AI features separate from its proprietary systems thanks to a partnership with OpenAI. They are off by default, but if enabled, users can send some requests to ChatGPT. Every request must be screened and approved by the user, and all data from the interaction is discarded.

Users can carry out more natural conversations with Siri, even if they make a mistake and correct themselves mid-sentence. And as long as the user asks questions within a short span of time, Siri will remember context from previous queries.

More advancements are coming to Siri later thanks to a planned update involving app intents.

App intents & contextual AI

Siri is set to get a huge boost from Apple Intelligence by having access to data presented by the various on-device models. The smart assistant is more knowledgeable about the user, their plans, who they're talking to, and what data is within apps.

The voice interactions with Siri will primarily serve as a way to activate Apple Intelligence features from anywhere. However, Siri will have additional abilities thanks to app intents providing context for what is visible on the display.

Apps with Apple Intelligence Siri support:

- Books

- Calendar

- Camera

- Contacts

- Files

- Freeform

- Keynote

- Magnifier

- News

- Notes

- Photos

- Reminders

- Safari

- Stocks

- Settings

- Voice Memos

The upgrade to Siri could take some time. Rumors suggested Apple would not release the new Siri until iOS 18.4 sometime in spring 2025, but that has been delayed into later 2025 — likely an iOS 19 release.

It's Glowtime

Apple's iPhone 16 event occurred on September 9, 2024, and had a clear theme around Apple Intelligence. The "Glowtime" name is a reference to the new Siri glow, which was a core part of the event.

The entire iPhone 16 lineup can take advantage of Apple Intelligence features. The A18 and A18 Pro have a more powerful Neural Engine, and the new devices are what Apple calls the first iPhones designed from the ground up for AI.

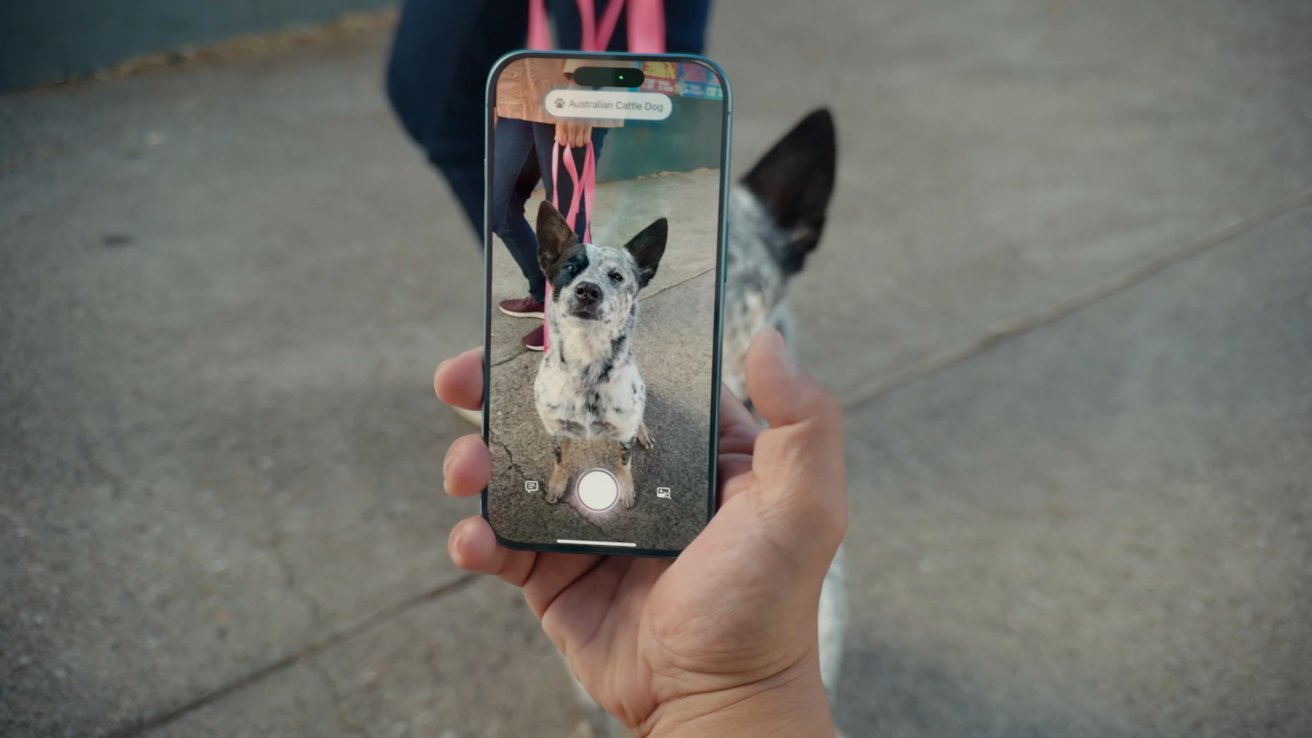

The Visual Intelligence feature tied to the Camera Control button was also revealed. At launch, it was exclusive to the iPhone 16 lineup.

iPhone 16 supercycle

Analysts expected Apple to have a record release cycle with iPhone 16 thanks to Apple Intelligence. Anyone who doesn't own an iPhone 15 Pro or iPhone 15 Pro Max, which is most people, has to upgrade to get access to Apple's AI.

That means 2024 was expected to be a significant year for iPhone sales similar to the 5G boom and the bigger iPhone craze launched with iPhone 6 Plus. Consumers know about AI like ChatGPT and want access to it, and Apple's promise of private, on-device models could have been enticing.

However, evidence showed that while demand was strong for the iPhone 16 lineup, a supercycle didn't happen. It could be attributed to the slow rollout of AI features or lack of overtly exciting party tricks at launch.

Visual Intelligence

Press and hold the Camera Control button on any iPhone 16 to launch the Visual Intelligence experience. It acts as a Google Lens-like feature that lets the iPhone "see" the world and interact with different elements.

Point the camera at a concert poster to add the event to your calendar. Or, launch directly into the visual lookup feature and check dog breeds or flower names just by taking a photo.

Two buttons at the bottom will take whatever is in the viewfinder and pass it to Google for search or ChatGPT for parsing. As is standard with Apple Intelligence and AI on the iPhone, all interactions with AI are user-controlled.

Visual Intelligence later expanded to the iPhone 15 Pro and iPhone 16e via the Action button.

Apple Intelligence requirements

Apple claims that Apple Intelligence couldn't exist until it could run locally on a device, and that wasn't possible until the A17 Pro. So, the available devices for the technology are very limited.

The base requirements appear to be tied to the size of the Neural Engine and the amount of RAM. The iPhone 15 Pro and iPhone 15 Pro Max have 8GB of RAM and run the A17 Pro with a sizeable Neural Engine.

The launch of the iPhone 16 lineup added four more devices that support Apple Intelligence, which could spur demand.

Macs and iPads can run Apple Intelligence as long as they have an M-series processor. These desktop-class chips have always paired a large Neural Engine with at least 8GB of RAM.

Apple Intelligence release date

Apple Intelligence launched to the public with iOS 18.1, iPadOS 18.1, and macOS Sequoia 15.1 on October 28, 2024. The initial features included Writing Tools, Photos Clean Up, and system-wide summaries.

iOS 18.2 arrived in December 2024 with Image Playground, ChatGPT integration, and Visual Intelligence. More features are delayed until iOS 19.

Everything below this point is based on pre-WWDC 2024 rumors and leaks. It has been preserved for reference.

Pre-announcement AI rumors

Apple is known to adopt its own marketing terms to describe existing phenomena, like calling VR or MR by the in-house term "spatial computing." It seemed like Apple was going to lean into artificial intelligence, or AI, as a term, but it is expected to add a twist by calling it "Apple Intelligence."

Whatever Apple calls it, the result is the same. There will be system-wide tools that run using generative technologies that are much more advanced than the machine learning algorithms they'll replace or augment.

Apple may reveal plans for a server-side LLM during WWDC, but rumors suggest it isn't ready for prime time. Instead, the focus will be on local models made by Apple that can call out to third-party server-side LLMs like ChatGPT.

Local Apple Intelligence

OpenAI's ChatGPT and Google's Gemini Large Language Models (LLMs) have one thing in common: they are gigantic models that live on servers and are trained on every ounce of data available on the internet.

The "Large" in LLM is literal, as these models can't live on something like an iPhone or Mac. Instead, users call the models and their various versions through the cloud via APIs. That requires data to leave the user's device and head to a server they don't control.

Server-side models are a must for such enormous data sets, but they come at the cost of privacy and security. It may be harmless to ask an LLM to generate a photo or write an essay about historical events, but asking it to work out a personal budget may cross a line.

Apple hopes to address these types of concerns by providing more limited local models across the iPhone, iPad, Mac, and Apple Vision Pro. The pre-trained models will serve specific purposes and will contain very limited but specialized data sets.

The on-device models will learn based on user data. The data will never leave the device without explicit permission.

Upgrading Siri

A part of local Apple Intelligence will be providing significant upgrades to Siri. Apple's assistant is expected to become much more conversational and understand contexts better.

A specific model, likely based on the "Apple Ask" pre-trained model that's been tested internally, will be the driver behind Siri. Its limited data set is said to be a protection against hallucinations, which plague LLMs like Google Gemini and ChatGPT.

Users can ask Siri to perform many tasks across Apple-made apps, from simple to complex. It isn't yet known if an API will allow developers to target this new Siri, but it is likely.

Apps with Apple Intelligence Siri support:

- Books

- Calendar

- Camera

- Contacts

- Files

- Freeform

- Keynote

- Magnifier

- News

- Notes

- Photos

- Reminders

- Safari

- Stocks

- Settings

- Voice Memos

Many Siri functions in these apps involve general navigation and search, like opening a specific book or creating a new slide.

It seems many of the functions found in the new Siri commands are derived from previously available accessibility options that could control the device via voice. This melding of features will provide more control for all users, not just ones relying on accessibility options.

Apple Intelligence at WWDC

All will be revealed during WWDC 2024. Apple is expected to reveal Apple Intelligence during its keynote, where iOS 18, iPadOS 18, macOS 15, tvOS 18, watchOS 11, and visionOS 2 will also be announced.

William Gallagher

Wesley Hilliard

Malcolm Owen

Marko Zivkovic