A report on Monday claims Apple is putting together a team of A-list speech recognition researchers, including high-ranking employees from Nuance, to create an in-house Siri engine based on neural networks.

According to Wired, Apple has assembled a group of software engineers and researchers from Nuance, the firm responsible for Siri's voice-recognition functionality, as well as from other companies, to work toward a next-generation backbone for the virtual assistant.

The publication points to a number of Apple hires over the past few years, including Nuance's former vice president of research Larry Gillick and Gunnar Evermann, who is currently working as Siri's speech project manager.

Speaking to the publication, Microsoft research division head Peter Lee said Apple hired Alex Acero away from the Redmond, Wash., software giant in 2013. Acero is now a senior director on the Siri team.

Neural networks are machine learning algorithms loosely modeled on the way neurons in the brain work. According to Wired, IBM, Microsoft and Google have already deployed this deep learning technology in various speech-related applications.

Microsoft, for example, is using a neural network to power the real-time translation feature set to debut in Skype later this year. Google is dabbling in neural nets with Android's "Google Now" speech recognition functionality.

Given the reported hires, Lee believes Apple is planning a neural net-powered Siri backbone built entirely in-house.

"All of the major players have switched over except for Apple Siri," Lee said. "I think it's just a matter of time."

Last year, a report claimed that Apple had assembled a small team of experts, including former employees of a firm called VoiceSignal Technologies, to develop speech recognition technology specifically for the Siri personal assistant. The group supposedly works out of the company's Boston office.

Earlier in June, The Wall Street Journal reported that Nuance was exploring a sale to either its partner Apple or Samsung. Apple may now be a step ahead of Nuance, having poached talent from the company for its own in-house team.

"Apple is not hiring only in the managerial level, but hiring also people on the team-leading level and the researcher level," said Abdel-rahman Mohamed, a University of Toronto researcher who was supposedly asked to join Apple's team. "They're building a very strong team for speech recognition research."

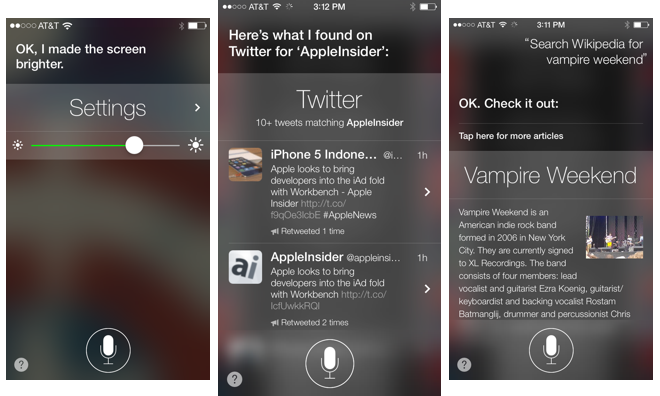

Current builds of Apple's next-generation iOS 8 still include a Nuance-powered Siri, though the virtual assistant has a few tricks up its sleeve with Google Now-style real-time speech-to-text and smart home product control with HomeKit integration, among other enhancements.

10 Comments

I sure hope they do something by the next iOS release, because I feel like Siri really hasn't gotten better at speech recognition since its debut; only more services (which are great) have been added to what it can do.

[quote name="AppleInsider" url="/t/181016/apple-reportedly-putting-together-team-of-speech-recognition-experts-for-neural-network-powered-siri#post_2558095"] Earlier in June, The Wall Street Journal reported that Nuance was exploring a sale to either partner Apple or Samsung. It appears that Apple may be a step ahead of Nuance after poaching talent from the company for its own in-house team. "Apple is not hiring only in the managerial level, but hiring also people on the team-leading level and the researcher level," said Abdel-rahman Mohamed, a University of Toronto researcher who was supposedly asked to join Apple's team. "They're building a very strong team for speech recognition research." Current builds of Apple's next-generation iOS 8 still include a Nuance-powered Siri, though the virtual assistant has a few tricks up its sleeve with Google Now-style real-time speech-to-text and smart home product control with HomeKit integration, among other enhancements.[/quote] Now that the zombie has been sucked dry of talent, it's time for Google to swoop in and overpay for what's left. It's hard to believe Siri's out of beta and all grown up now... I wonder how long before she's given a chip of her own?

Siri needs a grammar loop in that neural net. She makes dictation choices seemingly based on what words are used most often, not what makes sense. For example, in a dictation to a musician I said something like, "I really enjoyed hymn 345." Of course Siri made it "him 345." These mistakes happen all the time. It really hobbles the usefulness of dictation when you have to comb back over it and fix a problem every 10-15 words or so.

Siri plays no part in dictation. That's Nuance's speech recognition engine.

[quote name="mjtomlin" url="/t/181016/apple-reportedly-putting-together-team-of-speech-recognition-experts-for-neural-network-powered-siri#post_2558235"] Siri plays no part in dictation. That's Nuance's speech recognition engine. [/quote] I'm sure he knows that, but Siri includes the Nuance/Dragon Dictation-based engine, so I think it's fine to simply use the term Siri even when referring only to the dictation aspect of the service.