Rumors about iPhone 7 have so far revolved around purportedly leaked schematics and part images, which point only to obvious potential hardware changes (a dual-lens camera, an iPad Pro-like Smart Connector, and replacing the legacy headphone jack with Lightning). However, many of Apple's biggest iPhone leaps have been externally invisible, driven by software advances that unlock the power of the advanced silicon inside. Many of those advances debuted at WWDC.

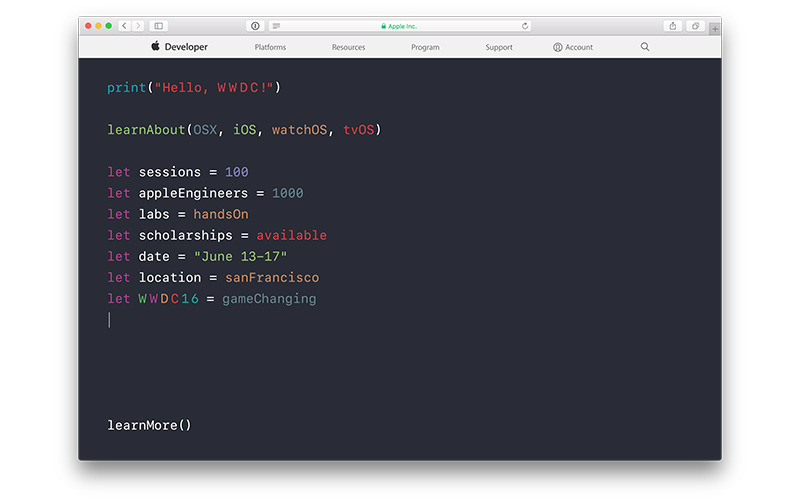

Apple's Swift invite to WWDC 2016

Apple is notoriously secretive about its future plans right up until it is ready to release new hardware at its Apple Event launches, or new software and cloud services at its annual Worldwide Developers Conference in June. Here's what to look forward to at next month's developer event, focusing specifically on iOS 10 and the devices that run it. Other articles look at Apple Watch and watchOS, Apple TV and tvOS, and Apple's Mac and iCloud platforms.

WWDC is all about software, as it is the company's annual meeting with app developers, accessory partners and others who interface with Apple's platform APIs. While the company has occasionally debuted new hardware at WWDC, in recent years the event has focused almost entirely on new software releases (iOS, Mac OS, watchOS and iCloud) and ways developers can make the most of Apple's platforms.

Apple's WWDC has showcased incremental advancements that provide clues about where Apple is headed, including strong foreshadowing of future hardware.

For example, Apple began talking to developers at WWDC about gaming-related hardware controllers long before it introduced Apple TV. It also unveiled its Bluetooth-based Continuity services before introducing Apple Watch, which tightly integrates with your iPhone using the same technologies first perfected on Macs and iOS devices. Last year's iPad multitasking features in iOS 9 were a harbinger of the two new upscale iPad Pro models aimed at business users.

Previous iPhone innovations were often invisible to spy shots

Consider first how little we knew about earlier iPhone releases just from spy photos circulated before their launch. Apple's second-generation iPhone 3G debuted a cheaper plastic case than the original model, intended to make it affordable to a much larger audience. But inside that shell was a series of major improvements, ranging from its application processor chip to its camera and support for 3G mobility and GPS location services. A spy photo would not have revealed any of that.

The next year, iPhone 3GS looked virtually identical, but it added a digital compass and a video camera, and doubled its processor speed. That enabled it to run a new class of more powerful and sophisticated apps without any radical reshaping. Once again, that model wildly outsold its two predecessors.

Apple's new design for iPhone 4 notoriously leaked when a prototype was left behind at a bar and discovered. But despite having real Apple hardware in their hands, bloggers at Gizmodo didn't realize that the device would launch a new high-resolution Retina Display, and they knew nothing about its powerful new A4, the first ARM chip Apple had developed internally with significant silicon optimizations.

They also didn't know, just from looking at it, that the device would launch the all-new FaceTime, or that it would include the first gyroscope in a smartphone. iPhone 4 went on to become the biggest smartphone launch of its time.

The subsequent iPhone 4s built upon these enhancements with even more invisible updates, including hardware enabling efficient Siri voice recognition (new mics and sophisticated noise-canceling hardware). It also added support for the new iMessage service in iOS 5, and a new leap in application processor speed with the A5 chip.

Apple's next external jump, iPhone 5, introduced a taller screen and the new Lightning connector, neither of which was widely seen as a must-have feature or an example of revolutionary innovation. The biggest improvement, besides its faster A6 chip and greatly enhanced camera, was support for LTE, something that many carriers at the time were just beginning to roll out beyond major cities. It went on to sell more units than ever, and lived on for another year as iPhone 5c.

iPhone 5s similarly looked little different from its predecessor apart from the metallic ring around its Home button alluding to its support for Touch ID. Its biggest leap was the surprise delivery of A7, the world's first 64-bit smartphone chip. That chip also incorporated a very sophisticated imaging engine that helped establish iPhones as excellent mobile cameras, drawing a sharp distinction with cheap Android phones that smeared memories into blurry photographs.

Apple has since launched two iPhone 6 models that did change significantly in outward appearance, with larger, higher-resolution displays. But the A8 behind the scenes, packing a much faster GPU, did much of the work to make those larger screens possible while still retaining iPhone's lag-free user interface.

Last year's iPhone 6s models similarly had no physically discernible new features, but got a more durable frame, a faster A9 chip and significantly better cameras (with software support for Live Photos). They also added sophisticated support for 3D Touch pressure sensitivity, creating distinctive shortcuts throughout the user interface, on the Home screen and within apps that adopted the new feature (and tons of developers quickly scrambled to do just that).

Despite looking little different from the original iPhone 6, iPhone 6s slightly outperformed its predecessor in its crucial launch quarter, even amid more difficult economic conditions and very unfavorable currency changes, particularly affecting China.

iPhone 6s didn't need a wildly new form factor to maintain the same unit sales surge as its blockbuster predecessor.

This indicates that while iPhone 7 might not look radically different on the outside, it wouldn't be out of line for a new iPhone to deliver a major jump in features and capabilities through externally invisible hardware advancements, along with new software that takes advantage of them and exposes them to third-party developers.

New at WWDC: iOS 10

While iPhone 7's headline features aren't expected to arrive until September, a new version of iOS regularly debuts at WWDC in June, often paving the way for new features on the upcoming iPhone. We won't know for certain everything Apple is working on related to iPhone 7 and iOS 10 for another month, but here's an outline of things the company likely (or definitely) has in its crosshairs.

A few are obvious, such as continuing work on Xcode, the company's development tool for building iOS software, and Swift, Apple's new programming language, which has taken off and gained enthusiastic adoption despite being so new.

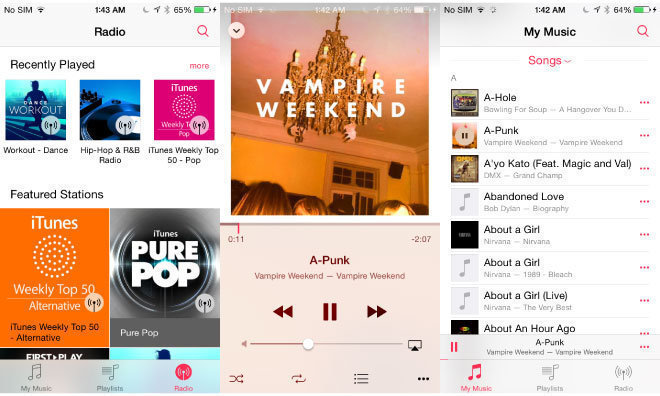

Rumors have also outlined potential changes to Photos, as well as a targeted enhancement of Apple Music, specifically adding 3D Touch support for previews, improving content search and better exposing lyrics.

If Apple were serious about building social media "stickiness" into Apple Music, it would expand its Connect service beyond being a sort of clumsy "other" way to follow artists, adding the ability for Apple Music subscribers to swap "mix tape" playlists, or even to let other users follow them and listen to what they are currently playing, like a pirate radio station. Except in this case, users on both sides would be subscribers, so the music industry (and its artists) would benefit from the way people play, share and listen to music as streams compiled by different types of people. If done right, this could make subscriptions hugely popular and the default way to listen to and share music.

Harvest — and seed — the App Store

Changes to the App Store, particularly to search, are also likely to be introduced at WWDC, tied to the recent leadership changes under the iTunes & Services umbrella. While the App Store itself could benefit from improved search and navigation, Apple's broader App Store ecosystem could also use some attention.

In particular, Apple needs to more aggressively buy up the best apps and component ideas that blossom in its App Store and incorporate them into iOS as integrated services or features. This would ensure that iOS maintains an exclusive, crowdsourced collection of the best mobile software ideas, even those that didn't originate within Apple itself. Apple has built an amazing platform, but it needs to do more than just maintain it. It needs to harvest it, and possibly even seed investment in new app startups through a sort of Apple Venture Capital group. What better use could there be for some of Apple's cash hoard than investing it in getting new developers off the ground on its own platforms?

Many of the flashy features of competitors' smartphones that the media gushes over as "innovation" are really just simple apps that Apple could be funding and incorporating into its platform while it works on the core hardware and OS innovations whose significance the media often fails to grasp. This would entertain simple journalists while also fleshing out useful high-level features that third parties have already developed, and which Apple doesn't need to duplicate on its own.

A generation ago, Microsoft successfully made Windows 95 into a powerful platform not just by building a copy of the Mac desktop, but by assembling and acquiring a portfolio of Office apps to drive adoption of Windows. Apple fumbled its own productivity apps of the era, spinning its AppleWorks suite off into a subsidiary in order to avoid contention with third-party developers. That didn't work out well for the Mac platform in the 90s.

After Steve Jobs returned, Apple put a new focus on finding "killer apps," buying up and developing a creative suite of apps from iTunes, iMovie and GarageBand to Mail, to Logic and Final Cut Pro, to Keynote, Pages and Numbers, all with little regard for how those apps might affect competing third party titles. This turned out to be wildly successful.

Over the past several years, however, very little new app development has apparently originated within Apple, apart from acquiring Beats to build Apple Music; developing new system apps such as Health, Wallet and News; and the new Music Memos tool and the Classroom app, which lets education users take advantage of the new features in iOS 9.3.

Note that Microsoft — after being caught completely off guard by the rise of mobile devices, and completely failing in its efforts to establish Windows Mobile, Windows Phone and then Windows 10 as a viable mobile platform — has turned around and quite rapidly and successfully acquired the components of a mobile Office suite for sale (or subscription) on iOS and Android.

If Apple continues to act like an unbiased platform for other developers' apps, it could again find itself competing against a third-party developer with a serviceable clone of iOS and a portable app suite to run on it, erasing the stickiness that currently makes the iOS ecosystem so valuable. If Apple doesn't want to develop its own software, it should buy other developers with some of the vast cash it has sitting in T-bills earning less than inflation.

New App Extensions

Some of the best examples of third party software Apple could seek out and buy up include innovative App Extensions that users might be less likely to see the value in buying outright, despite their ability to greatly enhance the overall user experience, such as photo and video filters, audio effects, sophisticated text editing, transformation and translation, document editing tools and web content filters.

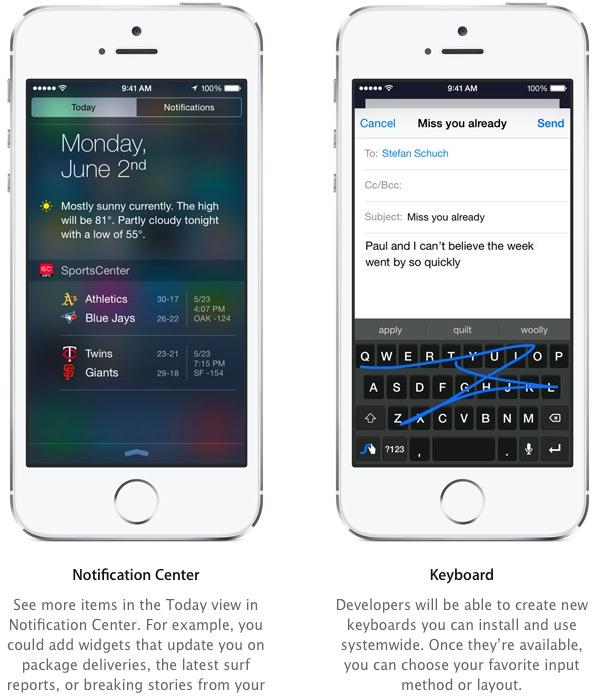

Apple introduced App Extensions two years ago, enabling third-party Share Sheets, Widgets and Keyboards, Photo editing components, File and Document Providers, and Actions.

Last year it added support for building WebKit Content Blockers and Audio Unit Extensions. This year, WWDC is likely to expose new extension points in iOS 10, enabling third parties to further embellish or customize how the system works. Apple should watch what sprouts up and quickly identify the best species for grafting into its platform as default features.

Deeply Integrated Services in iMessage

Facebook, Microsoft, Google and others are all working on bot-like assistants that provide access to a variety of services from a chat-like interface. This has also become quite popular in China, where messaging apps like WeChat allow users to not only communicate with each other, but also to order products or request a ride.

These companies all need to route service requests through their messaging apps because they lack control over a device platform into which they could integrate these services. Facebook, for example, has repeatedly flopped while attempting to launch its own Facebook Phone, and then spectacularly failed in its efforts to layer Facebook Home on top of Android phones in 2013.

Google is facing scrutiny in the U.S. and EU over its efforts to force Android devices to prioritize use of its own services, the same way Microsoft ran into anti-competitive action in response to its own attempts to monopolize the user experience on Windows PCs.

Apple's situation is different because it doesn't license its software to others. It is the only source of iOS-based hardware, and because iOS wields no monopoly control over smartphones, tablets or any other devices, Apple can deeply integrate solutions into iOS.

Apple launched iMessage back in 2011 in an effort to bridge telephony SMS chat with IP-based instant messaging on the Mac. The result is a unique, encrypted messaging platform that has more recently been enhanced to support ephemeral picture and video sharing and sending voice recordings. Via Continuity, Mac users can also join text chats or even answer phone calls.

Given that nearly a billion users regularly use iMessage to communicate on iPhones, integrating Siri into the default messaging app as a new bot-like assistant service seems like an obvious next step. Apple should integrate Siri with iMessage, allowing users to text queries to Siri when they don't want to speak out loud.

Being able to text Siri directly would expand the utility of the personal assistant with little work on Apple's part, and enable users who can't speak (or can't get Siri to recognize what they're saying) to send (and amend) requests and to ask questions directly, without having their conversation vanish when they exit Siri's blurred overlay.

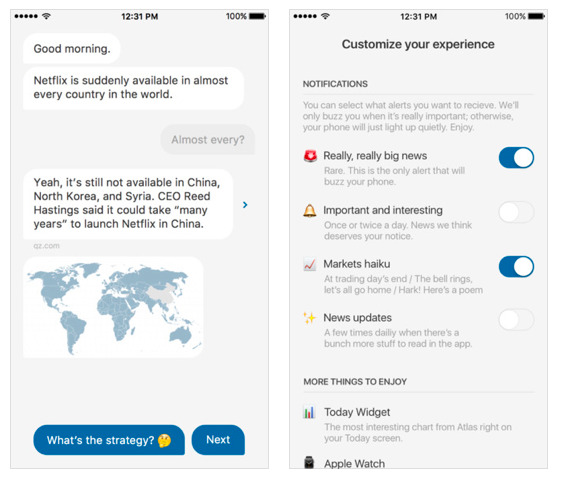

Quartz recently launched a mobile news app that lets users converse via text with a news feed, enabling users to navigate their interests through the headlines. The generation that grew up texting would find conversing with Siri via text to be second nature. And while some users would prefer to ask questions out loud, in many cases the ability to get Siri's answers to queries typed as text messages would be preferable.

Further, once texting users get comfortable with the types of requests Siri can answer, they'd be more likely to ask questions out loud, taking advantage of the always-on Hey Siri feature, both on iPhones and on Apple Watch. Spotlight search on iOS already answers some basic questions, such as performing math or currency conversions. Why not allow Siri to take a crack at suggesting results there, as well as making Siri a special contact that anyone could text? This would also provide Apple with millions of written requests, allowing the company to fine-tune its results algorithms without having to recognize complex speech. It also might make it easier to introduce a text-only Siri in new languages before perfecting voice recognition in those languages.

Apple could also extend Siri to manage and navigate voicemail messages, and even answer calls on your behalf to take messages and transcribe them into text messages. Siri also needs an open API for interacting with third-party apps, allowing users to request a Lyft ride, or to jump into specific features within apps they use, by enabling developers to expand Siri's ability to recognize and understand app-specific features and terms.

Apple should also enable Siri on iOS devices to send requests to other devices, starting with Apple TV. With a phone in hand, you shouldn't need to find your Siri Remote just to navigate your TV programming. HomeKit already links Siri to external devices, so it's puzzling why Apple TV isn't easy to direct from nearby users' iPhones. Minimally, the next version of Remote for iOS should enable support for this, but Siri should also be smart enough to interpret and send commands directly to your Apple TV, the same way you can tell Siri to turn your Hue lights red or turn up the temperature on your HomeKit thermostat.

It also appears very likely that Apple will use iMessage as a platform for personal Apple Pay, allowing users to transfer money to others with just a few taps and a Touch ID press. Apple could also capitalize on the popularity of Snapchat-style ephemeral message sharing by making it easier to add simple photo captions and iChat Theater-style video filters to live images users share over iMessage. Despite its inscrutable user interface, Snapchat is rapidly becoming the way young people send postcard-like messages to their friends. Apple can keep iMessage relevant by maintaining its own cute ways to embellish photos and apply silly video transforms, keeping its users in blue bubbles rather than in third-party apps with ports for Android.

Digital Touch

Apple Watch introduced an expansion of messaging with Digital Touch, an interface that lets you choose a friend, then call, text or, via Digital Touch, doodle an animated message. The downside to Digital Touch is that it only works between Apple Watch users. This seems like a mistake, one that Apple should address.

By adding the ability to send and receive Digital Touch messages from any iPhone (or iOS device, or even a Mac), Apple could greatly popularize the format and radically increase its potential audience network, capitalizing on and drawing new attention to the underutilized Apple Watch feature.

Digital Touch also supports taps (sent as Taptic Engine vibrations) and heartbeats. There's no reason why these can't also be sent to non-Apple Watch users; Apple really needs to recognize this if it wants Digital Touch to be anything other than a curiosity locked up within the isolating walls of Apple Watch. Along with iMessage, Digital Touch on Apple Watch could also gain the ability to send money via Apple Pay, making it even more popular.

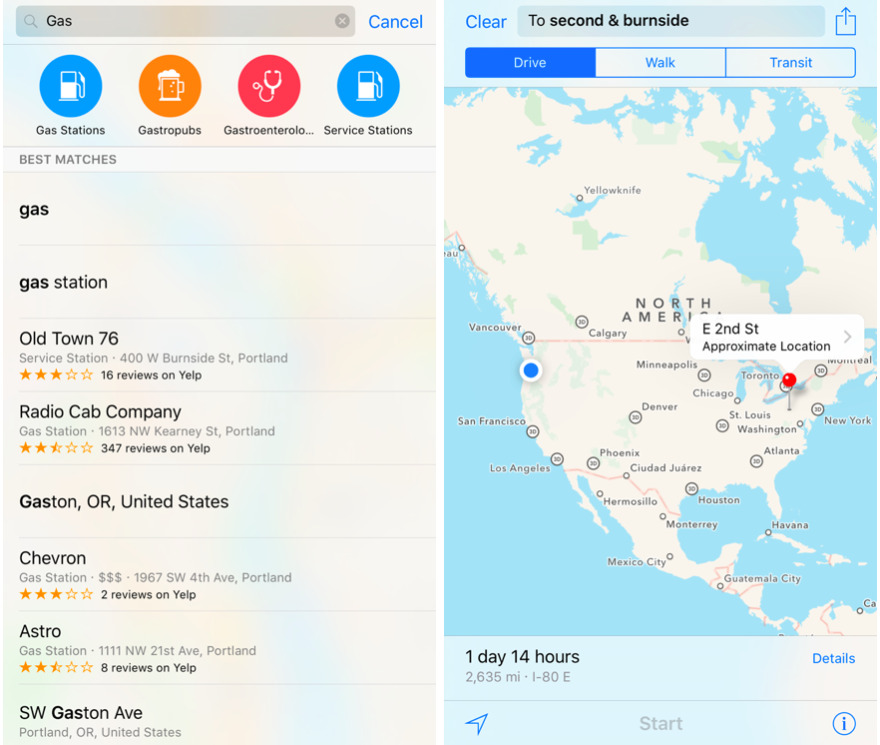

Maps, Flyover & Spotlight

Apple should also greatly enhance Maps-related searches, either by intelligently limiting searches to local results or by adding a UI element that lets users do this manually. Too frequently, a search obviously intended for nearby businesses returns absurd results from across the country, if not from the other side of the globe.

Whoever is managing Maps on iOS should be forced to use this product until it stops offering such irrelevant suggestions. Maps also needs smarter parsing of natural language searches, such as a request for a specific intersection of nearby streets.

Another way Apple could dramatically enhance Maps is to make it easier to create Flyover sequences that users could share, including a dynamic Flyover of directions to a given location, or a series of landmarks that anyone could contribute toward, vastly expanding the current support for limited Flyover Tours of select cities.

Last year, Apple introduced a smarter Spotlight with "intelligence" support for deep linking into third party apps, such as finding recipes in a food app. That team is no doubt working on enhancing how iOS devices search and the relevance of the results they present. Some of this intelligence needs to be shared with the search in Maps, which falls down on some of the same queries that work correctly when given to Spotlight. And as noted earlier, Siri and Spotlight should also mind-meld rather than offering two separate worlds of query.

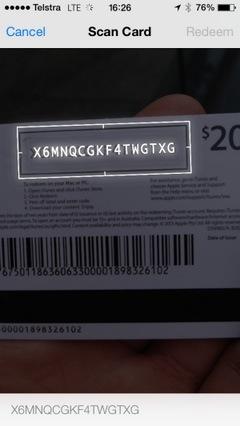

OCR everywhere

Another feature conspicuously missing from iOS on the platform level is OCR, or optical character recognition, which enables the system to extract text from static images or a live camera feed. Apple already has this ability, and uses it in iTunes to redeem gift cards or to read the numbers and dates on user credit cards when setting up Apple Pay.

OCR should be turned into a system-wide service that lets anyone extract the text of an image and paste it into Notes or an email, send it as a text, or recognize it as actionable data for Data Detectors, such as pulling up a Map in response to "seeing" an address in a document or business card, and then offering to create a new contact from that information.

Some of these features already exist in third-party apps. Apple's App Store acts as a multibillion-dollar startup incubator for software ideas, but apart from collecting developer fees and a percentage of sales, Apple itself doesn't benefit from the value created there as much as it could if it were more actively picking up and funding the ideas bubbling up to the top of the meritocracy.

MDM, Enterprise & Healthcare

Apple has already taken on efforts to enhance Mobile Device Management for multiple iPads in education using its new Classroom app. It really needs to enhance its own MDM offerings for institutional and enterprise users, and is likely to eventually use that support to also enable multiple users per device in both corporate and family settings.

Last year Apple introduced new multitasking features aimed at iPads. Since then, it has launched two new iPad Pro models. Apple is likely to add enhanced support in iOS 10 for performing more complex tasks and workflows on that new hardware. This could include the ability to pull Today Widgets out onto the Home screen to take better advantage of the larger screens on iPad Pro. Apple may also add new iOS 10 features tied to the iPad Pro's new Apple Pencil.

Tim Cook's Apple has focused new attention on building enterprise partnerships, most notably with IBM's MobileFirst apps, SAP's HANA platform for enterprise resource planning (operations, financials and human resources), and Cisco's networking products.

iOS 10 will likely build in additional features needed to enhance the experience of using iOS devices in enterprise environments, given that iPhones and iPads have taken a decisive lead in corporate, higher education and government adoption.

Apple has also entered new organizational partnerships in healthcare, built around ResearchKit and the new CareKit. Both initiatives not only support university research but are also designed to integrate with large-scale healthcare data, including Epic Systems' leading digital health records system. Large hospital networks, including Kaiser Permanente, are now evaluating ways to become decisively more mobile, so expect a continued effort to enhance how iOS devices, and Apple Watch, integrate to serve the needs of healthcare professionals.

What else would you like to see in iOS 10? Let us know in the comments below.

Daniel Eran Dilger


71 Comments

I think iOS on the iPad feels like a stretched-out iPhone. 3rd party apps have done well designing apps that take advantage of the bigger screen, but Apple, at the OS level and in its own apps, has not. Lots of wasted space and not taking advantage of the big screen. That is what's keeping the iPad from really being a true laptop replacement and the Pro from being a true pro device. Apple needs to do better with iOS on the iPad.

And Apple bringing their "pro" apps like FCX & Logic to the iPad would help bring more credibility on the iPad Pro as a pro device.

QR reader built into the camera app?

What about the Mac???

Some of the platform restrictions lifted - especially sorting out the crippled BT MAP implementation which is a real handicap in the automotive market