New benchmarks say Apple Vision Pro has by far the best system of any major headset for relaying views of a user's surroundings to them.

Meta's Mark Zuckerberg didn't mention this point when he reviewed the Apple Vision Pro. Both Meta's Quest 3 and Apple's headset are mixed reality (MR) devices, which means they both show the wearer a view of their surroundings, overlaid with digital content.

The process involves cameras on the headset relaying what they see to the screens in front of the wearer's eyes. It's called see-through, and Apple has claimed that Apple Vision Pro does this with groundbreaking speed.

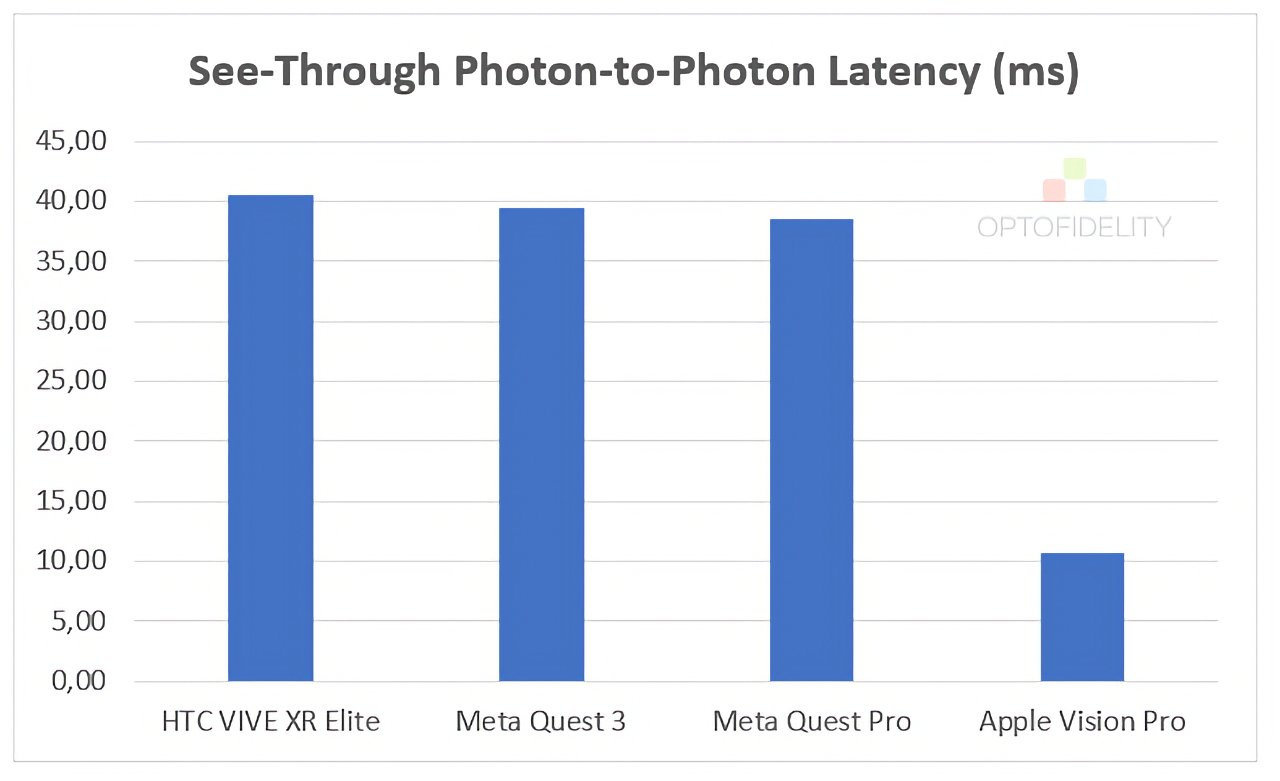

According to new benchmark results, it does. OptoFidelity, which makes testing equipment, investigated the see-through capabilities of four major MR headsets and measured what's called the photon-to-photon latency.

Photon-to-photon latency measures the time it takes for an image of the outside world to pass through the headset and reach the user's eyes. Using its OptoFidelity Buddy Test System, the company measured the photon-to-photon latency of:

- Apple Vision Pro

- HTC VIVE XR Elite

- Meta Quest 3

- Meta Quest Pro

The three non-Apple headsets came in at around 40 milliseconds. Previous tests with them reportedly saw results ranging from around 35 milliseconds to 40 milliseconds. The testers say this figure used to be considered a good standard.

Apple Vision Pro's see-through latency is 11 milliseconds.

That makes it almost four times faster at relaying the real world to the headset's wearer. Its closest rival was the Quest Pro, which came out at just under 40 milliseconds.
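The "almost four times" figure follows from simple arithmetic on the two quoted latencies. The short Python sketch below works it through, using the article's rounded figures of 11 and 40 milliseconds. The helper names and the 90 Hz refresh rate are illustrative assumptions, not part of OptoFidelity's benchmark; the refresh rate is only there to show how a latency can be read as a number of display frames.

```python
# Approximate figures quoted in the article, in milliseconds.
VISION_PRO_MS = 11.0
OTHER_HEADSETS_MS = 40.0  # "around 40 ms" for the three non-Apple headsets

# Illustrative assumption, not a figure from the benchmark.
ASSUMED_REFRESH_HZ = 90.0


def speedup(baseline_ms: float, candidate_ms: float) -> float:
    """Return how many times lower the candidate latency is than the baseline."""
    return baseline_ms / candidate_ms


def latency_in_frames(latency_ms: float, refresh_hz: float) -> float:
    """Express a latency as a number of display refresh intervals."""
    frame_ms = 1000.0 / refresh_hz
    return latency_ms / frame_ms


ratio = speedup(OTHER_HEADSETS_MS, VISION_PRO_MS)
saved_ms = OTHER_HEADSETS_MS - VISION_PRO_MS

print(f"Speedup: {ratio:.2f}x")
print(f"Latency saved: {saved_ms:.0f} ms")
print(f"11 ms at the assumed refresh rate: "
      f"{latency_in_frames(VISION_PRO_MS, ASSUMED_REFRESH_HZ):.2f} frames")
print(f"40 ms at the assumed refresh rate: "
      f"{latency_in_frames(OTHER_HEADSETS_MS, ASSUMED_REFRESH_HZ):.2f} frames")
```

With the rounded inputs, the ratio works out to roughly 3.6, which is where "almost four times faster" comes from.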

All of this means Apple is correct in saying it has groundbreaking technology in the Apple Vision Pro, at least in terms of its record-low latency. But even with by far the best latency of the major headsets, Apple Vision Pro still has a question mark over its intended audience.

William Gallagher

8 Comments

Very nice test.

And to think, this see-through photon-to-photon latency needs to drop by half to 6 ms to expand headset use cases. Crazy how much compute and lower latency this product needs to reach maturity.

10 finger typing on a virtual keyboard is going to need some bonkers hand tracking too. It may need to use a different keyboard layout to really do it.

When AR games start taking advantage of Apple's RealityKit, it is really going to make the Quest 3 look like ancient technology. The scene matched lighting of Apple's game engine far surpasses Unity and Unreal for AR-style applications. The GPU is capable of rendering objects with very intricate details devoid of normal mapping tricks that make Quest 3 games look fake. I can't wait for developers to utilize this in new games. Open any of the many AR QuickLook demos on the web for what RealityKit is capable of.

This is amazing performance, compared to the competition. However, I haven’t noticed any lag when using my Quest 3, so I’m curious about real world impact.