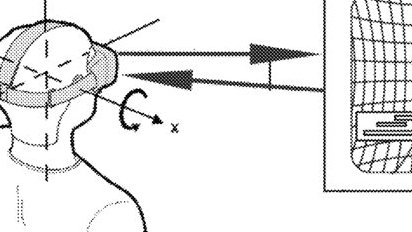

Apple working on Spatial Audio system for virtual and mixed reality devices

Apple is developing a system that could bring spatial audio to virtual or mixed reality platforms, potentially for a head-mounted device such as Apple Glass.

Mike Peterson

Malcolm Owen

William Gallagher

Wesley Hilliard
